How AI Detectors Work: Complete Guide to Detecting AI-Generated Content in 2025

The Growing Need for AI Detection

Artificial intelligence has revolutionized content creation, with tools like ChatGPT, Claude, and Gemini generating human-quality text in seconds. Students complete essays instantly, marketers produce thousands of articles effortlessly, and professionals draft reports with AI assistance. This explosion of AI-generated content has created an urgent need for reliable detection—schools need to identify AI-written essays, publishers must verify content authenticity, and platforms require tools to detect automated spam and misinformation.

AI detectors represent the technological response to this challenge. These sophisticated systems analyze text to determine whether humans or artificial intelligence created it, examining patterns invisible to human readers. Understanding how AI detectors work has become essential for educators evaluating student submissions, content creators ensuring originality, businesses maintaining quality standards, and anyone navigating the increasingly blurred line between human and machine-generated content.

The accuracy and reliability of AI detection technology directly impact critical decisions—academic integrity determinations, content monetization eligibility, hiring decisions based on writing samples, and legal proceedings involving content authenticity. Yet AI detectors remain imperfect, with false positives flagging human writing as AI-generated and sophisticated techniques evading detection. This comprehensive guide reveals the technical mechanisms behind AI detection, examines real-world accuracy, explores limitations and challenges, and provides practical guidance for both detecting AI content and understanding detection results.

What is an AI Detector? Understanding the Technology

An AI detector (also called an AI content detector or AI writing detector) is a software tool that analyzes text to estimate the probability that artificial intelligence, rather than a human, generated it. These systems use machine learning models trained to recognize the patterns, linguistic features, and statistical properties that characterize AI-generated versus human-written content.

Core Function and Purpose

AI detectors serve as authenticity verification systems for written content. Where plagiarism detectors compare text against existing sources, AI detectors analyze the inherent characteristics that distinguish machine from human writing. Rather than checking for copied content, these tools look for the telltale statistical signs of AI generation.

Primary Applications:

Academic Integrity: Schools and universities use AI detectors to identify students submitting AI-written essays, maintaining educational standards requiring original student work.

Content Verification: Publishers, platforms, and media organizations verify content authenticity, ensuring human authorship where required or disclosed AI assistance where present.

Quality Assurance: Businesses employing writers use AI detection to ensure deliverables meet human-authorship requirements and contract terms.

Platform Moderation: Social media and content platforms identify AI-generated spam, fake reviews, automated misinformation campaigns, and bot-generated comments.

Legal and Professional Contexts: Courts, licensing boards, and professional organizations verify document authenticity in contexts where AI-generated content raises concerns.

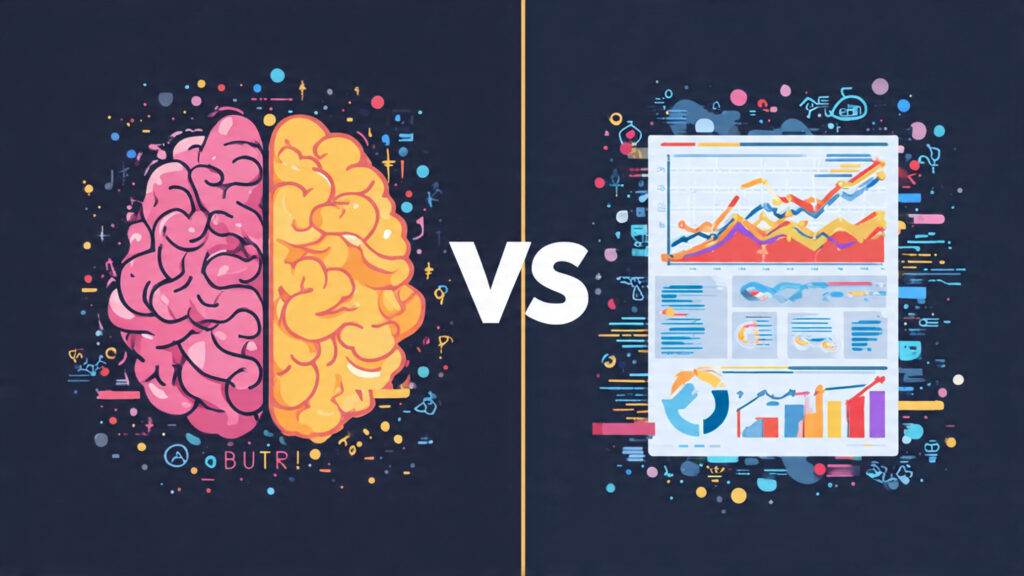

How AI Detectors Differ from Plagiarism Checkers

Understanding this distinction clarifies what AI detectors actually do:

Plagiarism Detectors:

- Compare text against existing content databases

- Identify copied or closely paraphrased material

- Check for proper citation and attribution

- Flag unoriginal content from known sources

- Example: Turnitin, Copyscape, Grammarly plagiarism checker

AI Detectors:

- Analyze inherent text characteristics and patterns

- Identify statistical signatures of AI generation

- Don’t compare against external sources

- Flag content showing machine-generated patterns

- Example: GPTZero, Originality.ai, Winston AI

Key Difference: AI detectors analyze how text was written (human patterns vs. AI patterns), while plagiarism checkers identify what was copied (matching existing sources).

The Challenge: Distinguishing Human from AI Writing

Modern language models generate remarkably human-like text, making detection technically challenging. AI writing exhibits:

- High Grammatical Accuracy: Fewer errors than typical human writing

- Coherent Structure: Logical flow and organization

- Contextual Relevance: Appropriate vocabulary and topic knowledge

- Natural Language: Sentences that sound authentically human

This quality makes simple rule-based detection ineffective. AI detectors must identify subtle statistical patterns, linguistic fingerprints, and stylistic characteristics that distinguish even sophisticated AI text from human authorship.

The Science Behind AI Detection: Core Mechanisms

AI detectors employ multiple analytical techniques to identify machine-generated content. Understanding these mechanisms reveals both capabilities and limitations.

Mechanism 1: Perplexity Analysis

Perplexity measures how “surprised” a language model is by text. Lower perplexity indicates predictable, expected word sequences; higher perplexity suggests unexpected, creative language choices.

How It Works:

Language models assign probabilities to word sequences based on their training data. When analyzing text, a detector uses such a model to calculate how predictable each word choice is given the preceding context. AI-generated text typically shows lower perplexity because language models tend to pick high-probability words (the statistically likely next words), especially under common decoding settings that favor likely choices.
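Formally, perplexity is the exponential of the average negative log-probability per token: PPL = exp(-(1/N) · Σᵢ log p(wᵢ | preceding words)). The minimal sketch below assumes the per-token probabilities have already been obtained from some scoring language model. How those probabilities are produced, and the example values, are illustrative assumptions and do not reflect any specific detector's internals.

```python
import math
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """Perplexity = exp of the average negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    if any(p <= 0 or p > 1 for p in token_probs):
        raise ValueError("probabilities must be in (0, 1]")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)


# Hypothetical per-token probabilities a scoring model might assign.
predictable = [0.60, 0.55, 0.70, 0.65, 0.50]  # common, expected word choices
surprising = [0.20, 0.05, 0.10, 0.30, 0.08]   # creative, less expected choices

print(round(perplexity(predictable), 2))
print(round(perplexity(surprising), 2))
```

A text where every token has probability 1/k has perplexity exactly k. Perplexity therefore reads as "the model was, on average, choosing among about k equally likely words."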

Example Analysis:

Human Writing: “The thunderous storm unleashed its fury, drenching everything in sight.”

- “Thunderous” before “storm” – moderately predictable

- “Unleashed its fury” – creative expression, less predictable

- “Drenching” – specific word choice among many options

- Result: Higher perplexity from creative, varied word choices

AI Writing: “The heavy rain caused significant flooding in the area.”

- “Heavy” before “rain” – highly predictable

- “Caused significant” – common phrase structure

- “Flooding in the area” – standard phrasing

- Result: Lower perplexity from predictable, common patterns

Detection Signal: Consistently low perplexity across an entire text suggests AI generation, as humans naturally include more unpredictable word choices, creative expressions, and idiosyncratic phrasing.

Limitations: Advanced prompting techniques can increase AI output perplexity, and some human writing (especially technical or formal writing) naturally shows lower perplexity, creating false positives.

Mechanism 2: Burstiness Analysis

Burstiness measures variation in sentence length, complexity, and structure throughout text. Humans write with natural variability—some sentences short and punchy, others long and complex. AI often produces more uniform output.

How It Works:

Detectors analyze sentence length distribution, syntactic complexity variation, paragraph structure consistency, and rhythm changes throughout text. Human writing typically shows higher burstiness with deliberate variation for emphasis, pacing, and style.

Example Analysis:

Human Writing:

- Sentence 1: 8 words (short)

- Sentence 2: 23 words (long, complex)

- Sentence 3: 12 words (medium)

- Sentence 4: 5 words (very short, emphasis)

- High burstiness: Significant variation

AI Writing:

- Sentence 1: 15 words (medium)

- Sentence 2: 17 words (medium)

- Sentence 3: 14 words (medium)

- Sentence 4: 16 words (medium)

- Low burstiness: Consistent uniformity
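One simple way to put a number on the contrast above is the coefficient of variation of sentence length (standard deviation divided by mean). The sketch below uses that as a proxy for burstiness. It is an assumption for illustration: commercial detectors do not publish their exact burstiness formulas, and some measure variation in per-sentence perplexity rather than length. The sentence splitter is deliberately naive.

```python
import re
import statistics
from typing import List


def sentence_lengths(text: str) -> List[int]:
    """Split on '.', '!' or '?' (a naive splitter) and count words per sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(lengths: List[int]) -> float:
    """Coefficient of variation of sentence length: population std dev / mean."""
    if len(lengths) < 2:
        return 0.0
    mean = statistics.mean(lengths)
    if mean == 0:
        return 0.0
    return statistics.pstdev(lengths) / mean


human = [8, 23, 12, 5]   # sentence lengths from the human example above
ai = [15, 17, 14, 16]    # sentence lengths from the AI example above
print(round(burstiness(human), 3), round(burstiness(ai), 3))
print(sentence_lengths("One two. Three four five! Six?"))
```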

Detection Signal: Text with consistent sentence length, uniform complexity, and regular structure suggests AI generation. Human writers naturally vary pacing and structure for rhetorical effect.

Limitations: Technical writing, scientific papers, and formal business documents often require consistent structure, reducing natural burstiness and potentially triggering false positives.

Mechanism 3: Statistical Pattern Recognition

AI detectors analyze numerous statistical features distinguishing human from machine-generated text:

N-gram Frequency Analysis: Examining common word sequences (bigrams, trigrams) that appear with different frequencies in human versus AI writing.

Vocabulary Diversity: Measuring lexical richness and repetition patterns. AI sometimes shows specific vocabulary biases from training data.

Transition Probability Patterns: Analyzing how likely specific word transitions are. AI models favor high-probability transitions consistently.

Syntactic Pattern Distribution: Examining sentence structure variety. AI may overuse certain grammatical constructions while underusing others.

Semantic Coherence Measures: Evaluating meaning consistency and topical flow. AI sometimes maintains more uniform semantic density than human writing.
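The sketch below computes three of these features from raw text: n-gram frequency, vocabulary diversity (type-token ratio), and a bigram transition probability. Real detectors compare such statistics against reference distributions learned from large corpora. The tokenizer and the sample sentence here are illustrative assumptions.

```python
import re
from collections import Counter
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    """Lowercase word tokens; a crude stand-in for a real tokenizer."""
    return re.findall(r"[a-z']+", text.lower())


def type_token_ratio(tokens: List[str]) -> float:
    """Distinct words / total words: a rough vocabulary-diversity measure."""
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def top_ngrams(tokens: List[str], n: int = 2, k: int = 3) -> List[Tuple[Tuple[str, ...], int]]:
    """Most frequent n-grams (bigrams by default) with their counts."""
    grams = zip(*(tokens[i:] for i in range(n)))
    return Counter(grams).most_common(k)


def transition_prob(tokens: List[str], prev: str, nxt: str) -> float:
    """Estimated P(next word | previous word) from bigram counts in this text."""
    follows = [b for a, b in zip(tokens, tokens[1:]) if a == prev]
    return follows.count(nxt) / len(follows) if follows else 0.0


text = "It is important to note that it is important to stay informed."
tokens = tokenize(text)
print(round(type_token_ratio(tokens), 2))
print(top_ngrams(tokens))
print(transition_prob(tokens, "to", "note"))
```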

Mechanism 4: Machine Learning Classifiers

Modern AI detectors employ trained machine learning models that learn to distinguish human from AI writing through exposure to millions of examples.

Training Process:

- Data Collection: Gather large datasets of confirmed human-written text and confirmed AI-generated text

- Feature Extraction: Convert text into numerical features (perplexity, burstiness, statistical measures)

- Model Training: Train classifiers (Random Forests, Neural Networks, Support Vector Machines) to distinguish classes

- Validation: Test accuracy on held-out datasets not seen during training

- Deployment: Apply trained models to analyze new, unknown text
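The five steps above can be sketched end to end with a small logistic-regression classifier written in plain Python. This is an assumption for illustration: commercial tools use their own, usually far larger, models. The feature values are invented toy numbers, not real measurements, and feature extraction is assumed to have already produced one [perplexity, burstiness] pair per document.

```python
import math
from typing import List, Sequence, Tuple

Row = List[float]


def fit_scaler(rows: Sequence[Row]) -> Tuple[Row, Row]:
    """Per-feature mean and std dev, so features on different scales train evenly."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    stds = [math.sqrt(sum((x - m) ** 2 for x in c) / len(c)) or 1.0
            for c, m in zip(cols, means)]
    return means, stds


def scale(row: Row, means: Row, stds: Row) -> Row:
    return [(x - m) / s for x, m, s in zip(row, means, stds)]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X: Sequence[Row], y: Sequence[int],
                   lr: float = 0.5, epochs: int = 500) -> Tuple[Row, float]:
    """Batch gradient descent on log loss; returns (weights, bias)."""
    w, b, n = [0.0] * len(X[0]), 0.0, len(X)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            grad_w = [g + err * xj for g, xj in zip(grad_w, xi)]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_ai_probability(row: Row, model) -> float:
    w, b, means, stds = model
    x = scale(row, means, stds)
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Invented toy features [perplexity, burstiness]; label 1 = AI, 0 = human.
train_X = [[72.0, 0.61], [85.0, 0.55], [64.0, 0.48],
           [18.0, 0.09], [22.0, 0.12], [15.0, 0.07]]
train_y = [0, 0, 0, 1, 1, 1]

means, stds = fit_scaler(train_X)
w, b = train_logistic([scale(r, means, stds) for r in train_X], train_y)
model = (w, b, means, stds)

# Validation on held-out rows the model never saw during training.
held_out = [([70.0, 0.50], 0), ([20.0, 0.10], 1)]
for features, label in held_out:
    print(features, label, round(predict_ai_probability(features, model), 3))
```

Real training sets contain many thousands of documents and many more features. That is also why, as noted below, detectors struggle with text from AI systems that were absent from the training data.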

Popular Classification Approaches:

Supervised Learning Models: Train on labeled examples of human and AI text, learning discriminative features. Most commercial AI detectors use this approach.

Neural Network Classifiers: Deep learning models capturing complex patterns through multiple layers. Can detect subtle linguistic fingerprints.

Ensemble Methods: Combine multiple detection techniques, improving reliability through diverse analytical approaches.

Training Challenges: Requires large amounts of labeled data, must update regularly as AI models improve, and faces difficulty with new AI systems not represented in training data.

Mechanism 5: Stylometric Analysis

Stylometry analyzes writing style characteristics unique to individual authors or consistent patterns in AI outputs.

Analyzed Features:

- Lexical Features: Word choice preferences, vocabulary sophistication, preferred synonyms

- Syntactic Features: Sentence structure preferences, clause usage patterns, grammatical constructions

- Readability Metrics: Flesch-Kincaid scores, grade-level assessments, complexity measures

- Punctuation Patterns: Comma usage, dash preferences, sentence ending patterns

- Rhetorical Devices: Use of questions, exclamations, emphasis techniques

Detection Application: AI writing often shows specific stylometric signatures—certain readability score ranges, particular punctuation patterns, or consistent complexity levels that differ from human writing’s natural variation.
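The sketch below builds a small stylometric profile: the standard Flesch-Kincaid grade-level formula (0.39 × words per sentence + 11.8 × syllables per word − 15.59) plus a few punctuation rates. The syllable counter is a rough vowel-group heuristic and the sentence splitter is naive. Both are simplifying assumptions, so the scores will differ from dedicated readability tools.

```python
import re
from typing import Dict


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, drop a silent final 'e'."""
    w = word.lower()
    n = len(re.findall(r"[aeiouy]+", w))
    if w.endswith("e") and not w.endswith("le") and n > 1:
        n -= 1
    return max(1, n)


def stylometric_profile(text: str) -> Dict[str, float]:
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one word and sentence")
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return {
        # Flesch-Kincaid grade level formula.
        "fk_grade": 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59,
        "commas_per_sentence": text.count(",") / len(sentences),
        "questions_per_sentence": text.count("?") / len(sentences),
        "exclamations_per_sentence": text.count("!") / len(sentences),
    }


simple = "The cat sat. The dog ran."
dense = "Institutional evaluation necessitates comprehensive methodological consideration."
print(stylometric_profile(simple))
print(stylometric_profile(dense))
```

A detector would compare how these values vary across a document (and against typical human ranges) rather than judge any single number.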

Mechanism 6: Watermarking Detection

Some AI systems embed invisible watermarks in generated text—subtle patterns detectable by specialized tools but imperceptible to human readers.

How Watermarking Works:

During text generation, the AI model slightly biases its token selection according to a secret key. In one widely studied design, the key and the preceding token pseudorandomly split the vocabulary into a favored ("green") set and the rest, and the model nudges its probabilities toward green tokens. Over many tokens, this creates a statistical signature that detection tools holding the watermarking key can verify.
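A toy sketch of a keyed "green list" watermark follows. Generation prefers green tokens, and detection counts them and reports a z-score: how many standard deviations the green count sits above what unwatermarked text would produce. Everything here is an illustrative assumption: the SHA-256 partition, the 50% green fraction, the four-candidate stand-in for a language model, and the key. This is not OpenAI's or any vendor's actual method.

```python
import hashlib
import math
import random
from typing import List

GAMMA = 0.5  # assumed fraction of tokens that land on the "green" list


def is_green(prev_token: str, token: str, key: str) -> bool:
    """Keyed hash of (previous token, token) decides green-list membership."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    return digest[0] < int(256 * GAMMA)


def toy_generate(n: int, key: str, watermark: bool, seed: int = 0) -> List[str]:
    """Stand-in for a language model: each step offers 4 random candidate tokens."""
    rng = random.Random(seed)
    vocab = [f"w{i}" for i in range(1000)]
    tokens = ["<s>"]
    for _ in range(n):
        candidates = rng.sample(vocab, 4)
        choice = candidates[0]
        if watermark:
            # Bias generation: prefer the first green candidate when one exists.
            green = [c for c in candidates if is_green(tokens[-1], c, key)]
            if green:
                choice = green[0]
        tokens.append(choice)
    return tokens


def watermark_z_score(tokens: List[str], key: str) -> float:
    """Standard deviations by which the green-token count exceeds chance."""
    t = len(tokens) - 1
    if t < 1:
        raise ValueError("need at least two tokens")
    green = sum(is_green(p, c, key) for p, c in zip(tokens, tokens[1:]))
    return (green - GAMMA * t) / math.sqrt(t * GAMMA * (1 - GAMMA))


KEY = "secret-key"
marked = toy_generate(200, KEY, watermark=True)
plain = toy_generate(200, KEY, watermark=False)
print(round(watermark_z_score(marked, KEY), 1))
print(round(watermark_z_score(plain, KEY), 1))
```

Because the z-score grows with the square root of text length, short passages carry a weak signal. Rewriting enough tokens pushes the green count back toward chance, which is why substantial rewriting can remove the watermark.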

Example Implementation:

OpenAI has researched watermarking approaches where generated text contains detectable patterns. The model slightly favors certain word sequences in ways that accumulate into statistically significant signatures across longer texts.

Advantages:

- High accuracy when watermarking is present

- Robust to minor editing, though heavy paraphrasing weakens the signal

- Provides a quantifiable statistical test of AI generation rather than a guess

Limitations:

- Only works for AI systems implementing watermarking

- Not universally adopted across AI platforms

- Can be removed through substantial rewriting

- Raises privacy and transparency concerns

Popular AI Detection Tools: How They Work

Examining leading AI detectors reveals different technical approaches and varying accuracy rates.

GPTZero: Academic-Focused Detection

GPTZero, developed by Princeton student Edward Tian, specifically targets academic integrity applications.

Technical Approach:

- Perplexity Measurement: Analyzes how predictable text is to language models

- Burstiness Detection: Evaluates sentence-level complexity variation

- Document-Level Analysis: Considers entire text holistically rather than just sentence-by-sentence

- AI Probability Score: Provides percentage likelihood of AI generation

Strengths:

- Free tier available for educators

- Specifically trained on academic writing samples

- Provides detailed sentence-level analysis

- Designed for educational context

Accuracy Reports:

- Claims 98% accuracy on testing datasets

- Independent tests show 70-85% accuracy in real-world conditions

- Higher false positive rates on ESL student writing

- Performs better on longer text samples (300+ words)

Best Use Cases: Academic essay evaluation, student writing assessment, educational integrity monitoring

Originality.AI: Content Marketing Focus

Originality.AI targets content creators, publishers, and SEO professionals.

Technical Approach:

- Multi-Model Detection: Tests against multiple AI systems (GPT-3, GPT-4, Claude, others)

- Plagiarism Integration: Combines AI detection with plagiarism checking

- Credit-Based Pricing: Pay-per-scan model for professional use

- Readability Analysis: Includes content quality metrics beyond just AI detection

Strengths:

- High accuracy on marketing and blog content

- Detects mixed human-AI content

- Provides confidence scores per sentence

- Regular updates for new AI models

Accuracy Reports:

- Claims 96% accuracy for GPT-3 detection

- 83-94% accuracy on GPT-4 in independent tests

- Lower accuracy on heavily edited AI content

- Best performance on unedited AI outputs

Best Use Cases: Content verification for publishers, freelance writer output checking, SEO content authenticity

Winston AI: Enterprise Solution

Winston AI focuses on enterprise and educational institution needs.

Technical Approach:

- Proprietary NLP Models: Custom-trained neural networks

- Multi-Language Support: Detects AI in multiple languages beyond English

- API Access: Integration capabilities for institutional systems

- Bulk Processing: Analyze thousands of documents efficiently

Strengths:

- Enterprise-grade security and privacy

- Handles large-scale implementation

- Customizable for institutional needs

- Detailed reporting and analytics

Accuracy Reports:

- 99.6% accuracy claimed on benchmark datasets

- 80-92% real-world accuracy in independent evaluations

- Strong performance on formal writing

- Struggles with creative or informal content

Best Use Cases: University-wide implementation, corporate compliance, large-scale content verification

Turnitin AI Detector: Academic Standard

Turnitin, the leader in plagiarism detection, added AI detection to its platform.

Technical Approach:

- Integrated Platform: Combined plagiarism and AI detection

- Massive Training Data: Leverages years of academic writing samples

- Institutional Trust: Established credibility in education

- Detailed Reports: Highlights suspected AI-generated passages

Strengths:

- Already widely adopted in education

- Familiar interface for educators

- Combined plagiarism and AI checking

- Institutional credibility and support

Accuracy Reports:

- Claims 98% true positive rate (correct AI identification)

- 0.1% false positive rate on their testing

- Independent tests show 60-80% real-world accuracy

- Better performance on longer academic papers

Best Use Cases: Higher education institutions, academic integrity offices, established educational systems

Content at Scale AI Detector: Free Tool

Content at Scale AI Detector offers free AI detection for general use.

Technical Approach:

- Completely Free: No credit system or account required

- Predictability Scoring: Analyzes text predictability patterns

- Human Content Score: Inverse metric showing human likelihood

- Sentence Highlighting: Visual indicators of AI probability

Strengths:

- No cost barrier for casual use

- Simple, accessible interface

- Quick results without registration

- Transparent scoring methodology

Limitations:

- Lower accuracy than paid alternatives (60-75%)

- Basic feature set

- May struggle with sophisticated AI text

- Limited support and updates

Best Use Cases: Quick checks, preliminary screening, general curiosity, budget-constrained users

Real-World Accuracy: Testing AI Detectors

Understanding real-world performance requires examining independent testing beyond vendor claims.

Controlled Testing Results

Independent researchers have tested leading AI detectors under controlled conditions:

Stanford Study (2023): Tested GPTZero, Originality.AI, and Turnitin on a dataset of 500 human-written and 500 AI-generated academic essays:

Results:

- GPTZero: 72% accuracy, 19% false positive rate

- Originality.AI: 78% accuracy, 12% false positive rate

- Turnitin: 68% accuracy, 8% false positive rate

Key Finding: All detectors showed significant false positive rates, incorrectly flagging human writing as AI-generated, particularly for non-native English speakers.
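Accuracy and false positive rate measure different things, so a detector can post a respectable accuracy figure while still flagging a worrying share of human writing. The sketch below shows the standard confusion-matrix definitions. The counts are invented for illustration and are not taken from any study.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # AI text correctly flagged
    fn: int  # AI text missed
    fp: int  # human text wrongly flagged
    tn: int  # human text correctly cleared

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fn + self.fp + self.tn)

    def true_positive_rate(self) -> float:
        return self.tp / (self.tp + self.fn)

    def false_positive_rate(self) -> float:
        return self.fp / (self.fp + self.tn)

    def precision(self) -> float:
        """Share of flagged documents that really are AI-generated."""
        return self.tp / (self.tp + self.fp)


# Hypothetical counts for a 500 AI / 500 human test set (not from any study).
c = Confusion(tp=400, fn=100, fp=60, tn=440)
print(c.accuracy(), c.true_positive_rate(), c.false_positive_rate(), round(c.precision(), 3))
```

Here the detector is 84% accurate overall, yet it wrongly flags 12% of the human essays.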

False Positive Problem: Human Writing Flagged as AI

Perhaps the most concerning limitation of AI detectors is incorrectly identifying human writing as AI-generated.

Common False Positive Triggers:

Non-Native English Speakers: ESL students writing in clear, grammatically correct English often trigger detectors because their writing may use more predictable word choices and simpler sentence structures.

Formal Academic Writing: Technical papers, scientific reports, and formal essays naturally show lower burstiness and higher predictability, characteristics detectors associate with AI.

Well-Edited Content: Multiple revision rounds can smooth out natural writing variation, making edited human work appear AI-generated.

Formulaic Writing: Following strict templates or style guides (legal documents, technical specifications) produces consistent patterns resembling AI output.

Real-World Impact: Students falsely accused of AI use face serious consequences—failing grades, academic integrity violations, disciplinary action—despite writing authentically. This creates significant ethical concerns around detector reliability.
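False positive rates matter more than they appear because, in a typical class, most submissions are human-written. The short Bayes' rule calculation below shows what share of flagged work would actually be human-written at several false positive rates. It assumes, for illustration only, that 10% of submissions are AI-written and that the detector catches 98% of them.

```python
def prob_ai_given_flag(tpr: float, fpr: float, share_ai: float) -> float:
    """Bayes' rule: P(actually AI | flagged)."""
    flagged_ai = tpr * share_ai
    flagged_human = fpr * (1.0 - share_ai)
    return flagged_ai / (flagged_ai + flagged_human)


# Assumed: 10% of submissions are AI-written, detector catches 98% of them.
for fpr in (0.001, 0.01, 0.05, 0.20):
    p = prob_ai_given_flag(tpr=0.98, fpr=fpr, share_ai=0.10)
    print(f"FPR {fpr:.1%}: {1 - p:.1%} of flagged work is human-written")
```

Under these assumptions, a 5% false positive rate means roughly three in ten flagged submissions come from students who wrote their own work.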

False Negative Problem: AI Content Evading Detection

Conversely, AI detectors fail to identify AI-generated content with concerning frequency:

Evasion Techniques:

Human Editing: Light editing of AI-generated text by adding personal anecdotes, varying sentence structure, or adjusting word choices significantly reduces detection rates.

Paraphrasing Tools: Running AI text through paraphrasing software, or asking the AI to rewrite its own output, creates versions that evade detection.

Strategic Prompting: Carefully crafted prompts instructing AI to write in specific styles, include varied sentence structures, or mimic human imperfections reduce detectability.

Mixed Content: Blending AI-generated and human-written sections confuses detectors that analyze full documents.

Newer AI Models: Detection tools lag behind latest AI systems. GPT-4 text often evades detectors trained primarily on GPT-3 outputs.
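A little arithmetic shows why mixed content confuses document-level scoring. Averaging per-sentence scores dilutes a concentrated AI passage, while scanning fixed-size windows of sentences (the idea behind per-sentence highlighting) can still locate it. The per-sentence scores below are hypothetical, and real tools' aggregation methods are not public.

```python
from typing import List, Tuple


def document_score(sentence_scores: List[float]) -> float:
    """Whole-document score as the plain average of per-sentence AI probabilities."""
    return sum(sentence_scores) / len(sentence_scores)


def max_window(sentence_scores: List[float], size: int = 3) -> Tuple[int, float]:
    """Start index and mean score of the highest-scoring run of `size` sentences."""
    if len(sentence_scores) < size:
        raise ValueError("fewer sentences than the window size")
    best_start, best_mean = 0, float("-inf")
    for start in range(len(sentence_scores) - size + 1):
        mean = sum(sentence_scores[start:start + size]) / size
        if mean > best_mean:
            best_start, best_mean = start, mean
    return best_start, best_mean


# Hypothetical per-sentence scores: human prose with a pasted AI passage (indices 4-6).
scores = [0.10, 0.15, 0.12, 0.08, 0.92, 0.95, 0.90, 0.11, 0.14, 0.09]
print(round(document_score(scores), 3))
print(max_window(scores))
```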

Accuracy by Content Type

Detection accuracy varies significantly based on content characteristics:

High Accuracy (80-95%):

- Long-form content (1000+ words)

- Unedited AI outputs from GPT-3

- Generic informational content

- Formal academic essays

- Straightforward explanatory text

Medium Accuracy (60-80%):

- Short-form content (under 500 words)

- Lightly edited AI text

- GPT-4 generated content

- Technical or specialized writing

- Creative or narrative content

Low Accuracy (40-60%):

- Heavily edited AI text

- Mixed human-AI content

- Informal conversational writing

- Content from newer AI models

- Strategic prompt-engineered outputs

Limitations and Challenges of AI Detection

Understanding what AI detectors cannot do is as important as knowing their capabilities.

Challenge 1: The Arms Race Problem

AI detection faces a fundamental arms race: as detectors improve, so do AI systems and evasion techniques.

Evolving AI Models: Each new language model generation becomes harder to detect. GPT-4 produces text closer to human writing than GPT-3, reducing detector accuracy.

Detection-Aware Training: Future AI systems could train specifically to evade detectors, learning patterns that bypass current detection methods.

Adversarial Techniques: Users develop methods to fool detectors, sharing successful evasion strategies online and creating tools automating detection avoidance.

Constant Update Requirement: Detectors must continuously retrain on new AI outputs, creating ongoing development costs and lag time where new AI evades detection.

Challenge 2: No Ground Truth Verification

Unlike plagiarism detection where copied text can be definitively proven through source comparison, AI detection provides only probabilistic assessment without absolute proof.

Uncertainty Problem: Detectors report probability scores (e.g., “85% AI-generated”) but cannot provide definitive answers. Where should the threshold be—80%? 90%? 95%?
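Choosing a threshold is a trade-off: raising it flags less human writing but also catches less AI text. The sketch below computes both rates at several thresholds. The scores and labels are hypothetical, chosen only to make the trade-off visible.

```python
from typing import List, Tuple


def rates_at_threshold(scores: List[float], labels: List[int],
                       threshold: float) -> Tuple[float, float]:
    """Return (true positive rate, false positive rate) when flagging score >= threshold."""
    ai = [s for s, y in zip(scores, labels) if y == 1]
    human = [s for s, y in zip(scores, labels) if y == 0]
    tpr = sum(s >= threshold for s in ai) / len(ai)
    fpr = sum(s >= threshold for s in human) / len(human)
    return tpr, fpr


# Hypothetical detector scores; label 1 = AI-written, 0 = human-written.
scores = [0.97, 0.93, 0.88, 0.82, 0.65, 0.91, 0.84, 0.55, 0.30, 0.12]
labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
for t in (0.80, 0.90, 0.95):
    tpr, fpr = rates_at_threshold(scores, labels, t)
    print(f"threshold {t:.2f}: catches {tpr:.0%} of AI text, flags {fpr:.0%} of human text")
```

No threshold in this toy data catches all AI text without also flagging some human writing. Real score distributions overlap in the same way, which is why the threshold question has no neutral answer.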

Unable to Prove Negative: Detectors cannot definitively prove text was NOT AI-generated, only assign lower probabilities.

Legal and Academic Challenges: Probability scores create evidentiary problems. Is 80% AI probability sufficient to fail a student? To reject a manuscript? To nullify a contract?

Appeals and Disputes: Students or writers accused based on detector scores have legitimate basis to challenge results given documented false positive rates.

Challenge 3: Bias and Fairness Concerns

AI detectors exhibit documented biases that disadvantage specific groups:

Non-Native English Speakers: Multiple studies show significantly higher false positive rates for ESL writers. Students whose first language isn’t English face discrimination from detectors associating clear, grammatically correct English with AI generation.

Neurodivergent Writers: Individuals with autism, ADHD, or other conditions that affect writing style may produce patterns detectors flag as AI-generated.

Socioeconomic Factors: Students without access to writing resources may produce more formulaic writing flagged as AI, while privileged students with editorial assistance pass as human-written.

Demographic Bias in Training Data: If detectors train primarily on writing from specific demographic groups, they may perform worse on writing from underrepresented populations.

Ethical Implications: Using AI detectors in high-stakes decisions without accounting for these biases perpetuates existing inequities in education and professional contexts.

Challenge 4: Mixed Authorship

Real-world AI usage rarely involves purely AI or purely human writing:

AI-Assisted Writing: Writers commonly use AI for brainstorming, outlining, or drafting, then heavily edit and personalize the content. Is this AI-generated? Human-written? Both?

Editing AI Content: Starting with an AI draft and extensively rewriting it creates text that technically originated from AI but contains substantial human input. How should detectors classify this?

Research Assistance: Using AI to gather information, then writing independently in your own words creates human-authored content informed by AI. Should this be flagged?

Citation and Paraphrasing: Incorporating AI-provided facts, statistics, or quotations with proper attribution follows standard research practices. Yet detectors may flag similarity to AI outputs.

Definitional Problem: The lack of clear definitions for “AI-generated” versus “AI-assisted” versus “human-written” creates ambiguity that technical detection alone cannot resolve.

Challenge 5: Transparency and Explainability

AI detectors often function as “black boxes” with limited transparency about detection methods:

Proprietary Algorithms: Companies protect competitive advantage by not revealing detection techniques, preventing independent verification of accuracy claims.

Limited Explanations: Many detectors provide only probability scores without explaining which specific features triggered the AI assessment.

Inability to Contest: Users cannot meaningfully challenge results without understanding what caused the flag.

Trust Requirements: Educational and professional contexts require trusting detector vendors without ability to independently audit methods or verify claims.

Challenge 6: Contextual Limitations

AI detectors analyze only the text itself, missing crucial context:

Writing Process Invisible: Detectors cannot see how content was created—multiple drafts, outlining, research, editing—only the final output.

Author Intent Unknown: Cannot determine if AI use was intentional (plagiarism/cheating) or incidental (grammar checking, brainstorming).

Assignment Context Missing: Don’t know whether AI use was permitted, encouraged, or prohibited for specific assignments.

Individual Baseline Absent: Cannot compare current submission against writer’s typical style and ability level.

Overreliance Risk: Treating detector scores as definitive without considering broader context leads to unjust outcomes.

How to Use AI Detectors Responsibly

Given limitations and challenges, responsible AI detector use requires careful implementation and interpretation.

Best Practices for Educators

1. Use Detection as Screening, Not Proof: Treat AI detectors as preliminary screening tools that identify submissions warranting closer examination, not as definitive evidence of academic dishonesty.

2. Combine Multiple Evidence Sources: Consider detector results alongside writing quality comparison with known student work, unusual stylistic changes, topic knowledge assessment in discussions, and the student’s explanation of their process.

3. Establish Clear Policies: Communicate AI use policies explicitly before assignments, specify what constitutes acceptable AI assistance, explain consequences of violations, and describe how detection tools will be used in evaluation.

4. Provide Educational Context: Teach students about AI capabilities and limitations, explain why academic integrity matters, discuss ethical AI use in academic and professional contexts, and demonstrate proper AI assistance attribution.

5. Create AI-Resistant Assignments: Design assessments that are less vulnerable to AI generation, such as personalized topics that require reflecting on specific experiences, multi-stage submissions that show how the work developed, in-class components that demonstrate knowledge, and oral presentations that require spontaneous responses.

6. Consider Individual Circumstances: Account for student language backgrounds, recognize writing style variation factors, allow opportunities to explain process, and avoid knee-jerk assumptions based solely on detection scores.

Best Practices for Content Creators and Publishers

1. Establish Clear Content Standards: Define acceptable levels of AI assistance, specify disclosure requirements for AI use, document verification processes, and communicate standards to writers.

2. Implement Multi-Layer Verification: Use AI detectors as first-pass screening, conduct editorial review by humans, verify factual claims and citations, and assess content quality beyond authenticity.

3. Focus on Value and Quality: Prioritize content usefulness to audience, evaluate originality of insights and perspective, assess depth beyond surface-level information, and judge overall writing quality.

4. Be Transparent with Audiences: Disclose AI detection practices where appropriate, explain content verification standards, maintain editorial credibility through authentic processes, and acknowledge AI use honestly where present.

Best Practices for Individuals Using AI

1. Understand Your Context Requirements: Know policies in your academic or professional context, clarify acceptable AI assistance levels, document your process when using AI tools, and attribute AI assistance appropriately.

2. Use AI Thoughtfully: Employ AI for ideation, research, and outlining, maintain significant personal input and editing, ensure final work reflects your thinking, and add unique insights beyond AI capability.

3. Expect Detection Possibilities: Assume AI assistance may be detected, prepare to explain your process honestly, keep documentation of your workflow, and maintain integrity in your authorship claims.

4. Focus on Learning and Growth: Use AI to enhance rather than replace skill development, build genuine expertise in your field, develop authentic voice and perspective, and create work you can proudly claim as your own.

The Future of AI Detection

AI detection technology continues evolving to address current limitations and adapt to advancing AI systems.

Emerging Detection Technologies

Behavioral Analysis: Future systems may monitor writing behavior—typing patterns, revision history, time spent—to verify human authorship beyond text analysis alone.

Blockchain Verification: Distributed ledger systems could create tamper-proof records of the content creation process, establishing provenance and authenticity.

Cryptographic Watermarking: Mandatory watermarking in AI systems would enable definitive detection, though implementation faces technical and policy challenges.

Multimodal Detection: Analyzing content across text, images, and other media simultaneously may provide more robust detection of AI generation.

Policy and Standardization Efforts

Detection Accuracy Standards: Industry groups and educational organizations work on establishing minimum accuracy requirements for detection tools used in high-stakes decisions.

Transparency Requirements: Regulatory pressure may mandate disclosure of detection methodologies, accuracy rates, and bias testing results.

AI Disclosure Mandates: Some jurisdictions consider requiring AI systems to identify their outputs, simplifying detection through self-disclosure.

Ethical Guidelines: Professional organizations develop frameworks for responsible AI detection implementation balancing verification needs with fairness and privacy.

Education and Adaptation

AI Literacy: Schools increasingly teach students about AI capabilities, limitations, ethical use, and academic integrity in the AI era.

Assignment Redesign: Educators move toward assessment methods emphasizing skills AI cannot replicate—creative synthesis, critical analysis, personal reflection, and novel problem-solving.

Process-Oriented Evaluation: Shifting from product assessment to process evaluation—portfolios, drafts, presentations—demonstrates learning beyond final deliverable quality.

Embracing AI Assistance: Rather than prohibiting AI use entirely, many contexts establish frameworks for transparent, ethical AI assistance that enhances rather than replaces human capability.

Frequently Asked Questions About AI Detectors

How accurate are AI detectors?

AI detector accuracy varies significantly by tool, content type, and context. Leading detectors claim 95-99% accuracy, but independent testing shows real-world accuracy typically ranges from 60-85%. Accuracy is higher for long-form, unedited AI content and lower for short texts, edited outputs, or newer AI models. False positive rates (incorrectly flagging human writing as AI) range from 5-20%, creating significant concerns for high-stakes applications.

Can AI detectors detect ChatGPT and GPT-4?

Most modern AI detectors can detect ChatGPT (GPT-3.5) with moderate-to-high accuracy (70-90%). GPT-4 detection is more challenging, with accuracy dropping to 60-80% as GPT-4 produces more human-like text. Detection accuracy depends on text length, editing, and prompting techniques. Heavily edited GPT-4 content or strategic prompting can evade many current detectors.

Why do AI detectors flag my human-written content?

AI detectors produce false positives for several reasons: non-native English speakers often write in predictable, grammatically correct patterns that detectors associate with AI; formal or technical writing naturally shares characteristics with AI output; well-edited content loses the natural variation detectors expect in human writing; and formulaic writing that follows templates resembles AI patterns. These false positives raise significant ethical concerns, especially in academic contexts.
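Several of these causes come down to low variation. Burstiness, one of the signals discussed in this guide, is often described as how much sentence length and structure vary across a text. The Python sketch below uses one simple, assumed definition (the coefficient of variation of sentence length in words) to show why uniform, template-like prose can look "machine-like." The helper names and the naive sentence splitter are hypothetical, and commercial detectors do not necessarily compute burstiness this way.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    # Naive split after ., ! or ? followed by whitespace (an assumption;
    # real tools use proper sentence tokenizers).
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Toy burstiness score: std dev of sentence length divided by its mean.

    Higher values mean more varied sentence lengths.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Human-written but highly regular: every sentence has five words.
uniform = (
    "The study examined three factors. The results showed clear trends. "
    "The authors discussed each finding. The paper ends with summaries."
)
# Human-written with natural variation in sentence length.
varied = (
    "I didn't expect this. After three weeks of testing every configuration we "
    "could think of, the results finally made sense. Odd, right? Still, we wrote it up."
)

if __name__ == "__main__":
    print(round(burstiness(uniform), 2), round(burstiness(varied), 2))
```

The uniform passage scores 0.0 and the varied one roughly 0.8. Nothing about the uniform passage is AI-generated; it is simply regular, and that regularity is the property that can push careful or formulaic human writing toward an AI verdict.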

How do you bypass AI detectors?

While this guide focuses on detection rather than evasion, common bypass techniques include substantial human editing that adds personal style and variation, paraphrasing AI outputs with rewriting tools, mixing AI-generated and human-written content, using prompts that request human-like characteristics, and using newer AI models that detectors have not been trained on. However, ethical considerations around AI use and academic integrity should guide these decisions more than technical feasibility does.

Are AI detectors reliable for academic decisions?

AI detectors should not serve as the sole basis for academic integrity decisions. High false positive rates, documented biases against non-native English speakers, the inability to prove authorship definitively, and the lack of visibility into the writing process all argue against treating detector scores as conclusive evidence. Responsible academic use treats detectors as screening tools, combined with additional evidence, dialogue with the student, and contextual judgment, rather than as automatic adjudication.

What is the best AI detector?

No single “best” AI detector exists; the right choice depends on specific needs. GPTZero offers a strong academic focus and free access for educators; Originality.AI provides high accuracy for content marketing applications; Turnitin delivers institutional credibility and integrated plagiarism checking; Winston AI serves enterprise needs with customization and multi-language support. Weigh accuracy requirements, budget, use case, and integration needs when selecting a detection tool.

Can AI detectors see my writing process?

No, AI detectors analyze only the final text submitted, not the creation process. They cannot see drafts, revision history, research conducted, outlines created, or time spent writing. This limitation means detectors miss crucial context about how content was developed, potentially misidentifying legitimate human writing or failing to detect heavily edited AI content. Future systems may incorporate behavioral analysis, but current tools examine text characteristics only.

Do grammar checkers trigger AI detectors?

Using grammar and spell-checking tools such as Grammarly, Microsoft Editor, or Google Docs corrections typically does not trigger AI detectors, because these tools make limited corrections without substantially rewriting content. However, extensive use of AI-powered rewriting, sentence restructuring, or style-improvement features may introduce patterns that detectors flag. Basic proofreading is generally low-risk; AI-powered rewriting warrants more caution.

Conclusion: Navigating the AI Detection Landscape

AI detectors represent an evolving attempt to maintain content authenticity in an era when artificial intelligence generates human-quality text effortlessly. Understanding how these systems work (analyzing perplexity, burstiness, statistical patterns, and signals learned by trained classifiers) reveals both impressive capabilities and significant limitations. No detector is perfectly accurate, false positives harm innocent individuals, biases disadvantage certain groups, and the arms race between detection and evasion continues to escalate.

The technical mechanisms behind AI detection, from perplexity measurement to machine learning classifiers, provide probabilistic assessments rather than definitive proof. These systems are best at identifying unedited, long-form AI content and struggle with edited outputs, sophisticated prompting, newer models, and the mixed-authorship scenarios that are increasingly common in real-world AI use. Real-world accuracy of 60-85% across leading detectors, combined with documented false positive rates, demands cautious interpretation of detection results.

Responsible use of AI detection technology requires acknowledging its limitations explicitly. Educators should not treat detector scores as conclusive evidence of academic dishonesty without further investigation. Publishers need verification processes that extend beyond automated detection. Individuals should understand that legitimate human writing sometimes triggers false flags, particularly writing by non-native English speakers and writing in formal contexts. The ethical cost of detection errors (falsely accused students, rejected content creators, misidentified professional work) underscores the need for careful, context-aware implementation.