Generative AI 2.0: The Next Era of Intelligent Creation

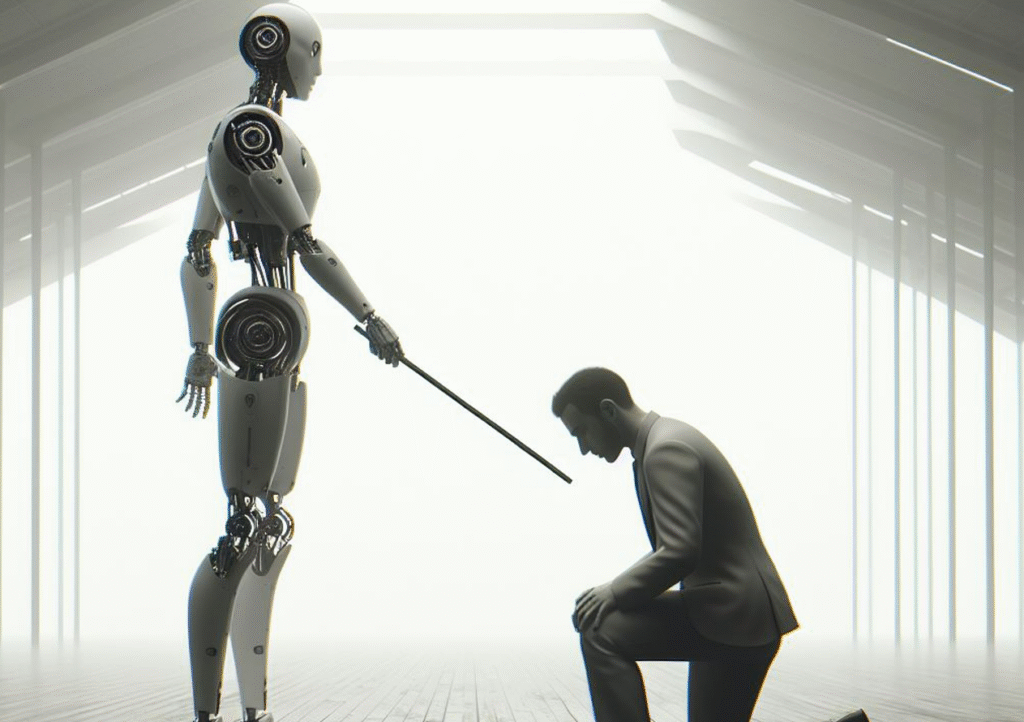

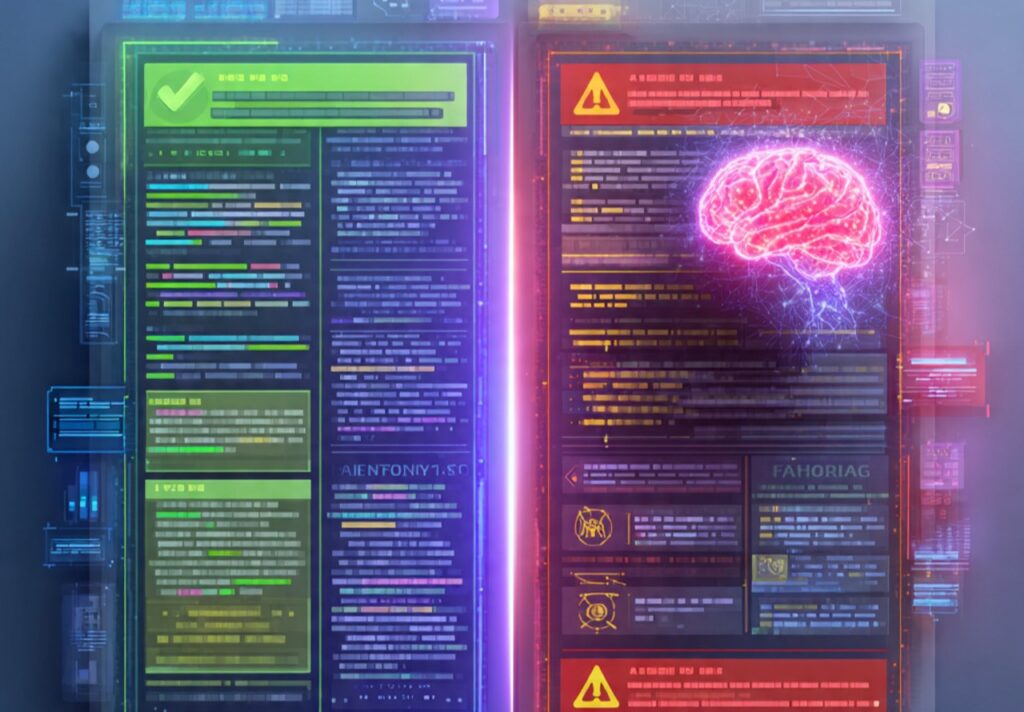

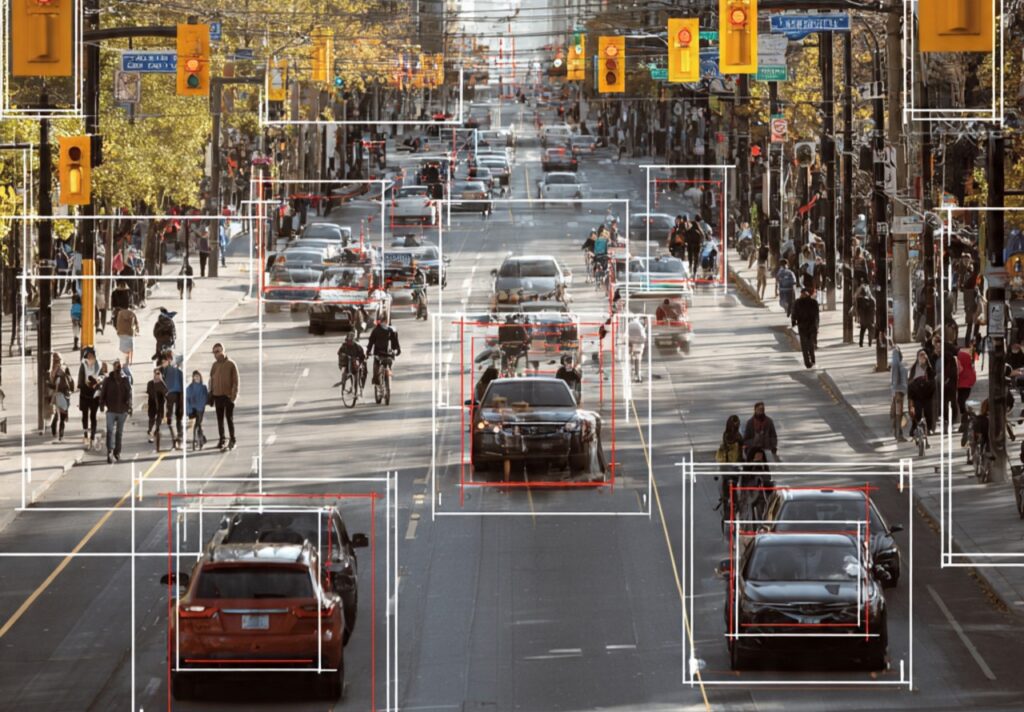

Generative AI has already changed the way we write, design, code, and create. In just a few years, tools that once felt experimental have become part of everyday professional work. Businesses now use AI to draft content, students use it to learn faster, and creators use it to scale their output in ways that were impossible before.

However, what we have experienced so far is only the beginning. A new phase is emerging, often called Generative AI 2.0, and it represents something much bigger than the first wave. This next stage is not simply about generating content quickly. It is about intelligence, systems, reasoning, multimodal understanding, and real-world execution.

Generative AI 1.0 was mainly focused on producing outputs. It could write a blog post, generate an image, or assist with basic automation. Generative AI 2.0 goes far beyond that: AI is becoming more capable, more contextual, and more action-oriented. Instead of being a content machine, it is becoming a true collaborator in modern work.

In this article, you will learn what Generative AI 2.0 really means, how it works, what is changing, and how professionals and businesses can use it strategically instead of simply following trends.

What Is Generative AI 2.0?

Generative AI 2.0 refers to the next evolution of generative models, in which AI moves beyond simple content creation and becomes something closer to an intelligence layer for modern work. Instead of only producing outputs like text or images, these systems can understand complex instructions, connect information across different formats, reason through tasks step by step, and support complete workflows rather than isolated answers.

Generative AI 2.0 is designed not just to respond, but to assist. It can act more like an intelligent partner that understands context, remembers goals, adapts over time, and helps users complete meaningful tasks.
In other words, it is no longer just a creativity tool. It is becoming a full system of intelligence that supports decision-making, productivity, innovation, and execution.

Why Generative AI 2.0 Is a Major Shift

This shift matters because the role of AI is fundamentally changing. Previously, most people used generative AI for speed. It helped them write faster, brainstorm more quickly, or produce designs more efficiently. That alone was valuable, but it was still limited: AI was mainly treated as a shortcut for content production.

Now, businesses are using Generative AI 2.0 for deeper work. It is being applied in strategy, operations, research, product development, customer experience, and automation. Instead of generating content for humans to use manually, AI is increasingly becoming part of the workflow itself.

The transition runs from content creation to content intelligence, from single outputs to full workflow support, and from simple prompting to real collaboration. This is why companies that treat AI as a toy or a trend will fall behind, while those that build systems around it will lead the next era.

How Generative AI 2.0 Works (In Simple Terms)

To understand Generative AI 2.0 properly, it helps to look at what has improved behind the scenes. This new phase feels more powerful because the models are not only larger, but also better at processing meaning, context, and tasks.

More Powerful Foundation Models

Generative AI 2.0 is built on advanced foundation models trained on massive datasets. These models learn language patterns, reasoning structures, and contextual relationships far better than earlier systems. They are still trained to predict the next word (more precisely, the next token) in a sequence, but doing that well across so much data forces them to capture intent, meaning, structure, and relevance. That is why their outputs feel more coherent, more human-like, and more intelligent. This improvement allows AI to handle more complex instructions, longer conversations, and deeper professional tasks.
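The "next word" idea is easiest to see in miniature. The following is a toy bigram model, not how foundation models are actually built: real models use large neural networks over tokens and condition on far more than one previous word. It only illustrates the underlying objective of estimating a probability for each possible next word and choosing from that distribution. The corpus and the function names (`train_bigram`, `next_word_probs`, `generate`) are illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return dict(follows)


def next_word_probs(model: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn raw follow-counts into a probability for each candidate next word."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def generate(model: dict[str, Counter], start: str, length: int) -> str:
    """Greedy generation: repeatedly append the single most likely next word."""
    out = [start.lower()]
    for _ in range(length):
        probs = next_word_probs(model, out[-1])
        if not probs:  # no known continuation, so stop early
            break
        # Ties go to whichever candidate was seen first in the corpus.
        out.append(max(probs, key=probs.get))
    return " ".join(out)


corpus = "ai drafts content . ai drafts code . ai reviews code ."
model = train_bigram(corpus)
print(next_word_probs(model, "ai"))  # "drafts" is twice as likely as "reviews"
print(generate(model, "ai", 3))
```

A counting table like this only ever looks one word back, which is why its output quickly loses the thread. Replacing the table with a neural network that conditions on a long stretch of preceding text is, roughly, what lets foundation models stay coherent over whole documents.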
Multimodal Intelligence

One of the biggest upgrades in Generative AI 2.0 is multimodality. Earlier AI systems worked mostly with text. Now, AI can process and generate content across multiple formats at once, including text, images, audio, video, documents, charts, and structured data.

For example, a Generative AI 2.0 system can read a business report, interpret its graphs, understand written feedback, and summarize the insights in natural language. It can even generate presentations or recommendations based on that information. This unlocks an entirely new level of usefulness because the real world is not made of text alone. Modern work is multimodal, and AI is becoming multimodal too.

Agent-Based AI Systems

Generative AI 2.0 also introduces the rise of AI agents. Instead of answering one question at a time, AI agents can break tasks down into steps, use tools, perform actions, and complete workflows automatically.

For example, an AI agent could research competitors, identify market gaps, draft a strategy, create marketing copy, and build a campaign plan, all as part of one connected process. This represents a shift from chatbot to digital worker: AI is moving closer to execution, not just conversation.

Context Awareness and Memory

Earlier AI systems often forgot everything after a single prompt. They lacked continuity, personalization, and long-term context. Generative AI 2.0 systems are becoming more context-aware. They can remember goals, preferences, project details, and ongoing workflows, which makes AI more consistent, more personalized, and more useful over time. Instead of starting from scratch every time, users can build an ongoing collaboration with AI as part of their work system.

Real Applications of Generative AI 2.0 That Matter

To truly understand the value of Generative AI 2.0, we need to move beyond generic examples and focus on real, practical impact.
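Before turning to specific applications, the agent and memory ideas described above can be made concrete with a minimal Python sketch. It assumes a fixed three-step plan and stub "tools" (`research_competitors`, `identify_gaps`, `draft_strategy`), all hypothetical and returning placeholder data; a real agent would ask a language model to plan the steps and would call real search or data services. A plain dictionary stands in for memory that persists between sessions.

```python
from typing import Callable


# Hypothetical tools: stand-ins for real actions such as web search or a model call.
def research_competitors(ctx: dict) -> str:
    ctx["competitors"] = ["Competitor A", "Competitor B"]  # placeholder data
    return f"found {len(ctx['competitors'])} competitors"


def identify_gaps(ctx: dict) -> str:
    ctx["gaps"] = [f"gap not covered by {c}" for c in ctx["competitors"]]
    return f"identified {len(ctx['gaps'])} gaps"


def draft_strategy(ctx: dict) -> str:
    audience = ctx.get("audience", "general market")  # read from memory if present
    ctx["strategy"] = f"For {audience}: address " + "; ".join(ctx["gaps"])
    return "strategy drafted"


TOOLS: dict[str, Callable[[dict], str]] = {
    "research_competitors": research_competitors,
    "identify_gaps": identify_gaps,
    "draft_strategy": draft_strategy,
}


def plan(goal: str) -> list[str]:
    # Assumption: a real agent would ask a language model to break the goal
    # into steps; a fixed plan keeps this sketch deterministic.
    return ["research_competitors", "identify_gaps", "draft_strategy"]


def run_agent(goal: str, memory: dict) -> list[str]:
    ctx = {"goal": goal, **memory}  # start from remembered context, not from scratch
    log = []
    for step in plan(goal):
        log.append(f"{step}: {TOOLS[step](ctx)}")  # each step reads and extends ctx
    memory["last_strategy"] = ctx["strategy"]  # persist the result for later sessions
    return log


memory = {"audience": "small businesses"}
for line in run_agent("plan a product launch", memory):
    print(line)
print(memory["last_strategy"])
```

The design point is that every step writes into a shared context that later steps read, which is what turns separate answers into one connected workflow, and that the memory dictionary outlives a single run.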
Business Strategy and Market Intelligence

Generative AI 2.0 can scan thousands of documents, competitor reports, customer reviews, and market feedback to extract insights quickly. Instead of spending weeks doing manual research, teams can identify trends, uncover customer pain points, and make faster strategic decisions.

A powerful technique is to use AI to summarize market problems first, then validate those insights through real customer interviews. This combination of AI speed and human confirmation creates smarter strategy.

Product Development and Rapid Prototyping

AI is now accelerating innovation by helping teams generate feature ideas, draft user stories, simulate
