Why LLMs Hallucinate: Understanding AI Hallucinations and How They Happen Internally

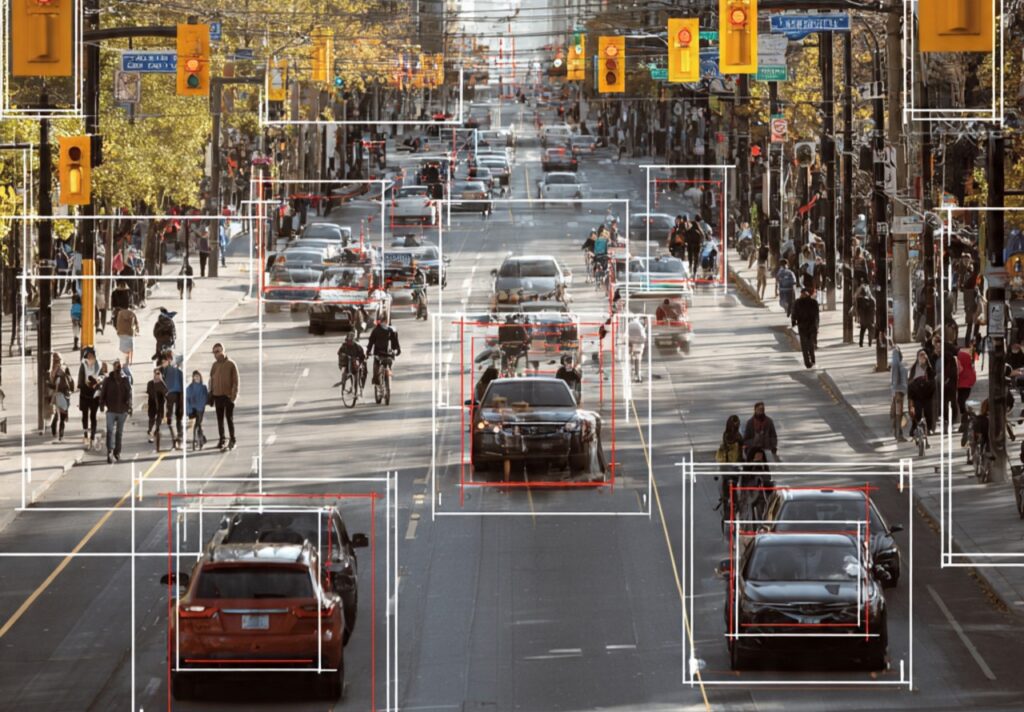

The Critical Problem of LLM Hallucination

Large Language Models (LLMs) like GPT-4, Claude, and Gemini have changed how we interact with artificial intelligence, generating human-like text that assists with writing, coding, research, and decision-making. Yet these powerful systems share a troubling weakness: LLM hallucination, the confident generation of false, fabricated, or nonsensical information presented as fact.

Understanding LLM hallucination isn’t just an academic curiosity; it’s essential for anyone relying on AI systems for important tasks. When a medical professional consults an LLM about treatment options, when a lawyer uses AI for legal research, or when a business makes decisions based on AI-generated analysis, hallucinations can have serious consequences. One study found that even advanced models hallucinate in roughly 3% to 27% of responses, depending on the model and task, which makes this a critical reliability issue.

What is LLM Hallucination? Defining the Problem

LLM hallucination occurs when a language model generates content that appears plausible and is presented confidently but is factually incorrect, nonsensical, or unfaithful to the provided source material. Unlike human hallucinations, which involve sensory perception, LLM hallucination refers specifically to the generation of false information in text form.

Types of LLM Hallucination

Factual Hallucination: The model invents facts, statistics, dates, or events that never occurred. Example: claiming a historical event happened in 1987 when it actually occurred in 1995, or citing research papers that don’t exist.

Intrinsic Hallucination: The generated content contradicts information explicitly provided in the prompt or context. If you provide a document stating “Revenue increased 15%” and the model responds “Revenue decreased,” that’s an intrinsic hallucination.

Extrinsic Hallucination: The model adds information not present in the source material, going beyond what can be verified. While sometimes helpful, extrinsic hallucination becomes problematic when it is presented as fact rather than inference.

Fabricated Citations: LLM hallucination frequently manifests as invented references. The model generates realistic-looking citations to papers, books, or sources that don’t exist, often with convincing titles, authors, and publication details.

Confabulation: The model fills knowledge gaps with plausible-sounding but invented details rather than acknowledging uncertainty. This is perhaps the most dangerous form of LLM hallucination, because the output appears coherent and authoritative.

Why LLM Hallucination Matters

The consequences of LLM hallucination extend beyond mere inconvenience:

Medical Context: Hallucinated drug interactions or dosage information could endanger patient safety. Healthcare professionals must verify all AI-generated medical information against authoritative sources.

Legal Applications: Lawyers relying on hallucinated case citations face professional sanctions. Several documented cases involve attorneys submitting briefs containing fabricated legal precedents generated by LLMs.

Financial Decisions: Business leaders making strategic decisions based on hallucinated market data or financial projections risk significant financial losses and reputational damage.

Academic Integrity: Students and researchers citing hallucinated sources undermine scholarly work and face academic consequences when fabricated references are discovered.

Technical Development: Developers implementing hallucinated code snippets or following fabricated technical documentation waste time debugging non-functional solutions and may introduce security vulnerabilities.

The Architecture Behind LLM Hallucination: How Language Models Work

Understanding why LLM hallucination occurs requires grasping how these models function internally.
Large Language Models don’t “know” facts or “understand” truth; they predict likely text continuations based on statistical patterns learned from training data.

Transformer Architecture Fundamentals

Modern LLMs build on the Transformer architecture, introduced in 2017. This neural network design processes text through multiple layers of attention mechanisms, enabling the model to consider relationships between words across long sequences.

Key Components:

Embedding Layer: Converts tokens (words or subword pieces) into high-dimensional numerical vectors that capture semantic relationships; words with similar meanings cluster together in vector space.

Attention Mechanism: Lets the model weigh which previous tokens are most relevant when predicting the next one. The word “bank” ends up with a different contextual representation in “river bank” than in “savings bank,” because attention draws on different neighboring words.

Feed-Forward Networks: Process the attended information through learned transformations, capturing complex patterns beyond simple word associations.

Output Layer: Produces a probability distribution over the vocabulary: at each position, the model assigns a probability to every token it knows, typically tens of thousands of candidates.

This architecture creates powerful pattern recognition but has no mechanism for verifying truth, setting the stage for LLM hallucination.

Training Process and Knowledge Acquisition

LLMs are trained on massive text corpora: billions or trillions of words from books, websites, research papers, and online discussions. During this process, the model adjusts billions of parameters (GPT-3 has 175 billion; OpenAI has not disclosed GPT-4’s size, which is rumored to exceed a trillion) to minimize prediction errors.

What Actually Happens: The model learns statistical associations. “Paris” frequently appears near “France” and “capital,” so it learns these correlations. It discovers that sentences about “photosynthesis” often mention “chlorophyll” and “plants.” These patterns enable impressive text generation but don’t constitute genuine understanding or a store of verified facts.
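The attention step described above can be sketched in a few lines of plain Python. This is a minimal, hypothetical illustration rather than how any production model works: it assumes hand-picked two-dimensional embeddings (one axis loosely standing for “water/nature,” the other for “finance”), a single attention head, and raw embeddings used directly as queries, keys, and values, whereas real Transformers learn separate projection matrices, use many heads, and work in hundreds or thousands of dimensions. It shows scaled dot-product attention giving “bank” a different contextual vector depending on its neighbor.

```python
import math

# Hypothetical toy embeddings: axis 0 ~ "water/nature", axis 1 ~ "finance".
EMBED = {
    "the": [0.1, 0.1],
    "river": [2.0, 0.0],
    "savings": [0.0, 2.0],
    "bank": [0.5, 0.5],  # ambiguous on its own
}

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention: softmax(q . k / sqrt(d)) over all keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)

def contextual_vector(tokens, position):
    """Attention-weighted mix of value vectors for tokens[position].

    Simplification (assumption): queries, keys, and values are the raw
    embeddings, with no learned projection matrices and a single head.
    """
    vectors = [EMBED[t] for t in tokens]
    weights = attention_weights(vectors[position], vectors)
    dim = len(vectors[0])
    return [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]

# "bank" is the last token, so attending to itself and earlier tokens
# matches what a left-to-right (causal) model would see.
river_bank = contextual_vector(["the", "river", "bank"], position=2)
savings_bank = contextual_vector(["the", "savings", "bank"], position=2)
print("bank in 'the river bank':  ", [round(x, 2) for x in river_bank])
print("bank in 'the savings bank':", [round(x, 2) for x in savings_bank])
```

With these toy numbers, “bank” comes out as roughly [1.08, 0.18] next to “river” and [0.18, 1.08] next to “savings”: the same input token, pulled toward different meanings by its context. Nothing in this computation consults facts; it only mixes vectors according to learned (here, hand-picked) similarities.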

Critical Limitation: The model has no database of facts, no verification mechanism, and no connection to reality beyond its training text. When generating responses, it samples from learned probability distributions without checking factual accuracy. This fundamental design enables LLM hallucination: the model generates what’s statistically likely, not what’s factually true.

Next-Token Prediction: The Core Mechanism

At every generation step, the LLM performs next-token prediction: given the previous text, it computes a probability distribution over the next token (a word or subword piece) and selects one, either the single most likely token or a sample from the distribution. This seemingly simple mechanism, repeated thousands of times, produces coherent text but also enables hallucination.

Generation Process: The model splits the prompt into tokens, computes a distribution over the next token, selects one, appends it to the sequence, and repeats until it produces a stop token or reaches a length limit.

Where LLM Hallucination Emerges: The model never asks “Is this true?” In effect, it only asks “Is this statistically plausible given my training data?” When it faces a knowledge gap, rather than admitting uncertainty, it keeps generating plausible-sounding text by following learned patterns. The result is a confident hallucination.

Internal Mechanisms: Why LLM Hallucination Happens

Multiple technical factors converge to create LLM hallucination. Understanding these mechanisms reveals why eliminating hallucinations entirely remains an unsolved challenge in AI research.

Mechanism 1: Training Data Limitations and Biases

Knowledge Cutoff: An LLM’s knowledge is frozen when training ends. GPT-4 Turbo’s training data, for example, extends only to April 2023, so the model cannot know about later events and may hallucinate when asked about recent developments, producing plausible-sounding but invented information about post-cutoff events.

Data Quality Issues: Training corpora contain misinformation, contradictions, and errors. The model learns from accurate and inaccurate sources alike without distinguishing between them. When conflicting “facts” exist in the training data, the model may blend them, creating hallucinations.
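The generation loop and the “statistically plausible, not true” point can be made concrete with a deliberately tiny stand-in. The sketch below is a hypothetical bigram model that conditions only on the previous word; it is not a Transformer, and the corpus, function names, and decoding options are illustrative assumptions. Because “is” is followed by “paris” more often than by any other word in its training text, greedy decoding confidently completes a question about a place the model has never seen.

```python
import random
from collections import Counter, defaultdict

# Hypothetical toy corpus standing in for training data.
CORPUS = [
    "the capital of france is paris",
    "everyone knows the capital of france is paris",
    "the capital of italy is rome",
    "the capital of spain is madrid",
]

def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_token_distribution(counts, prev):
    """Probabilities of each word following `prev` (empty if never seen)."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {word: c / total for word, c in followers.items()}

def generate(counts, prompt, max_new_tokens=5, rng=None):
    """Extend the prompt one token at a time.

    rng=None means greedy decoding (pick the most probable word);
    otherwise sample from the distribution. Nothing checks truth.
    """
    words = prompt.split()
    for _ in range(max_new_tokens):
        dist = next_token_distribution(counts, words[-1])
        if not dist:  # no continuation seen in training: stop
            break
        if rng is None:
            nxt = max(dist, key=dist.get)
        else:
            nxt = rng.choices(list(dist), weights=list(dist.values()))[0]
        words.append(nxt)
    return " ".join(words)

model = train_bigrams(CORPUS)
print(next_token_distribution(model, "is"))  # {'paris': 0.5, 'rome': 0.25, 'madrid': 0.25}
print(generate(model, "the capital of atlantis is"))  # the capital of atlantis is paris
print(generate(model, "the capital of atlantis is", rng=random.Random(0)))
```

Greedy decoding produces a fluent, confident, fabricated answer; switching to sampling may name Rome or Madrid instead, but in neither case does the loop check the claim against reality. A real LLM conditions on the whole context and has learned far richer patterns, yet its autoregressive loop has the same shape: compute a distribution, pick a token, append, repeat.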
