Large Language Models and Their Expanding Role

Large Language Models, often shortened to LLMs, are not just another step in artificial intelligence. They represent a structural change in how machines process, generate, and interact with human language. Unlike earlier AI systems, which followed hand-written rules or were trained on narrow, task-specific data, LLMs rely on probability, context, and scale. Because of this, they are now influencing software development, customer support, research, education, marketing, and decision-making itself.

However, to use them effectively, it is necessary to understand how they work internally, why they appear intelligent, and where their limits actually are. Without that understanding, organizations risk misusing them, overtrusting them, or deploying them in ways that create more problems than value.

What Exactly Is a Large Language Model?

A Large Language Model is a machine learning system trained on massive amounts of text data to predict and generate language. At its core, it does not “know” facts or meanings in the human sense. Instead, it learns statistical relationships between words, phrases, sentences, and larger patterns of language.

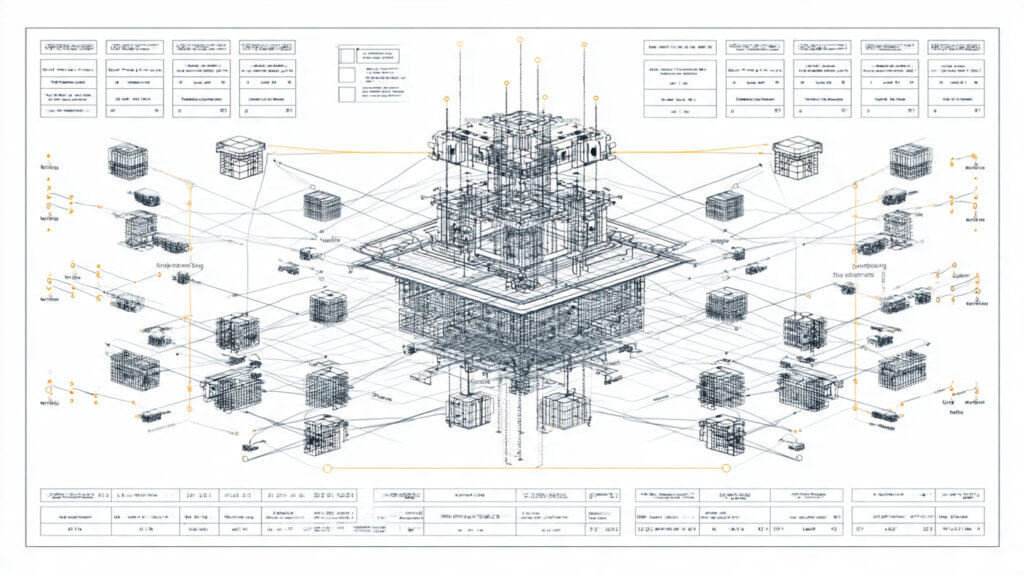

What makes an LLM “large” is not just file size. It refers to three things working together:

- The number of parameters, often in the billions and sometimes over a trillion

- The scale of training data, covering diverse domains and writing styles

- The computational infrastructure used during training

Because of this scale, LLMs can generalize across tasks. A single model can write code, explain medical concepts, summarize legal documents, and hold conversations without being explicitly programmed for each task.
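To make the parameter counts above more concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone occupy. The model sizes are hypothetical round numbers, and the function name `weight_memory_gb` is invented for illustration. The only facts used are that a 16-bit number takes 2 bytes and a 32-bit number takes 4.

```python
def weight_memory_gb(num_parameters: int, bytes_per_parameter: int = 2) -> float:
    """Gigabytes needed to hold the weights alone (no activations, no optimizer state)."""
    return num_parameters * bytes_per_parameter / 1e9


# Hypothetical model sizes, chosen only to show how memory scales with parameters.
for name, params in [("1B", 1_000_000_000), ("7B", 7_000_000_000), ("70B", 70_000_000_000)]:
    print(
        f"{name}: {weight_memory_gb(params):.0f} GB at 16-bit, "
        f"{weight_memory_gb(params, 4):.0f} GB at 32-bit"
    )
```

Training needs considerably more memory than this, which is one reason the computational infrastructure is part of what makes a model "large".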

How Large Language Models Actually Work (Step by Step)

To understand why LLMs are powerful, you must understand their internal workflow.

Tokenization: Breaking Language Into Units

Before training even begins, text is converted into tokens. Tokens are not always words. Sometimes they are word fragments, punctuation marks, or symbols. Because an unfamiliar word can be split into fragments the model already knows, this approach handles rare words and multiple languages with a fixed-size vocabulary.

Each token is mapped to a numerical representation. From that point onward, the model operates entirely in numbers, not words.
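The idea can be sketched with a toy tokenizer. This is a minimal illustration, not how any particular production tokenizer works: the vocabulary below is hypothetical and hand-picked, and the greedy longest-match rule is a simple stand-in for the learned subword schemes real systems use.

```python
# Hypothetical toy vocabulary; real tokenizers learn tens of thousands of entries.
VOCAB = ["un", "believ", "able", "token", "ization", "s", " ", "!", "<unk>"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}


def tokenize(text: str) -> list[str]:
    """Greedy longest-match split of text into vocabulary pieces."""
    tokens = []
    pos = 0
    while pos < len(text):
        # Try the longest candidate substring first, then shrink it.
        for end in range(len(text), pos, -1):
            piece = text[pos:end]
            if piece in TOKEN_TO_ID:
                tokens.append(piece)
                pos = end
                break
        else:
            # Nothing in the vocabulary matches: emit the unknown token for one character.
            tokens.append("<unk>")
            pos += 1
    return tokens


def encode(text: str) -> list[int]:
    """Map text to the integer ids the model actually consumes."""
    return [TOKEN_TO_ID[tok] for tok in tokenize(text)]


print(tokenize("unbelievable tokenizations!"))
# ['un', 'believ', 'able', ' ', 'token', 'ization', 's', '!']
print(encode("unbelievable tokenizations!"))
# [0, 1, 2, 6, 3, 4, 5, 7]
```

Neither "unbelievable" nor "tokenizations" is in the vocabulary, yet both are represented by combining known fragments.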

The Transformer Architecture

Modern LLMs are built using a structure called a transformer. This architecture is responsible for their ability to handle long context and complex relationships.

The key component of transformers is self-attention. Self-attention lets the model score how relevant each token is to the other tokens in a sequence. This is why LLMs can track context, references, and dependencies across long passages of text.

For example, when processing a paragraph, the model does not step through it one token at a time the way older recurrent networks did. It computes attention weights for all positions in parallel, based on relevance. In the decoder-style models behind most chat LLMs, each token attends only to itself and earlier tokens, and new text is still generated one token at a time, left to right.
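A minimal sketch of the attention computation, in plain Python, may help. It assumes a decoder-style model, where each token attends only to itself and earlier tokens. In a real transformer the query, key, and value vectors come from learned projections of the token embeddings; here the same toy vectors are reused for all three, which is purely an illustrative shortcut.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where token i sees only tokens 0..i."""
    dim = len(queries[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Score the current token against itself and every earlier token.
        scores = [
            sum(qc * kc for qc, kc in zip(q, keys[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # The output is a weighted average of the visible value vectors.
        out = [
            sum(w * values[j][c] for j, w in enumerate(weights))
            for c in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy 2-dimensional token vectors (hypothetical values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(x, x, x)
for position, row in enumerate(weights):
    print(position, [round(w, 3) for w in row])
```

Each printed row is one token's attention weights over the tokens it can see. The rows sum to 1, and the third token puts the most weight on itself because its vector has the largest dot product with its own key.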

Training Through Prediction

LLMs are trained using a simple but powerful objective:

predict the next token given the previous tokens.

They do this across billions or even trillions of tokens during training. After each batch of predictions, the model's internal parameters are adjusted slightly so that the correct next tokens become more probable. Over time, this process shapes the model into something that captures grammar, logic patterns, stylistic conventions, and domain knowledge.
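The adjustment step can be sketched for a single prediction. This is a deliberately tiny illustration under stated assumptions: the four-word vocabulary is hypothetical, and the raw scores (logits) are updated directly, whereas a real model computes them from its parameters and updates those instead. The loss is cross-entropy and the update is plain gradient descent.

```python
import math


def softmax(logits):
    """Convert raw scores into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target_id):
    """Loss is low when the true next token receives high probability."""
    return -math.log(softmax(logits)[target_id])


def training_step(logits, target_id, learning_rate=0.5):
    """One gradient-descent update applied to the logits themselves."""
    probs = softmax(logits)
    # Gradient of cross-entropy w.r.t. the logits: predicted probs minus one-hot target.
    grads = [p - (1.0 if i == target_id else 0.0) for i, p in enumerate(probs)]
    return [x - learning_rate * g for x, g in zip(logits, grads)]


vocab = ["the", "cat", "sat", "mat"]  # hypothetical toy vocabulary
logits = [0.0, 0.0, 0.0, 0.0]         # untrained: every token equally likely
target = vocab.index("sat")           # true next token after "the cat"

for step in range(5):
    print(f"step {step}: loss = {cross_entropy(logits, target):.3f}")
    logits = training_step(logits, target)
```

The loss starts at ln 4 (about 1.386, a uniform guess over four tokens) and falls with each step as probability shifts toward the correct token.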

Importantly, the model is not primarily memorizing text, although it can reproduce passages that recur often in its training data. It is learning probability distributions over language.
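To show what "a probability distribution over the next token" means concretely, here is a count-based bigram model. It is a far simpler stand-in than a transformer, since it looks at only one previous token, and the corpus is a made-up example sentence.

```python
from collections import Counter, defaultdict

# Hypothetical miniature corpus, already split into tokens.
corpus = "the cat sat on the mat . the cat ate .".split()

# Count how often each token follows each other token.
counts = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    counts[prev][nxt] += 1


def next_token_distribution(prev: str) -> dict:
    """Estimated probability of each next token, given the previous one."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {tok: n / total for tok, n in followers.items()}


print(next_token_distribution("the"))
print(next_token_distribution("cat"))
```

After "the", the model assigns two thirds of the probability to "cat" and one third to "mat". It stores no sentences, only the relative likelihood of continuations. An LLM does the same kind of thing with a much longer context and a learned, rather than counted, distribution.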

Why LLMs Appear Intelligent

LLMs feel intelligent because human language encodes human reasoning. When a model learns language patterns at scale, it indirectly learns patterns of reasoning, explanation, and problem-solving.

However, this intelligence is emergent, not intentional. The model does not reason in the human sense. It generates outputs that statistically resemble reasoning because those patterns exist in the data it was trained on.

Why Large Language Models Work So Well

LLMs succeed where earlier approaches struggled, for several reasons.

First, scale matters. Smaller models could only capture shallow patterns. Large models capture abstract relationships, analogies, and multi-step structures.

Second, transformers handle context far better than older architectures. This allows LLMs to maintain coherence over long outputs.

Third, modern training pipelines add further stages after initial training, such as supervised fine-tuning and reinforcement learning from human feedback. These processes shape raw language ability into something usable and safer for real-world interaction.

Finally, language itself is a universal interface. Much of human knowledge is encoded in text. By mastering text, LLMs gain access to a wide spectrum of human activity.

Core Capabilities of Large Language Models

Language Understanding at Scale

LLMs can interpret intent, tone, ambiguity, and context. This allows them to summarize documents, extract insights, and answer questions across domains.

Language Generation With Structure

They can generate structured outputs such as reports, code, outlines, and documentation. This makes them useful not only for creativity but also for operational work.

Few-Shot and Zero-Shot Learning

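LLMs can perform tasks they were not explicitly trained for. In zero-shot use, the model follows an instruction alone. In few-shot use, the prompt also includes a handful of worked examples. In both cases no parameters are updated; the "learning" happens entirely within the prompt. This drastically reduces development time for AI-powered applications.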
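The difference between the two modes comes down to what goes into the prompt, which a small helper can illustrate. The `build_prompt` function and the `Input:` / `Output:` layout are assumptions made for this sketch, not a format required by any particular model or vendor.

```python
def build_prompt(instruction: str, examples: list, query: str) -> str:
    """Assemble a zero-shot (no examples) or few-shot prompt as plain text."""
    parts = [instruction.strip(), ""]
    for text, label in examples:
        # Each worked example shows the model the expected input/output pattern.
        parts += [f"Input: {text}", f"Output: {label}", ""]
    # The final entry is left open for the model to complete.
    parts += [f"Input: {query}", "Output:"]
    return "\n".join(parts)


instruction = "Classify the sentiment as positive or negative."
examples = [
    ("The screen cracked within a week.", "negative"),
    ("Setup took two minutes and it just works.", "positive"),
]

zero_shot = build_prompt(instruction, [], "Great battery life")
few_shot = build_prompt(instruction, examples, "Great battery life")

print(zero_shot)
print("---")
print(few_shot)
```

The resulting string would then be sent to whichever model is in use. Switching tasks means changing the instruction and examples, not retraining anything.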

Where Large Language Models Are Being Used Today

Software Development

LLMs assist developers by generating code, explaining errors, refactoring legacy systems, and writing documentation. This reduces cognitive load and speeds up development cycles.

Customer Support and Operations

Unlike static, scripted chatbots, LLM-based assistants can handle nuanced customer queries, escalate complex issues, and draw on internal knowledge bases.

Research and Knowledge Work

Researchers use LLMs to scan literature, summarize findings, generate hypotheses, and explore alternative interpretations.

Marketing and Content Systems

LLMs help create drafts, personalize messaging, analyze audience sentiment, and scale content production without sacrificing consistency.

Limitations and Risks of Large Language Models

Despite their power, LLMs have critical limitations.

They do not verify facts. They generate plausible text, not guaranteed truth. This leads to hallucinations, especially in specialized or rapidly changing domains.

They reflect biases present in training data. Without safeguards, these biases can surface in outputs.

They lack true understanding. An LLM does not have goals, beliefs, or awareness. Overtrusting outputs without human oversight can cause serious errors.

They are sensitive to prompt design. Poorly framed prompts lead to poor results, even with advanced models.

Why Human Oversight Is Still Essential

LLMs are best understood as cognitive amplifiers, not replacements for humans. They excel at generating options, summarizing complexity, and accelerating workflows. Humans are still required to set goals, evaluate consequences, and make final decisions.

Organizations that succeed with LLMs design systems where humans remain in control, using AI as an assistant rather than an authority.

The Expanding Role of LLMs in the Future

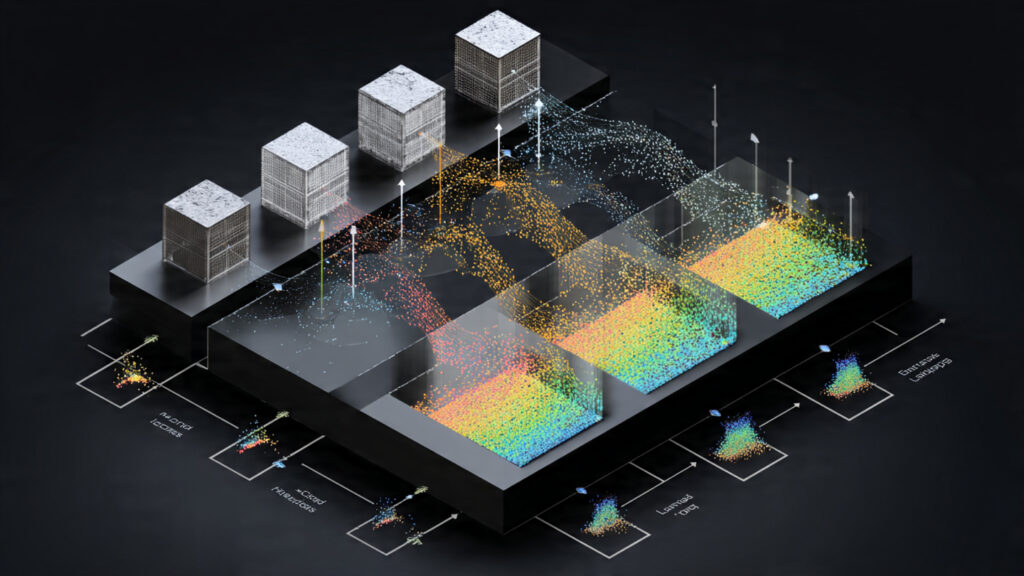

The role of LLMs is expanding beyond standalone tools.

They are being integrated into operating systems, enterprise software, analytics platforms, and decision-support systems. Over time, LLMs are likely to act as interfaces between humans and complex digital infrastructure.

Instead of learning dozens of tools, users may increasingly communicate intent through language, with systems executing actions accordingly.

Practical Strategies for Using Large Language Models Effectively

- Use LLMs for drafting and ideation, not final authority

- Combine them with verified data sources

- Implement human-in-the-loop workflows

- Train teams on prompt design and evaluation

- Treat outputs as hypotheses, not conclusions
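Several of these strategies can be combined in a simple triage step, sketched below. Everything here is hypothetical: the `Draft` type, the `VERIFIED_SOURCES` store, and the status names are invented for illustration, and the substring check is a crude stand-in for real fact verification. The point is only the shape of the workflow: a model's claim is checked against a verified record, and a human sees it either way.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical store of verified records, keyed by id.
VERIFIED_SOURCES = {
    "kb-101": "Refunds are accepted within 30 days of purchase.",
}


@dataclass
class Draft:
    claim: str                       # text the model produced
    source_id: Optional[str] = None  # verified record the claim cites, if any


def triage(draft: Draft) -> str:
    """Decide how much human scrutiny a model-generated claim needs."""
    source_text = VERIFIED_SOURCES.get(draft.source_id) if draft.source_id else None
    if source_text is None:
        # No verified source backs the claim: a person must check it.
        return "escalate"
    if draft.claim.strip().lower() not in source_text.lower():
        # The cited source does not contain the claim as written.
        return "escalate"
    # Supported by a verified source; still passes through routine human review.
    return "routine_review"


print(triage(Draft("Refunds are accepted within 30 days", "kb-101")))  # routine_review
print(triage(Draft("Refunds are accepted within 90 days", "kb-101")))  # escalate
print(triage(Draft("Shipping is always free")))                        # escalate
```

Neither outcome publishes the draft automatically. The model's output is treated as a hypothesis, and the verified source and the human reviewer decide what happens next.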

Final Thoughts

Large Language Models are not magic, and they are not dangerous by default. They are powerful tools shaped by how they are designed, trained, and deployed. Organizations and individuals who understand how they work gain a real advantage. Those who treat them as black boxes risk confusion, misuse, and disappointment.

The expanding role of LLMs is not about replacing human intelligence. It is about reshaping how intelligence is supported, scaled, and applied in a world overwhelmed by information.