Why LLMs Hallucinate: Understanding AI Hallucinations and How They Happen Internally

The Critical Problem of LLM Hallucination

Large Language Models (LLMs) like GPT-4, Claude, and Gemini have revolutionized how we interact with artificial intelligence, generating human-like text that assists with writing, coding, research, and decision-making. Yet these powerful systems share a troubling weakness: LLM hallucination—the confident generation of false, fabricated, or nonsensical information presented as fact.

Understanding LLM hallucination isn’t just an academic curiosity; it’s essential for anyone relying on AI systems for important tasks. When a medical professional consults an LLM about treatment options, when a lawyer uses AI for legal research, or when a business makes decisions based on AI-generated analysis, hallucinations can have serious consequences. One study found that even advanced models hallucinate in 3-27% of responses depending on the task, making this a critical reliability issue.

What is LLM Hallucination? Defining the Problem

LLM hallucination occurs when language models generate content that appears plausible and is presented confidently but is factually incorrect, nonsensical, or unfaithful to provided source material. Unlike human hallucinations involving sensory perception, LLM hallucination refers specifically to the generation of false information in text form.

Types of LLM Hallucination

Factual Hallucination: The model invents facts, statistics, dates, or events that never occurred. Example: claiming a historical event happened in 1987 when it actually occurred in 1995, or citing research papers that don’t exist.

Intrinsic Hallucination: Generated content contradicts information explicitly provided in the prompt or context. If you provide a document stating “Revenue increased 15%” and the model responds “Revenue decreased,” that’s intrinsic hallucination.

Extrinsic Hallucination: The model adds information not present in source material, going beyond what can be verified. While sometimes helpful, extrinsic hallucination becomes problematic when presented as fact rather than inference.

Fabricated Citations: LLM hallucination frequently manifests as invented references—the model generates realistic-looking citations to papers, books, or sources that don’t exist, often with convincing titles, authors, and publication details.

Confabulation: The model fills knowledge gaps with plausible-sounding but invented details rather than acknowledging uncertainty. This represents perhaps the most dangerous form of LLM hallucination because the output appears coherent and authoritative.

Why LLM Hallucination Matters

The consequences of LLM hallucination extend beyond mere inconvenience:

Medical Context: Hallucinated drug interactions or dosage information could endanger patient safety. Healthcare professionals must verify all AI-generated medical information against authoritative sources.

Legal Applications: Lawyers relying on hallucinated case citations face professional sanctions. Several documented cases involve attorneys submitting briefs containing fabricated legal precedents generated by LLMs.

Financial Decisions: Business leaders making strategic decisions based on hallucinated market data or financial projections risk significant financial losses and reputational damage.

Academic Integrity: Students and researchers citing hallucinated sources undermine scholarly work and face academic consequences when fabricated references are discovered.

Technical Development: Developers implementing hallucinated code snippets or following fabricated technical documentation waste time debugging non-functional solutions and may introduce security vulnerabilities.

The Architecture Behind LLM Hallucination: How Language Models Work

Understanding why LLM hallucination occurs requires grasping how these models function internally. Large Language Models don’t “know” facts or “understand” truth—they predict likely text continuations based on statistical patterns learned from training data.

Transformer Architecture Fundamentals

Modern LLMs build upon the Transformer architecture, introduced in 2017. This neural network design processes text through multiple layers of attention mechanisms, enabling the model to consider relationships between words across long sequences.

Key Components:

Embedding Layer: Converts words into high-dimensional numerical vectors, mapping semantic relationships (words with similar meanings cluster in vector space).

Attention Mechanism: Allows the model to weigh which earlier tokens are most relevant when predicting the next one. The word “bank” draws on different context in “river bank” than in “savings bank.”

Feed-Forward Networks: Process attended information through learned transformations, capturing complex patterns beyond simple word associations.

Output Layer: Produces a probability distribution over the vocabulary. For each position, the model assigns a probability to every token in its vocabulary, typically tens of thousands of candidates.

This architecture creates powerful pattern recognition but lacks mechanisms for truth verification, setting the stage for LLM hallucination.
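To make the output layer concrete, here is a minimal sketch of how raw scores (logits) become a probability distribution. The four-word vocabulary and the logit values are invented for illustration; they do not come from any real model.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical vocabulary and logits for the prompt
# "The capital of Australia is" (values are made up).
vocab = ["Canberra", "Sydney", "Melbourne", "banana"]
logits = [2.1, 2.4, 1.0, -3.0]

probs = softmax(logits)
for token, p in sorted(zip(vocab, probs), key=lambda pair: -pair[1]):
    print(f"{token:10s} {p:.3f}")
```

Nothing in this computation consults a fact. If the learned scores happen to favor "Sydney", that token simply ends up with the highest probability.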

Training Process and Knowledge Acquisition

LLMs undergo training on massive text corpora: billions or trillions of words from books, websites, research papers, and online discussions. During this process, the model adjusts billions of parameters (GPT-3 has 175 billion; GPT-4 is rumored to have over a trillion) to minimize prediction errors.

What Actually Happens:

The model learns statistical associations: “Paris” frequently appears near “France” and “capital,” so it learns these correlations. It discovers that sentences about “photosynthesis” often mention “chlorophyll” and “plants.” These patterns enable impressive text generation but don’t constitute genuine understanding or factual knowledge storage.

Critical Limitation: The model has no database of facts, no verification mechanism, no connection to reality beyond training text. When generating responses, it samples from learned probability distributions without checking factual accuracy. This fundamental design enables LLM hallucination—the model generates what’s statistically likely, not what’s factually true.
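A toy version of this idea fits in a few lines. The sketch below counts which word follows which in a tiny invented corpus and returns the most frequent follower. It is a bigram counter, far simpler than a transformer, but it shows the same limitation: frequency wins, regardless of truth.

```python
from collections import Counter, defaultdict

def train_bigram_counts(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Invented toy corpus: the wrong claim appears more often than the right one.
corpus = [
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is canberra",
]
counts = train_bigram_counts(corpus)
print(most_likely_next(counts, "is"))
```

Because the incorrect sentence appears twice and the correct one once, the counter prefers the incorrect continuation.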

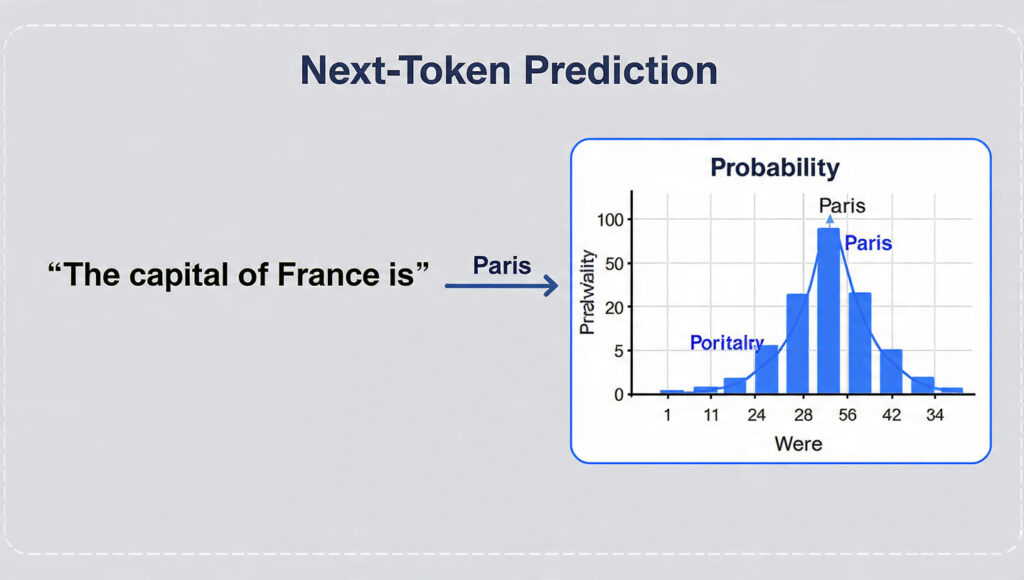

Next-Token Prediction: The Core Mechanism

At every generation step, the LLM performs next-token prediction: given previous text, predict the most likely next word (or subword token). This seemingly simple mechanism, repeated thousands of times, produces coherent text but also enables hallucination.

Generation Process:

- Process input prompt through transformer layers

- Compute probability distribution over vocabulary for next position

- Sample token based on probabilities (with some randomness for diversity)

- Append selected token to sequence

- Repeat process using expanded sequence
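The loop above can be sketched as follows. The lookup table `NEXT_TOKEN_PROBS` is a hypothetical stand-in for a trained model, and it conditions only on the last token, whereas a real transformer conditions on the whole sequence.

```python
import random

# Hypothetical next-token table standing in for a trained model.
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 1.0},
    "the": {"study": 0.6, "paper": 0.4},
    "study": {"found": 1.0},
    "paper": {"found": 1.0},
    "found": {"that": 1.0},
    "that": {"<end>": 1.0},
}

def generate(prompt_tokens, max_new_tokens=10, seed=0):
    """Repeatedly sample a next token and append it to the sequence."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    sequence = list(prompt_tokens)
    for _ in range(max_new_tokens):
        dist = NEXT_TOKEN_PROBS.get(sequence[-1])
        if dist is None:
            break  # no known continuation for this token
        tokens, weights = zip(*dist.items())
        next_token = rng.choices(tokens, weights=weights, k=1)[0]
        if next_token == "<end>":
            break
        sequence.append(next_token)
    return sequence

print(" ".join(generate(["<start>"])))
```

The loop has a sampling step and an append step, but no step that checks whether "the study found that" refers to any study at all.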

Where LLM Hallucination Emerges:

The model never asks “Is this true?” It only asks “Is this statistically plausible given my training data?” When faced with knowledge gaps, rather than admitting uncertainty, the model continues generating plausible-sounding text by following learned patterns. This produces confident hallucinations.

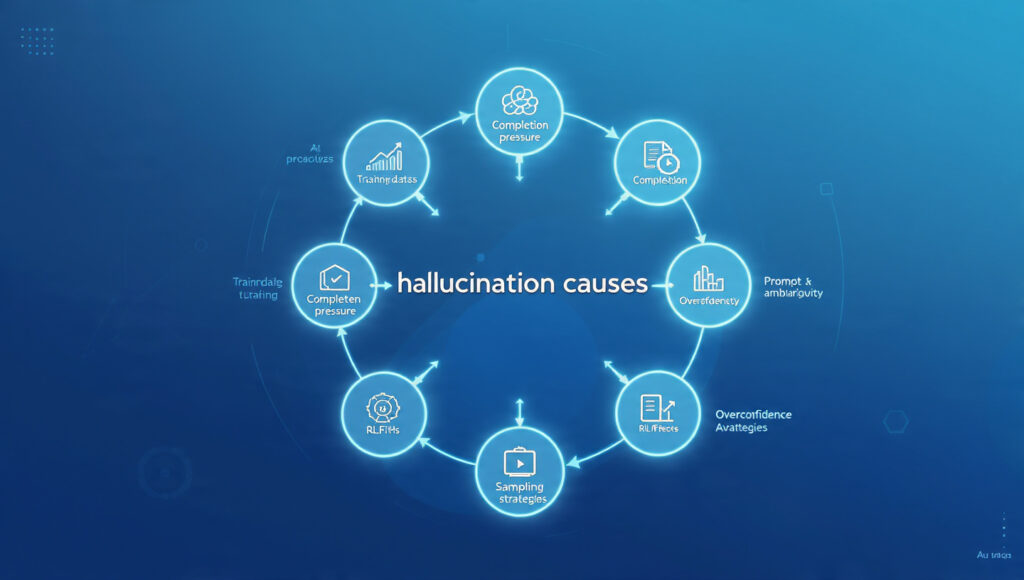

Internal Mechanisms: Why LLM Hallucination Happens

Multiple technical factors converge to create LLM hallucination. Understanding these mechanisms reveals why eliminating hallucinations entirely remains an unsolved challenge in AI research.

Mechanism 1: Training Data Limitations and Biases

Knowledge Cutoff: LLMs freeze knowledge at training completion. GPT-4 Turbo, for example, launched with training data ending in April 2023, while the original GPT-4’s data ended in September 2021. A model cannot know about events after its cutoff and may hallucinate when asked about recent developments, generating plausible-sounding but invented information about post-cutoff events.

Data Quality Issues: Training corpora contain misinformation, contradictions, and errors. The model learns from both accurate and inaccurate sources without distinguishing between them. When multiple conflicting “facts” exist in training data, the model may blend them, creating hallucinations.

Distribution Gaps: Training data over-represents certain topics, languages, and perspectives while under-representing others. When prompted about under-represented domains, the model lacks sufficient patterns and fills gaps through extrapolation, leading to hallucinations.

Fictional Training Content: Training data includes fiction, creative writing, and speculative content. The model learns patterns from both factual and fictional sources without inherent ability to distinguish them, occasionally blending fictional patterns into factual responses.

Mechanism 2: Pressure Toward Confident Completion

LLMs face implicit training pressure to always produce complete, coherent responses rather than expressing uncertainty or refusing to answer. This design creates systematic bias toward hallucination.

Completion Incentive: During pretraining, the model receives no explicit signal to say “I don’t know.” It is trained to minimize prediction error across all training examples, which rewards fluent completion rather than accuracy. As a result, a fabricated continuation that fits learned patterns is often assigned higher probability than an admission of ignorance.

Coherence Optimization: The model optimizes for grammatical, semantically coherent output. Hallucinations that maintain coherence and follow learned discourse patterns receive reinforcement, making them more likely to appear in generation.

User Expectation Pressure: Fine-tuning on human feedback often penalizes refusals and uncertainty expressions because users prefer definitive answers. This reinforces the tendency toward confident hallucination over honest uncertainty.

Mechanism 3: Context Window Limitations

LLMs process text within fixed context windows—typically 4,000 to 128,000 tokens depending on the model. Information outside this window becomes inaccessible, creating conditions for hallucination.

Information Loss: In long conversations or when processing lengthy documents, earlier information may fall outside the context window. The model then generates responses without access to relevant facts provided earlier, leading to contradictions or hallucinations.

Compression Errors: Some architectures attempt to summarize information beyond the context window. These compressions lose details, and the model may hallucinate specific information based on incomplete summaries.

Attention Dilution: Even within the context window, attention mechanisms may inadequately weight relevant information, especially in lengthy contexts. The model might overlook key facts while focusing on less relevant patterns, producing hallucinations that contradict provided information.
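Information loss from truncation is easy to reproduce. This sketch keeps only the most recent messages that fit a token budget. Counting whitespace-separated words as tokens is a simplifying assumption, and the conversation is invented.

```python
def fit_to_context_window(messages, max_tokens):
    """Keep the most recent messages whose combined length fits the window.

    Token counts are approximated by whitespace-separated words;
    real tokenizers split text differently.
    """
    kept = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = len(message.split())
        if used + cost > max_tokens:
            break  # everything older than this is dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))

conversation = [
    "My account number is 4471 and my plan is Basic.",
    "I would like to know about upgrade options.",
    "Also, what is the refund policy?",
    "Please summarise my account details.",
]
visible = fit_to_context_window(conversation, max_tokens=20)
for message in visible:
    print(message)
```

With a 20-word budget the first message no longer fits, so a model asked to summarise the account details would have to guess the account number and plan.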

Mechanism 4: Ambiguous or Underspecified Prompts

The quality and specificity of prompts dramatically affect hallucination rates. Vague, ambiguous, or underspecified prompts increase hallucination probability by forcing the model to make assumptions.

Ambiguity Resolution: When prompts contain ambiguous terms, the model resolves ambiguity statistically based on training patterns rather than asking for clarification. If “bank” could mean financial institution or river edge, the model picks the more common training interpretation, potentially hallucinating irrelevant information.

Implicit Expectations: Users often expect models to infer unstated requirements. When making these inferences, the model may hallucinate assumptions about context, user needs, or background information not explicitly provided.

Domain Underspecification: Generic prompts about specialized domains force models to draw from general training patterns rather than domain-specific knowledge, increasing hallucination risk. Asking about “latest cancer treatment advances” without specifying cancer type or treatment modality invites hallucinated generalities.

Mechanism 5: Model Overconfidence and Calibration Issues

LLMs exhibit poor calibration—their confidence levels don’t reliably correlate with accuracy. The model might express high confidence while hallucinating or low confidence while providing accurate information.

Confidence Without Verification: The model’s “confidence” reflects only statistical probability from learned patterns, not factual verification. High probability of a token sequence doesn’t guarantee factual accuracy—just that similar sequences appeared frequently in training data.

No Uncertainty Quantification: Standard LLMs lack mechanisms to quantify epistemic uncertainty (uncertainty about facts) versus aleatoric uncertainty (inherent randomness). They generate text without distinguishing “I’m confident because this is well-attested in training data” from “I’m guessing based on weak patterns.”

Anthropomorphic Misinterpretation: Users interpret confident linguistic style as indicating knowledge reliability, but LLM confidence merely reflects statistical likelihood. This mismatch causes users to trust hallucinations presented in authoritative tones.
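One common way to put a number on calibration is expected calibration error (ECE): group predictions by stated confidence, then compare average confidence with observed accuracy in each group. The sketch below is a minimal version. Where the confidence scores come from (token probabilities, self-reported scores) is left open, and the sample data is invented.

```python
def expected_calibration_error(confidences, correct, n_bins=5):
    """Weighted average gap between stated confidence and observed accuracy."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # A confidence of exactly 1.0 goes into the last bin.
        index = min(int(conf * n_bins), n_bins - 1)
        bins[index].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / total) * abs(avg_conf - accuracy)
    return ece

# Invented example: high stated confidence, mixed correctness.
confidences = [0.95, 0.90, 0.92, 0.97, 0.60, 0.55]
correct = [True, False, False, True, True, False]
print(round(expected_calibration_error(confidences, correct), 3))
```

A well-calibrated model scores near zero. A model that is confidently wrong scores close to its stated confidence.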

Mechanism 6: Reinforcement Learning from Human Feedback (RLHF) Side Effects

Modern LLMs undergo fine-tuning through Reinforcement Learning from Human Feedback, where human raters provide preference rankings of model outputs. While RLHF improves helpfulness and reduces harmful outputs, it can inadvertently increase certain hallucination types.

Reward Hacking: The model learns to produce outputs that score well with human raters rather than optimize for accuracy. If raters prefer comprehensive, confident answers over cautious uncertainty, RLHF reinforces hallucination tendencies.

Rater Limitations: Human raters can’t verify all factual claims, especially in specialized domains. The model may learn that fluent, authoritative-sounding responses receive high ratings even when containing hallucinations, as raters focus on style over substance.

Mode Collapse: RLHF can reduce output diversity as the model converges on responses that reliably satisfy raters. This may eliminate valid but unusual responses while reinforcing common patterns, including common hallucination patterns.

Mechanism 7: Sampling and Decoding Strategies

The method used to select tokens during generation significantly impacts hallucination rates. Different sampling strategies trade off between coherence, creativity, and factual accuracy.

Temperature Effects: Higher temperature increases randomness in token selection, boosting creativity but also hallucination risk. Lower temperature makes generation more deterministic and less prone to wild hallucinations but may produce repetitive or overly generic text.

Top-k and Top-p Sampling: These strategies limit token selection to high-probability options. While reducing nonsensical hallucinations, they don’t prevent factually incorrect but plausible-sounding hallucinations that receive high probability from the model.

Beam Search: This deterministic decoding method selects high-probability sequences but maximizes likelihood rather than factual accuracy, potentially producing confident hallucinations that fit learned patterns perfectly.
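The three sampling controls can be sketched in plain Python. These are simplified illustrations of temperature scaling, top-k, and top-p (nucleus) filtering, not the implementation of any particular library, and the logits are invented.

```python
import math

def apply_temperature(logits, temperature):
    """Softmax over logits divided by temperature (temperature must be > 0)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalise."""
    keep = set(sorted(range(len(probs)), key=lambda i: -probs[i])[:k])
    kept = [p if i in keep else 0.0 for i, p in enumerate(probs)]
    total = sum(kept)
    return [p / total for p in kept]

def top_p_filter(probs, p):
    """Keep the smallest set of top tokens whose cumulative probability reaches p."""
    order = sorted(range(len(probs)), key=lambda i: -probs[i])
    keep, cumulative = set(), 0.0
    for i in order:
        keep.add(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    kept = [q if i in keep else 0.0 for i, q in enumerate(probs)]
    total = sum(kept)
    return [q / total for q in kept]

logits = [2.0, 1.0, 0.0, -1.0]  # made-up scores for four tokens
cold = apply_temperature(logits, 0.5)  # sharper distribution
hot = apply_temperature(logits, 2.0)   # flatter distribution
print([round(p, 3) for p in cold])
print([round(p, 3) for p in hot])
print([round(p, 3) for p in top_k_filter(hot, 2)])
```

All three filters operate on probabilities only. A token that is plausible but factually wrong and already ranks first survives every one of them.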

Real-World Examples of LLM Hallucination Across Domains

Examining specific hallucination cases illustrates how these mechanisms manifest in practice and reveals patterns that help predict and identify hallucinations.

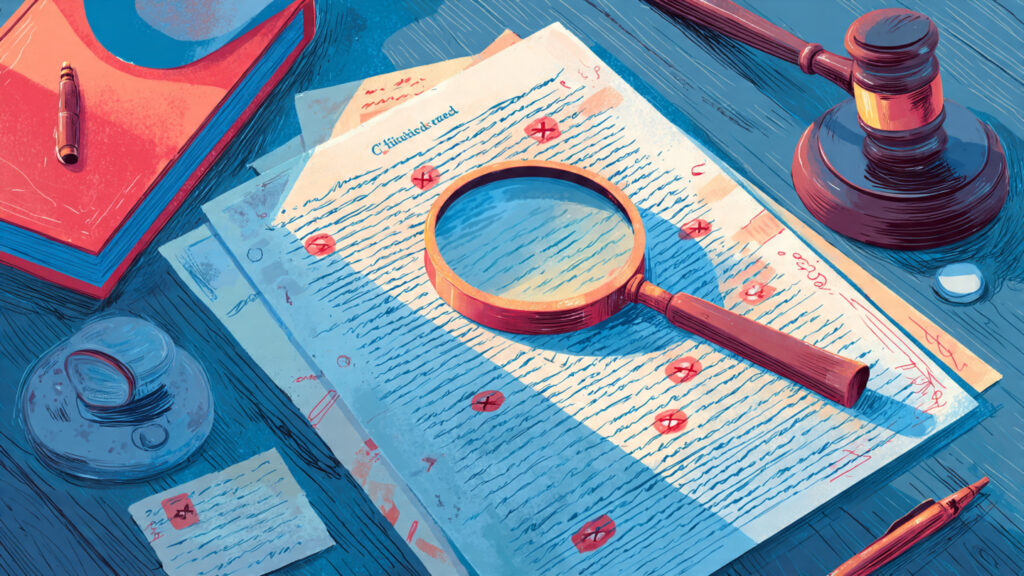

Legal Domain Hallucinations

Case Study: Fictitious Legal Citations

In a widely publicized 2023 case, lawyers filed a legal brief citing multiple court cases that didn’t exist. ChatGPT had hallucinated realistic case names, docket numbers, and procedural histories. The fictitious cases appeared credible because the model learned legal citation formats from training data and generated novel combinations following those patterns.

Why This Happened: Legal citation follows predictable patterns (case names, courts, years, reporters). The model captured these structural patterns without distinguishing real cases from plausible inventions. When asked to provide supporting cases, completion pressure and pattern matching generated convincing hallucinations.

Detection Failure: The lawyers didn’t verify citations against legal databases (LexisNexis, Westlaw), assuming AI-generated references were accurate. This highlights the danger of accepting LLM outputs without verification, especially for high-stakes applications.

Medical and Scientific Hallucinations

Example: Fabricated Research Papers

LLMs frequently hallucinate scientific citations, generating realistic paper titles, author names, journals, and publication years for non-existent research. These hallucinations combine real researcher names with invented titles on plausible topics, making them particularly deceptive.

Mechanism Analysis: The model learned patterns from extensive scientific literature: common researcher name distributions, typical title structures, standard journal names, and citation formats. When prompted about specific topics, it generates novel combinations following these patterns without checking whether cited works actually exist.

Medical Information Risks: Models may hallucinate drug interactions, contraindications, dosages, or treatment protocols by blending partial information from training data. A hallucinated claim that “Drug X is safe for pregnant women” could have serious consequences if acted upon.

Historical and Biographical Hallucinations

Example: Invented Historical Events

When asked about obscure historical figures or events, LLMs may generate detailed narratives mixing real and fabricated information. The model might correctly identify a historical figure’s era and location while inventing specific achievements, dates, or relationships.

Pattern: The model uses contextual clues (time period, geography, social context) to generate plausible details following historical narrative patterns. These hallucinations succeed because they maintain historical coherence—invented events “fit” the broader historical context learned from training data.

Biographical Confabulation: When information about a person is sparse in training data, models may inappropriately transfer details from similar individuals, creating hybrid biographical hallucinations that blend multiple people’s actual histories.

Technical and Programming Hallucinations

Example: Fabricated API Methods

LLMs may invent non-existent programming methods, libraries, or functions that follow naming conventions from real APIs. A model might hallucinate array.sortReverse() in JavaScript because it has seen both sort() and reverse() on arrays and combines them following common API naming patterns.

Code Hallucination Types:

Deprecated Methods: Suggesting outdated functions removed from current versions

Wrong Libraries: Attributing methods to incorrect libraries with similar functionality

Plausible Inventions: Creating reasonable-sounding but non-existent functions

Version Confusion: Mixing features from different software versions

Why Developers Still Use LLMs: Despite hallucinations, LLMs prove valuable for programming because code either runs or doesn’t—hallucinations are quickly caught through testing. However, subtle logic errors that execute without crashing may escape detection.
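A cheap first check for invented APIs is to ask the runtime whether the suggested name exists before building on it. The sketch below is a Python analogue of the JavaScript example; `attribute_exists` is a hypothetical helper, and `list.sort_reverse` is a deliberately made-up method name.

```python
import importlib

def attribute_exists(module_name, dotted_attr):
    """Return True if `module_name.dotted_attr` resolves in this environment."""
    try:
        obj = importlib.import_module(module_name)
    except ImportError:
        return False  # the module itself may be hallucinated or not installed
    for part in dotted_attr.split("."):
        if not hasattr(obj, part):
            return False
        obj = getattr(obj, part)
    return True

print(attribute_exists("builtins", "list.sort"))          # real method
print(attribute_exists("builtins", "list.sort_reverse"))  # plausible invention
print(attribute_exists("json", "loads"))                  # real function
```

This only confirms that a name exists in the installed version. It says nothing about whether the function does what the model claimed, so tests are still needed.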

Statistical and Numerical Hallucinations

Example: Invented Statistics

When asked for specific statistics, LLMs may generate plausible-sounding numbers that don’t correspond to any real data source. These hallucinations often include precise figures (e.g., “42.7% of consumers prefer…”) that convey false authority.

Number Generation Patterns: Models learn that statistics in training data typically fall within certain ranges for different contexts. Economic growth rates are often quoted in the 2-5% range, and survey results cluster around psychologically significant numbers (25%, 50%, 75%). Hallucinated statistics follow these learned distributions, which is why they look plausible.

Calculation Errors: LLMs struggle with arithmetic and mathematical reasoning, often hallucinating incorrect calculations while showing detailed “working” that appears rigorous but contains subtle errors.
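Simple arithmetic claims are among the easiest hallucinations to catch mechanically. The sketch below scans text for claims of the form "a op b = c" and recomputes them. The regular expression only covers non-negative numbers and the four basic operators, and the sample sentence is invented.

```python
import operator
import re

CLAIM = re.compile(
    r"(\d+(?:\.\d+)?)\s*([+\-*/])\s*(\d+(?:\.\d+)?)\s*=\s*(\d+(?:\.\d+)?)"
)
OPS = {"+": operator.add, "-": operator.sub,
       "*": operator.mul, "/": operator.truediv}

def check_arithmetic(text, tolerance=1e-9):
    """Find 'a op b = c' claims and report whether each one holds."""
    results = []
    for a, op, b, claimed in CLAIM.findall(text):
        a, b, claimed = float(a), float(b), float(claimed)
        if op == "/" and b == 0:
            continue  # skip undefined divisions
        actual = OPS[op](a, b)
        is_correct = abs(actual - claimed) <= tolerance
        results.append((f"{a:g} {op} {b:g}", claimed, actual, is_correct))
    return results

sample = "Revenue was 1200 + 350 = 1550, and 17 * 23 = 381."
for expression, claimed, actual, is_correct in check_arithmetic(sample):
    status = "ok" if is_correct else f"WRONG (actual {actual:g})"
    print(f"{expression} = {claimed:g}: {status}")
```

The second claim in the sample is wrong (17 * 23 is 391), which is exactly the kind of subtle slip that survives a quick read.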

Detecting LLM Hallucination: Practical Strategies

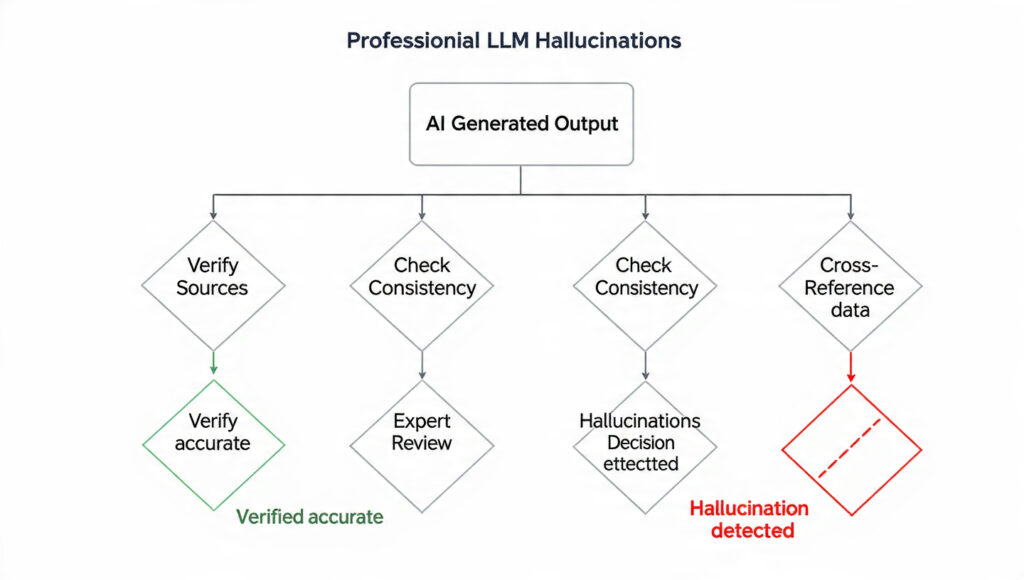

Identifying hallucinations requires systematic approaches combining technical verification, domain knowledge, and critical analysis. No single method catches all hallucinations, necessitating multiple complementary strategies.

Strategy 1: Citation and Source Verification

Always Verify Claims Against Primary Sources: Never accept factual claims, statistics, or citations without checking authoritative sources. Search for cited papers in Google Scholar, PubMed, or relevant databases. Look up legal cases in proper legal research platforms.

Check Author and Publication Details: Hallucinated papers often combine real author names with fabricated titles or pair real journal names with non-existent articles. Verify complete citations including volume, issue, and page numbers.

Cross-Reference Multiple Sources: Compare LLM-generated information against multiple independent authoritative sources. Genuine facts appear consistently across reputable sources; hallucinations typically lack independent confirmation.

Watch for Over-Specificity Without Sources: Hallucinations often include suspiciously precise details (exact percentages, specific dates) without attribution. Genuine statistics typically cite data sources.

Strategy 2: Internal Consistency Checking

Compare Multiple Responses: Ask the same question multiple times or rephrase queries. Hallucinations often vary between attempts while accurate information remains consistent.

Look for Contradictions: Within a single response or across conversation turns, check whether the model contradicts itself. Hallucinations based on weak patterns may conflict with earlier statements.

Test Related Questions: Ask follow-up questions probing details. Genuine knowledge connects coherently across related queries; hallucinations reveal inconsistencies under examination. If a model claims a specific event occurred in 1987, ask about consequences or related events—hallucinations often lack coherent connections.

Timeline Verification: Check whether claimed events, publications, or developments align temporally. Hallucinations sometimes place events anachronistically or violate causal ordering.
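Comparing multiple responses can be partly automated. As a rough sketch, the code below scores how much a set of free-text answers overlap using Jaccard similarity on word sets. This is a crude lexical proxy, not a semantic comparison, and the sample answers are invented.

```python
import re
from itertools import combinations

def token_set(text):
    """Lowercase word and number tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def jaccard(a, b):
    """Shared tokens divided by all tokens; 1.0 means identical sets."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0

def mean_pairwise_agreement(responses):
    """Average Jaccard similarity over every pair of responses."""
    sets = [token_set(r) for r in responses]
    pairs = list(combinations(sets, 2))
    if not pairs:
        return 1.0  # a single response trivially agrees with itself
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)

stable = ["The treaty was signed in 1987."] * 3
unstable = [
    "The treaty was signed in 1987.",
    "It was signed in 1995 in Geneva.",
    "Nobody signed the treaty until 2001.",
]
print(mean_pairwise_agreement(stable))
print(round(mean_pairwise_agreement(unstable), 2))
```

A low score is a prompt to look closer, not proof of hallucination: paraphrases of a correct answer also lower lexical overlap.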

Strategy 3: Confidence and Uncertainty Analysis

Note Qualification Language: Genuine expertise acknowledges uncertainty and limitations. Excessive confidence without caveats suggests potential hallucination, especially for complex or controversial topics.

Ask About Confidence: Explicitly request the model to assess its confidence and explain reasoning. While models can hallucinate confidence assessments, prompting reflection sometimes surfaces uncertainty.

Check for Hedging Patterns: Models trained to avoid hallucination often include careful qualification language (“based on my training data,” “typically,” “generally”). Lack of any hedging in factual claims may indicate hallucination.

Vagueness as Red Flag: Conversely, hallucinations sometimes feature vague, general statements that sound authoritative but lack specificity, allowing the model to avoid verifiable claims while maintaining confident tone.

Strategy 4: Domain Expert Review

Subject Matter Expert Verification: For specialized domains (medical, legal, technical), have qualified experts review LLM outputs. Domain expertise enables spotting subtle hallucinations that general users might miss.

Professional Tools and Databases: Use specialized verification tools and professional databases in relevant fields. Medical information should be checked against databases like UpToDate or peer-reviewed journals, not just general web search.

Community Verification: Technical communities like Stack Overflow, GitHub, or specialized forums can help verify programming solutions and technical claims. If a supposedly common solution has no community discussion, it may be hallucinated.

Red Team Testing: Deliberately try to elicit hallucinations through challenging prompts about obscure topics, edge cases, or information likely missing from training data. Understanding a model’s hallucination patterns improves detection.

Strategy 5: Technical Detection Methods

Retrieval-Augmented Generation (RAG): Implement systems that ground LLM responses in retrieved documents from verified sources. The model generates responses based on explicitly provided documents rather than relying solely on training memory.

Uncertainty Quantification Tools: Use emerging tools that estimate model uncertainty, flagging low-confidence outputs for human review. While imperfect, these provide additional signals about potential hallucinations.

Fact-Checking APIs: Integrate automated fact-checking services that compare LLM claims against structured knowledge bases and fact-checking databases, flagging potential inconsistencies.

Output Monitoring: For production applications, implement logging and monitoring systems tracking LLM outputs, enabling pattern analysis to identify systematic hallucination issues.

Strategy 6: Prompt Engineering for Reduced Hallucination

Request Sources: Explicitly ask the model to provide sources or acknowledge when information is uncertain. Prompts like “cite sources for factual claims” or “indicate confidence level” sometimes reduce hallucinations.

Provide Context: Supply relevant documents or information in the prompt rather than relying on the model’s training memory. This grounds responses in verifiable material.

Specify Uncertainty Handling: Instruct the model to acknowledge knowledge gaps rather than guessing. Prompts like “If you don’t have reliable information, say so rather than guessing” help, though they don’t eliminate hallucinations.

Constrain Output: Request specific output formats requiring structured information that’s easier to verify. Asking for bullet points with sources for each claim facilitates checking compared to narrative responses.
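These four prompt techniques combine naturally into one template. The function below is a hypothetical helper that assembles such a prompt; the exact wording of the instructions is an assumption and should be tuned for the model in use.

```python
def build_grounded_prompt(question, context_documents):
    """Assemble a prompt that supplies context and asks for cited, hedged answers."""
    numbered = "\n".join(
        f"[{i}] {doc}" for i, doc in enumerate(context_documents, start=1)
    )
    return (
        "Answer the question using only the numbered sources below.\n"
        "Give the answer as bullet points, one claim per bullet.\n"
        "Cite the source number in brackets after each claim.\n"
        "If the sources do not contain the answer, say so rather than guessing.\n\n"
        f"Sources:\n{numbered}\n\n"
        f"Question: {question}\n"
    )

prompt = build_grounded_prompt(
    "How did revenue change?",
    ["Revenue increased 15%.", "Operating costs were flat."],
)
print(prompt)
```

Numbering the sources makes each claim in the response traceable to a specific document, which is what makes later verification practical.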

Preventing and Mitigating LLM Hallucination

While eliminating hallucinations entirely remains an open research challenge, multiple strategies can significantly reduce hallucination rates and limit their impact.

Approach 1: Retrieval-Augmented Generation (RAG)

RAG systems retrieve relevant documents from verified knowledge bases before generating responses, grounding outputs in authoritative sources rather than relying on training memory.

How RAG Works:

- Convert user query into search query

- Retrieve relevant documents from curated knowledge base

- Include retrieved documents in prompt context

- Generate response based on retrieved information

- Provide citations to source documents
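The steps above can be sketched end to end. In this minimal version, word overlap stands in for vector search, the knowledge base is an invented dictionary, and `generate` is a placeholder for whatever LLM call a real system would make.

```python
import re

def tokenize(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, knowledge_base, top_n=2):
    """Rank documents by word overlap with the query (stand-in for vector search)."""
    query_terms = tokenize(query)
    scored = []
    for doc_id, text in knowledge_base.items():
        overlap = len(query_terms & tokenize(text))
        if overlap:
            scored.append((overlap, doc_id))
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [doc_id for _, doc_id in scored[:top_n]]

def answer_with_rag(query, knowledge_base, generate, top_n=2):
    """Retrieve, build a grounded prompt, generate, and return citations."""
    doc_ids = retrieve(query, knowledge_base, top_n)
    context = "\n".join(f"[{d}] {knowledge_base[d]}" for d in doc_ids)
    prompt = f"Use only these sources:\n{context}\n\nQuestion: {query}"
    return {"answer": generate(prompt), "citations": doc_ids}

# Invented knowledge base for illustration.
kb = {
    "policy-1": "A refund is available within 30 days of purchase.",
    "policy-2": "Shipping takes five business days.",
    "policy-3": "Gift cards cannot be refunded.",
}
query = "What is the refund window after purchase?"
# Placeholder generator that just echoes the prompt it was given.
result = answer_with_rag(query, kb, generate=lambda prompt: prompt)
print(result["citations"])
```

Note that "refunded" in policy-3 does not match "refund" under exact word overlap, a small reminder that retrieval quality limits everything downstream.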

Benefits for Hallucination Reduction:

- Grounds responses in verifiable sources

- Enables knowledge updates without retraining

- Provides clear attribution for claims

- Reduces reliance on potentially faulty training memory

Limitations:

- Retrieval quality critically affects performance

- Poorly maintained knowledge bases introduce errors

- Models may still hallucinate details not explicit in retrieved documents

- Increased computational cost and latency

Implementation Considerations: RAG requires building and maintaining high-quality document collections, implementing effective retrieval systems (often using vector databases), and designing prompts that properly utilize retrieved information.

Approach 2: Chain-of-Thought and Verification Prompting

Encouraging models to show reasoning steps and verify conclusions can reduce certain hallucination types by making the generation process more deliberate.

Chain-of-Thought Prompting: Request step-by-step reasoning before final answers. This technique, detailed in research by Google, improves reasoning quality and sometimes surfaces potential hallucinations as models “think through” responses.

Self-Consistency Checking: Generate multiple reasoning paths for the same question, then take the most common answer. If five independent reasoning chains produce four different answers, this signals potential hallucination.

Verification Prompts: After generating a response, prompt the model to critique its own answer, check for errors, or verify claims. While models can hallucinate during verification too, this additional step catches some hallucinations.
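Self-consistency reduces to a majority vote over the final answers. The sketch below assumes the answers have already been extracted from each reasoning chain; the 0.6 agreement threshold is an arbitrary illustrative default.

```python
from collections import Counter

def self_consistency(answers, min_agreement=0.6):
    """Return (majority answer, agreement ratio, whether it clears the threshold)."""
    normalised = [a.strip().lower() for a in answers]
    if not normalised:
        raise ValueError("need at least one answer")
    winner, votes = Counter(normalised).most_common(1)[0]
    agreement = votes / len(normalised)
    return winner, agreement, agreement >= min_agreement

# Invented final answers from five sampled reasoning chains.
print(self_consistency(["1995", "1995", "1987", "1995", "1995"]))
print(self_consistency(["1987", "1995", "2001", "1990", "1987"]))
```

In the second call five chains produce four different answers, so the majority answer falls below the threshold and should be treated as unreliable.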

Approach 3: Fine-Tuning and Training Improvements

Organizations deploying LLMs can reduce hallucinations through specialized training:

Domain-Specific Fine-Tuning: Training models on curated, high-quality domain-specific data improves accuracy within that domain. Medical LLMs fine-tuned on peer-reviewed literature hallucinate less on medical topics than general models.

Uncertainty Training: Explicitly train models to express uncertainty and refuse to answer when appropriate. This requires training datasets including examples where the correct response is “I don’t know” or “I’m uncertain.”

Factual Consistency Training: Train models specifically on tasks requiring factual consistency and citation accuracy, penalizing hallucinations during the training process.

Reinforcement Learning Adjustments: Modify RLHF to explicitly reward factual accuracy and penalize hallucinations. This requires raters with domain expertise and access to verification resources.

Approach 4: Multi-Model Validation

Using multiple independent models or model versions provides cross-validation opportunities:

Consensus Checking: Query multiple models or the same model multiple times. Agreement across independent generations increases confidence; disagreement signals potential hallucination.

Specialized Model Collaboration: Use general models for broad queries and specialized models for domain-specific verification. For medical questions, cross-check general LLM outputs against specialized medical AI systems.

Version Comparison: Compare outputs across models and model versions. Consistent answers across GPT-3.5, GPT-4, and Claude provide stronger confidence than output from a single model, though models trained on overlapping data can share the same errors.

Approach 5: Human-in-the-Loop Systems

Critical applications require human oversight:

Expert Review Workflows: Route LLM outputs through subject matter experts who verify factual accuracy before information reaches end users. This catches hallucinations before they cause harm.

Confidence-Based Routing: Automatically route low-confidence outputs to human reviewers while allowing high-confidence responses through with less oversight. Calibrating these confidence thresholds requires careful tuning.

Feedback Loops: Systematically collect user feedback identifying hallucinations, using this data to improve prompts, retrieval systems, and training approaches over time.

Tiered Verification: Implement multiple verification levels based on stakes. Low-stakes applications like creative writing assistance need minimal verification; high-stakes medical or legal applications require extensive checking.
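Confidence-based routing and tiered verification can share one small decision function. The thresholds below are hypothetical and would need calibration against real review outcomes. The high-stakes threshold is set above 1.0 so that such outputs always go to a human.

```python
# Hypothetical thresholds per stakes level; tune these against real data.
REVIEW_THRESHOLDS = {
    "low": 0.5,     # e.g. creative writing assistance
    "medium": 0.8,
    "high": 1.01,   # above any possible confidence: always reviewed
}

def route_output(confidence, stakes):
    """Decide whether an output can skip human review."""
    if stakes not in REVIEW_THRESHOLDS:
        raise ValueError(f"unknown stakes level: {stakes!r}")
    threshold = REVIEW_THRESHOLDS[stakes]
    return "auto_approve" if confidence >= threshold else "human_review"

print(route_output(0.9, "low"))
print(route_output(0.7, "medium"))
print(route_output(0.99, "high"))
```

Keeping the thresholds in one table makes the policy easy to audit and adjust as feedback accumulates.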

Approach 6: Output Formatting and Attribution

How information is presented affects hallucination impact:

Mandatory Citations: Configure systems to require source citations for factual claims. While models may still hallucinate sources, this requirement at least prompts attempts at attribution.

Confidence Indicators: Display confidence levels alongside outputs, helping users calibrate trust. Visual indicators (color coding, confidence scores) make uncertainty salient.

Disclaimer Language: Include clear disclaimers about AI limitations and hallucination risks, especially for critical domains. Set appropriate user expectations.

Structured Output Formats: Use templates requiring specific formats that facilitate verification. Structured data with clear fields is easier to validate than narrative text.
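Structured output is only useful if it is actually validated. The sketch below assumes the model was asked to return JSON with a "claims" list where each claim carries a "sources" list. That schema and the source IDs are invented for illustration.

```python
import json

def validate_claims(raw_json, known_source_ids):
    """Report claims whose citations are missing or point to unknown sources."""
    try:
        payload = json.loads(raw_json)
    except json.JSONDecodeError as exc:
        return [f"output is not valid JSON: {exc.msg}"]
    claims = payload.get("claims") if isinstance(payload, dict) else None
    if not isinstance(claims, list):
        return ["missing 'claims' list"]
    problems = []
    for index, claim in enumerate(claims):
        sources = claim.get("sources", []) if isinstance(claim, dict) else []
        if not sources:
            problems.append(f"claim {index} has no sources")
        for source in sources:
            if source not in known_source_ids:
                problems.append(f"claim {index} cites unknown source {source!r}")
    return problems

known = {"doc-1", "doc-2"}
good = '{"claims": [{"text": "Revenue increased 15%.", "sources": ["doc-1"]}]}'
bad = ('{"claims": [{"text": "x", "sources": ["doc-9"]},'
       ' {"text": "y", "sources": []}]}')
print(validate_claims(good, known))
print(validate_claims(bad, known))
```

This catches citations to sources that were never supplied. It does not confirm that a cited source supports the claim, which still needs a human or a separate check.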

The Future of LLM Hallucination Research

Addressing hallucinations remains a central challenge in AI research, with multiple promising directions under active investigation.

Emerging Technical Approaches

Grounded Language Models: Research focuses on architectures that maintain explicit connections between generated text and source materials. Such models would track provenance for the text they generate, enabling verification and reducing untraceable hallucinations.

Uncertainty-Aware Architectures: Next-generation models may incorporate built-in uncertainty estimation, explicitly representing confidence in different knowledge domains and alerting users to high-uncertainty outputs.

Retrieval-Native Models: Rather than retrofitting retrieval onto existing LLMs, new architectures design retrieval as core functionality, fundamentally integrating knowledge retrieval into the generation process.

Formal Verification Integration: Combining LLMs with formal verification systems from software engineering could enable automatic checking of logical consistency and factual accuracy for certain claim types.

Improved Evaluation Methods

Hallucination Benchmarks: Researchers develop specialized benchmarks measuring hallucination rates across diverse domains, enabling systematic comparison of mitigation strategies and tracking progress over time.

Automated Detection Systems: Machine learning systems trained specifically to detect hallucinations in LLM outputs could provide real-time monitoring and quality control for production applications.

Domain-Specific Metrics: Generic hallucination metrics fail to capture domain-specific concerns. Specialized evaluation frameworks for medical, legal, technical, and other high-stakes domains provide more meaningful assessment.
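
The simplest benchmark metric is a hallucination rate: the share of responses that reviewers marked as hallucinated. The sketch below computes it per domain and overall. The labels are made-up illustration data, not measurements, and a single pooled rate is shown mainly to make the point that it can hide large differences between domains.

```python
from collections import defaultdict


def hallucination_rates(labels: list[tuple[str, bool]]) -> dict[str, float]:
    """Map each domain to the fraction of its responses labeled as hallucinated."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for domain, is_hallucination in labels:
        totals[domain] += 1
        flagged[domain] += int(is_hallucination)
    return {domain: flagged[domain] / totals[domain] for domain in totals}


# Made-up labels for illustration only: (domain, reviewer marked as hallucination).
labels = [
    ("medical", True), ("medical", False), ("medical", False), ("medical", False),
    ("legal", True), ("legal", True), ("legal", False), ("legal", False),
]
rates = hallucination_rates(labels)
overall = sum(flag for _, flag in labels) / len(labels)
print(rates, overall)
```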

Regulatory and Standardization Efforts

AI Safety Standards: Regulatory bodies increasingly recognize hallucination risks, with emerging standards requiring hallucination testing and mitigation for certain AI applications.

Transparency Requirements: Regulations may mandate disclosure of known hallucination rates and limitations for AI systems deployed in critical domains, enabling informed risk assessment by users and regulators.

Liability Frameworks: Legal frameworks governing liability for AI-generated hallucinations continue evolving, potentially creating incentives for improved hallucination mitigation.

Interdisciplinary Collaboration

Cognitive Science Integration: Understanding human reasoning errors and uncertainty representation informs better AI architectures. Cognitive science research on how humans avoid confabulation provides design insights.

Philosophy of Knowledge: Epistemological frameworks help clarify what “knowledge” means for AI systems and how to distinguish justified true belief from plausible confabulation.

Domain Expert Partnership: Close collaboration between AI researchers and domain experts in medicine, law, science, and other fields produces more effective mitigation strategies tailored to domain-specific risks.

Frequently Asked Questions About LLM Hallucination

What causes LLM hallucination in AI models?

LLM hallucination stems from fundamental architecture choices in language models. These systems predict statistically likely text based on training patterns without verifying factual accuracy. Key causes include training data limitations, pressure to always generate complete responses, lack of truth verification mechanisms, overconfidence in predictions, and prompt ambiguity forcing assumptions.

How can I detect if an LLM is hallucinating?

Detect LLM hallucination through multiple strategies: verify cited sources against authoritative databases, check for internal contradictions across multiple queries, request confidence levels explicitly, have domain experts review technical content, test consistency by asking questions multiple ways, and watch for suspiciously specific details without attribution or excessive confidence without qualification.
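
One of these checks, watching for specific details without attribution, can be roughly automated. The sketch below flags sentences that contain a number but no recognizable attribution. Both regular expressions are crude assumptions chosen for illustration; a flagged sentence is a candidate for review, not a confirmed hallucination.

```python
import re

# Any digit counts as a "specific detail": dates, percentages, counts.
SPECIFIC = re.compile(r"\d")
# Very rough attribution markers: [3], (Author 2020), "according to", or a URL.
ATTRIBUTION = re.compile(
    r"\[\d+\]|\([^()]*\d{4}\)|according to|https?://", re.IGNORECASE
)


def flag_unattributed(text: str) -> list[str]:
    """Return sentences that state numeric details without any attribution marker."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [
        s for s in sentences
        if SPECIFIC.search(s) and not ATTRIBUTION.search(s)
    ]


text = (
    "The drug reduced symptoms in 73.4% of patients. "
    "According to the 2021 trial report, dosing was weekly. "
    "It is widely used."
)
print(flag_unattributed(text))
```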

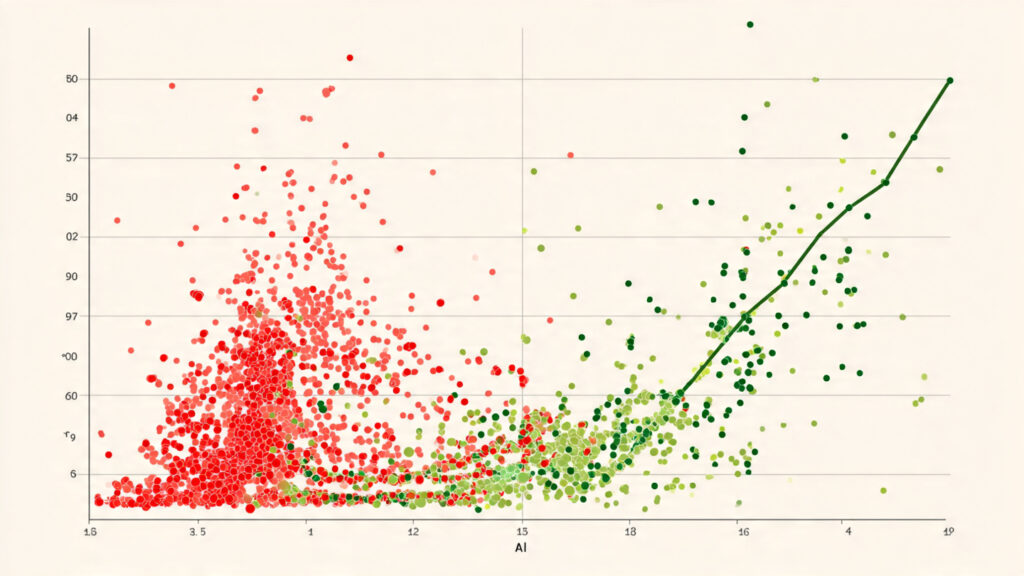

Are newer AI models like GPT-4 less prone to hallucination?

Newer models generally hallucinate less frequently than their predecessors, but hallucinations haven’t been eliminated. GPT-4 shows improvement over GPT-3.5, particularly in maintaining consistency and following instructions, but still produces confident false information, with reported rates of roughly 3-15% of responses depending on domain and task complexity. Hallucination remains an active limitation even in state-of-the-art models.

Can LLM hallucination be completely eliminated?

Current architectures cannot completely eliminate LLM hallucination. The fundamental next-token prediction mechanism lacks truth verification. However, combining multiple mitigation strategies—retrieval-augmented generation, improved training, human oversight, and uncertainty quantification—can reduce hallucination rates significantly. Future architectures may address these limitations more fundamentally.

Why do LLMs hallucinate citations and references?

LLMs hallucinate citations because training data contains extensive citation patterns. Models learn structural formats (author names, journals, dates, citation styles) and generate novel combinations following these patterns without verifying actual existence. Training on millions of real citations teaches formats without teaching the distinction between real and fabricated references.
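
The gap between a well-formed reference and a real one is easy to demonstrate. In the sketch below, a format check accepts any string shaped like a DOI, and only a lookup tells real from fabricated. The DOI pattern is a simplified approximation, and `KNOWN_DOIS` is a hypothetical stand-in for an authoritative bibliographic database; the identifiers in it are invented.

```python
import re

# Simplified approximation of the shape of a DOI string.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/\S+$")

# Stand-in for an authoritative index; a real system would query a database.
KNOWN_DOIS = {"10.1000/example.001"}


def check_citation(doi: str) -> str:
    """Classify a DOI as malformed, verified, or well-formed but not found."""
    if not DOI_PATTERN.match(doi):
        return "malformed"
    return "verified" if doi in KNOWN_DOIS else "well-formed but not found"


for doi in ["10.1000/example.001", "10.1000/example.999", "doi-unknown"]:
    print(doi, "->", check_citation(doi))
```

The second identifier passes the format check just as well as the first. A model that has learned only the format has no way to tell them apart, which is why the lookup step is needed.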

Which domains are most affected by LLM hallucination?

Medical, legal, and scientific domains face the highest hallucination risks because they demand specialized knowledge and errors carry serious consequences. Historical information, technical documentation, statistical data, and biographical details also frequently trigger hallucinations. Conversational and creative applications suffer fewer critical impacts because factual accuracy matters less there.

How does prompt engineering reduce LLM hallucination?

Prompt engineering mitigates hallucinations by providing clear context, requesting sources explicitly, specifying uncertainty handling (“say ‘I don’t know’ rather than guessing”), including relevant documents directly in prompts, constraining output formats, and breaking complex queries into verifiable sub-questions. While helpful, prompt engineering alone cannot eliminate hallucinations completely.
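
Several of these techniques fit into a single prompt template. The sketch below shows one possible layout, combining included documents, a citation request, and explicit uncertainty handling. The function name and the exact wording of the instructions are illustrative assumptions, not a recommended standard.

```python
def build_prompt(question: str, documents: list[str]) -> str:
    """Assemble a grounded prompt: instructions, numbered sources, then the question."""
    sources = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(documents, start=1))
    return (
        "Answer using only the sources below.\n"
        "Cite the source number for each factual claim, e.g. [1].\n"
        "If the sources do not contain the answer, say \"I don't know\" "
        "rather than guessing.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\n"
    )


print(build_prompt("How did revenue change?", ["Revenue increased 15%."]))
```

Numbering the sources makes each citation in the response checkable against a specific document, which ties the prompt back to the verification steps described earlier.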

What is the difference between hallucination and creativity in LLMs?

Both involve generating novel content. Creativity produces intentionally imaginative output that operates explicitly in fictional space, while hallucination produces unintentionally false information presented as fact. The key distinction lies in context and framing: creative outputs are understood as imaginative, while hallucinations mislead by appearing factual.

Conclusion: Living with LLM Hallucination

Understanding LLM hallucination fundamentally changes how we interact with AI language models. These powerful systems generate impressively human-like text through sophisticated pattern matching, but this same capability produces confident fabrications when knowledge gaps arise. Hallucinations aren’t occasional bugs—they’re inherent consequences of current architectures that predict statistically likely text without verifying truth.

The technical mechanisms behind LLM hallucination (training data limitations, completion pressure, context constraints, overconfidence, and lack of verification systems) reveal why eliminating hallucinations entirely remains an unsolved problem. Models don’t “know” facts or “understand” truth; they sample from learned probability distributions over text continuations. High probability doesn’t guarantee accuracy, only statistical plausibility based on training patterns.

Recognition of these limitations enables responsible AI usage. Critical applications demand verification workflows: checking citations against databases, cross-referencing multiple sources, employing domain experts for review, implementing retrieval-augmented systems grounding responses in verified documents, and maintaining human oversight for high-stakes decisions. Creative and exploratory applications tolerate more hallucination risk, but even casual users benefit from understanding when to trust versus verify LLM outputs.

The future promises incremental improvements through better architectures, enhanced training methods, retrieval integration, and uncertainty quantification. However, expecting perfect reliability from current language models invites disappointment and potential harm. Instead, leverage LLMs’ remarkable capabilities—rapid ideation, writing assistance, code scaffolding, explanation generation, creative exploration—while maintaining appropriate skepticism and verification practices.

Your relationship with AI language models should mirror how you’d work with a knowledgeable but occasionally overconfident colleague: value their contributions, verify important claims, understand their limitations, and recognize when expert consultation is necessary. As these systems evolve, hallucination will likely decrease but is unlikely to vanish entirely under current architectures, so understanding these mechanisms becomes more valuable as AI integrates more deeply into professional and personal workflows.

The question isn’t whether LLMs hallucinate—they do, and will continue to—but how we build systems, workflows, and mental models that harness their power while protecting against their weaknesses. Armed with this understanding of hallucination mechanisms, detection strategies, and mitigation approaches, you’re prepared to use language models effectively while avoiding their most serious pitfalls.