Supervised vs Unsupervised Learning: Complete Guide with Real-World Examples

Understanding the Foundation of Machine Learning

Machine learning powers countless technologies we use daily—from Netflix recommendations to spam filters, medical diagnosis systems to financial fraud detection. At the heart of these applications lie two fundamental approaches: supervised learning and unsupervised learning. Understanding the distinction between these methods is essential for anyone working with data science, artificial intelligence, or machine learning applications.

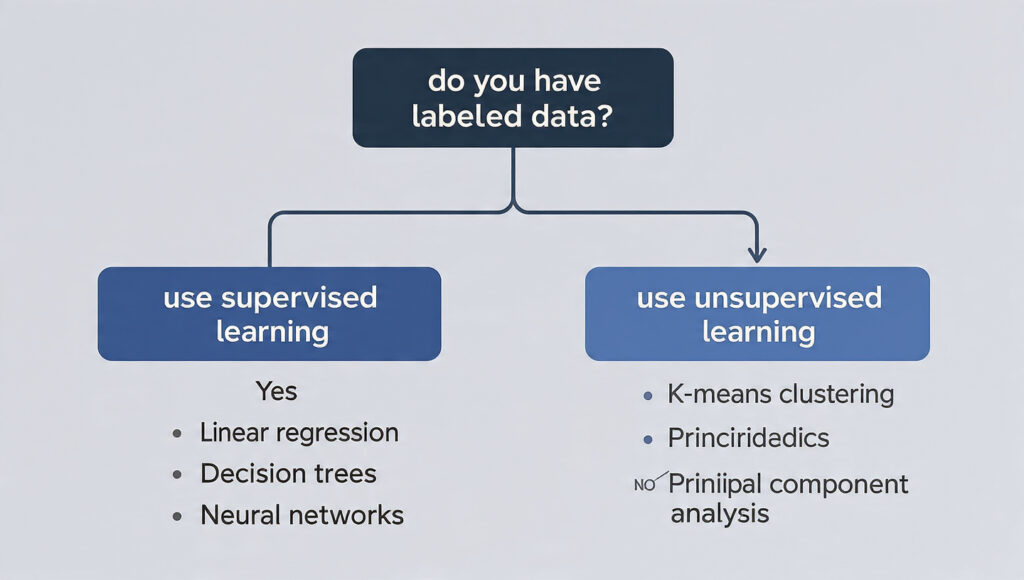

The difference between supervised and unsupervised learning fundamentally comes down to one factor: labeled training data. Supervised learning uses datasets where correct answers are provided, allowing algorithms to learn from examples. Unsupervised learning works with unlabeled data, discovering hidden patterns without predefined categories. This seemingly simple distinction creates vastly different capabilities, applications, and challenges.

What is Supervised Learning?

Supervised learning is the most intuitive approach to machine learning: teaching algorithms through examples with known correct answers. Imagine teaching a child to identify animals by showing pictures and providing labels: “This is a dog,” “This is a cat,” “This is a bird.” The child learns to recognize the features that distinguish each animal. Supervised learning works in much the same way, learning patterns from labeled training data.

The Core Mechanism of Supervised Learning

Training Process:

- Provide labeled data: Each training example includes input features and the correct output (label)

- Algorithm learns patterns: The model identifies relationships between inputs and outputs

- Make predictions: Apply learned patterns to new, unseen data

- Measure accuracy: Compare predictions against known correct answers

- Adjust and improve: Refine the model based on errors

Key Characteristic: Every training example comes with a “teacher’s answer”—the correct label or value the algorithm should predict. This supervision guides learning, hence the name “supervised learning.”
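A minimal sketch of the five training steps, using a perceptron (a simple linear classifier picked here purely for illustration) on made-up two-feature data. The data, learning rate, and epoch count are all assumptions:

```python
# Step 1: labeled data as (features, label). Label is 1 when x + y > 1, else 0.
train = [((0.0, 0.0), 0), ((0.2, 0.3), 0), ((0.5, 0.1), 0), ((0.1, 0.6), 0),
         ((1.0, 1.0), 1), ((0.9, 0.7), 1), ((0.6, 0.9), 1), ((1.2, 0.4), 1)]
# Held-out examples the model never sees during training.
test = [((0.3, 0.2), 0), ((0.8, 0.9), 1), ((0.1, 0.1), 0), ((1.0, 0.8), 1)]

def predict(weights, bias, x):
    # Step 3: apply the learned pattern to an input.
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0

def train_perceptron(data, epochs=200, lr=0.1):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, label in data:
            # Steps 2 and 5: compare the prediction with the known label
            # and nudge the weights only when the prediction is wrong.
            error = label - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

def accuracy(weights, bias, data):
    # Step 4: fraction of predictions that match the known answers.
    correct = sum(predict(weights, bias, x) == label for x, label in data)
    return correct / len(data)

weights, bias = train_perceptron(train)
train_accuracy = accuracy(weights, bias, train)
test_accuracy = accuracy(weights, bias, test)
print(f"train accuracy: {train_accuracy:.2f}, test accuracy: {test_accuracy:.2f}")
```

The labels are what make the update rule possible: without a known answer for each example there is no error to correct.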

Types of Supervised Learning

Classification: Predicting discrete categories or classes. Examples include spam detection (spam/not spam), disease diagnosis (positive/negative), or image recognition (cat/dog/bird). The output is a categorical label.

Regression: Predicting continuous numerical values. Examples include house price prediction, temperature forecasting, or stock price estimation. The output is a number on a continuous scale.

Real-World Example 1: Email Spam Detection

The Problem: Email providers need to automatically identify spam messages to protect users from unwanted content and potential security threats.

Supervised Learning Solution:

Training Data Collection: Gather thousands of emails, each labeled as “spam” or “legitimate” by human reviewers. This creates the supervised dataset with known correct answers.

Feature Extraction: Convert emails into measurable features:

- Presence of specific words (“congratulations,” “winner,” “click here”)

- Sender domain characteristics

- Email structure (HTML complexity, number of links)

- Frequency of capital letters and exclamation marks

- Presence of attachments

Model Training: Feed labeled examples to algorithms like Naive Bayes or Support Vector Machines. The model learns which feature patterns correlate with spam versus legitimate email.

Prediction: When new email arrives, extract features and apply the trained model. The algorithm predicts “spam” or “legitimate” based on learned patterns.
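As a rough illustration of the training and prediction steps, here is a tiny Naive Bayes classifier over word counts. The six emails are invented, and a real filter would use far more data plus the other features listed above; this sketch only uses word presence:

```python
import math
from collections import Counter

# Hypothetical labeled emails: (text, label).
emails = [
    ("congratulations you are a winner click here", "spam"),
    ("click here to claim your free prize", "spam"),
    ("winner winner free money click now", "spam"),
    ("meeting agenda for monday attached", "legitimate"),
    ("can we move the project review to friday", "legitimate"),
    ("here are the notes from the team meeting", "legitimate"),
]

def train_naive_bayes(examples):
    word_counts = {"spam": Counter(), "legitimate": Counter()}
    class_counts = Counter()
    for text, label in examples:
        class_counts[label] += 1
        word_counts[label].update(text.split())
    vocab = set(word_counts["spam"]) | set(word_counts["legitimate"])
    return word_counts, class_counts, vocab

def classify(text, model):
    word_counts, class_counts, vocab = model
    total = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        # Log prior plus log likelihoods, with add-one (Laplace) smoothing
        # so unseen word/class combinations do not zero out the score.
        score = math.log(class_counts[label] / total)
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.split():
            if word in vocab:  # words never seen in training are skipped
                score += math.log((word_counts[label][word] + 1) / denom)
        scores[label] = score
    return max(scores, key=scores.get)

model = train_naive_bayes(emails)
first = classify("click here free winner", model)
second = classify("notes for the monday meeting", model)
print(first, second)
```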

Real Implementation: Gmail’s spam filter relies on supervised learning trained on enormous volumes of labeled email, including messages that users report as spam. Google states that Gmail blocks more than 99.9% of spam, phishing, and malware while rarely misclassifying legitimate messages.

Why Supervised Learning Works Here: Clear binary classification (spam/not spam), abundant labeled data from user reports, and measurable success criteria make this ideal for supervised approaches.

Real-World Example 2: Medical Diagnosis – Diabetes Prediction

The Problem: Healthcare providers want to identify patients at high risk for diabetes to enable early intervention and prevention.

Supervised Learning Solution:

Training Data: Medical records from thousands of patients, each labeled with diabetes diagnosis (positive/negative). Records include:

- Blood glucose levels

- BMI (Body Mass Index)

- Age and family history

- Blood pressure

- Physical activity levels

- Cholesterol levels

Model Training: Algorithms like Random Forests or Logistic Regression learn which combinations of health indicators predict diabetes likelihood.

Clinical Application: When examining a new patient, input their health metrics into the trained model. The system predicts diabetes risk with associated probability, helping physicians make informed decisions about testing and intervention.
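To make the "risk with associated probability" idea concrete, the sketch below fits a logistic regression by plain gradient descent. The eight patients, the two standardized features (`glucose_z`, `bmi_z`), and the hyperparameters are hypothetical; nothing here is clinical guidance:

```python
import math

# Hypothetical standardized features (glucose_z, bmi_z) with diagnosis labels.
patients = [
    ((-1.2, -0.8), 0), ((-0.5, -1.0), 0), ((-0.9, 0.1), 0), ((0.0, -0.6), 0),
    ((0.8, 0.9), 1), ((1.4, 0.3), 1), ((0.6, 1.2), 1), ((1.1, 1.0), 1),
]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    # Probability that the label is 1 (diabetes positive).
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def fit_logistic(data, lr=0.5, epochs=500):
    weights, bias = [0.0, 0.0], 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in data:
            # Gradient of the log loss: (predicted probability - label) * feature.
            err = predict_proba(weights, bias, x) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

weights, bias = fit_logistic(patients)
high_risk = predict_proba(weights, bias, (1.0, 0.8))
low_risk = predict_proba(weights, bias, (-1.0, -0.7))
print(f"high-risk patient: {high_risk:.2f}, low-risk patient: {low_risk:.2f}")
```

Returning a probability rather than a hard yes/no lets the physician choose the threshold at which further testing is warranted.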

Real Impact: The Pima Indians Diabetes Database is a widely used benchmark in medical ML research. Standard supervised models typically reach roughly 75-80% accuracy on it, which illustrates how routine clinical measurements can flag at-risk patients for follow-up testing.

Why Supervised Learning Works Here: Historical medical records provide labeled outcomes (who developed diabetes), measurable clinical features create strong predictive signals, and clear classification criteria enable accurate evaluation.

Real-World Example 3: House Price Prediction

The Problem: Real estate platforms need to estimate property values for buyers, sellers, and investors without manual appraisal for every property.

Supervised Learning Solution:

Training Data: Historical sales data with actual sold prices (labels) and property characteristics:

- Square footage and lot size

- Number of bedrooms and bathrooms

- Location (neighborhood, zip code)

- Age and condition

- Nearby amenities (schools, parks, transit)

- Recent comparable sales

Model Training: Regression algorithms like Linear Regression, Gradient Boosting, or Neural Networks learn relationships between property features and sale prices.

Price Estimation: Input characteristics of any property, and the model predicts expected market value based on learned patterns from thousands of actual sales.
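A one-feature version of this regression can be written in a few lines with ordinary least squares. The four sales below are invented and deliberately follow price = 50,000 + 150 × square feet exactly, so the fitted line is easy to check by hand:

```python
# Hypothetical sales: (square_feet, sale_price_usd).
sales = [(1000, 200_000), (1500, 275_000), (2000, 350_000), (2500, 425_000)]

def fit_line(points):
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # Least-squares slope: covariance of x and y divided by variance of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

slope, intercept = fit_line(sales)
estimate = intercept + slope * 1800  # predicted price for an 1,800 sq ft home
print(f"price = {intercept:.0f} + {slope:.0f} * sqft; 1,800 sq ft -> {estimate:.0f}")
```

Production models add many more features and non-linear learners, but the principle is the same: fit parameters to past sales, then evaluate the fitted function on a new property.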

Real Implementation: Zillow’s Zestimate uses supervised learning trained on millions of home sales. While imperfect, the system provides instant valuations, and Zillow reports median error rates of around 2-3% for on-market homes, with larger errors for off-market homes.

Why Supervised Learning Works Here: Abundant historical sales data with actual prices, quantifiable property features, and continuous output values make regression algorithms highly effective.

Real-World Example 4: Credit Card Fraud Detection

The Problem: Financial institutions must identify fraudulent transactions in real-time among millions of legitimate purchases daily.

Supervised Learning Solution:

Training Data: Transaction history labeled as fraudulent or legitimate, including:

- Transaction amount and merchant type

- Location and time

- Distance from cardholder’s usual locations

- Purchase frequency patterns

- Online versus in-person

- Historical fraud patterns

Model Training: Algorithms like XGBoost or Deep Neural Networks learn subtle patterns distinguishing fraud from normal behavior.

Real-Time Detection: Each transaction runs through the model within milliseconds. Suspicious transactions trigger additional verification or blocking.

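Real Implementation: Major card networks like Visa and Mastercard use supervised models to score transactions in real time. Because fraud makes up a tiny fraction of all transactions, headline accuracy figures above 99% say little on their own; these systems are tuned to catch as much fraud as possible while minimizing false positives that inconvenience legitimate customers.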

Why Supervised Learning Works Here: Clear labeled outcomes (confirmed fraud/legitimate), rich transaction features, and measurable cost of errors justify sophisticated supervised approaches.

What is Unsupervised Learning? Discovering Hidden Patterns

Unsupervised learning takes a fundamentally different approach—finding structure in data without predefined labels or correct answers. Think of organizing a large photo collection without any existing categories. You might naturally group images by content, color schemes, or composition without anyone telling you how to categorize them. Unsupervised learning operates similarly, discovering inherent patterns and structures.

The Core Mechanism of Unsupervised Learning

Discovery Process:

- Provide unlabeled data: Input data contains no predetermined categories or correct answers

- Algorithm explores structure: The model identifies patterns, relationships, or groupings naturally present in data

- Reveal hidden insights: Discover clusters, associations, or dimensional structure humans might miss

- Interpret results: Domain experts analyze discovered patterns for business or scientific insights

Key Characteristic: No “teacher” provides correct answers. The algorithm independently discovers what’s meaningful in the data based on inherent structure and statistical properties.

Types of Unsupervised Learning

Clustering: Grouping similar data points together based on feature similarity. Examples include customer segmentation, document organization, or gene expression analysis.

Dimensionality Reduction: Reducing data complexity while preserving important information. Techniques compress high-dimensional data into lower dimensions for visualization or preprocessing.

Association Rule Learning: Discovering interesting relationships between variables. Used for market basket analysis and recommendation systems.

Anomaly Detection: Identifying unusual patterns that don’t conform to expected behavior, useful for fraud detection and system monitoring.

Real-World Example 1: Netflix Viewer Segmentation

The Problem: Netflix serves hundreds of millions of subscribers with diverse tastes. How can they understand viewer preferences without manually categorizing every user?

Unsupervised Learning Solution:

Unlabeled Data: Viewing behavior data without predetermined categories:

- Watch history (titles, completion rates)

- Viewing times and binge-watching patterns

- Genre interactions

- Search behavior

- Rating patterns (when provided)

Clustering Algorithm: K-means clustering or hierarchical methods group users with similar viewing patterns, discovering natural segments like:

- “Action Movie Enthusiasts” who predominantly watch thrillers and action

- “Documentary Bingers” who consume non-fiction content regularly

- “Family Viewers” who watch children’s content and family movies

- “International Content Fans” who prefer foreign language shows
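The grouping step can be sketched with a bare-bones k-means. The six viewers and their two features are hypothetical, and the starting centroids are fixed for reproducibility (real runs usually use random or k-means++ initialization):

```python
# Hypothetical viewers: (share of watch time on action, share on documentaries).
viewers = [(0.9, 0.1), (0.8, 0.2), (0.85, 0.05),
           (0.1, 0.9), (0.2, 0.8), (0.15, 0.95)]

def squared_distance(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

def mean_point(points):
    return tuple(sum(coords) / len(points) for coords in zip(*points))

def k_means(points, centroids, iterations=10):
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: squared_distance(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        centroids = [mean_point(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters

centroids, clusters = k_means(viewers, centroids=[viewers[0], viewers[3]])
for centroid, members in zip(centroids, clusters):
    print(centroid, members)
```

The algorithm only returns groups of similar viewers. Names such as “Action Movie Enthusiasts” are attached afterwards by a person inspecting each cluster.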

Business Application: These discovered segments inform content acquisition, recommendation algorithms, marketing campaigns, and interface personalization—all without Netflix manually defining viewer types beforehand.

Why Unsupervised Learning Works Here: No predefined “correct” way to categorize viewers, natural groupings emerge from behavior patterns, and discovered segments provide actionable business insights.

Real-World Example 2: Retail Customer Segmentation

The Problem: Large retailers want to understand customer diversity to tailor marketing, but manually categorizing millions of customers is impossible.

Unsupervised Learning Solution:

Unlabeled Data: Purchase history and behavior without predetermined segments:

- Purchase frequency and recency

- Average transaction value

- Product categories purchased

- Seasonal buying patterns

- Channel preferences (online/in-store)

- Response to promotions
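Before any clustering can run, raw purchase history has to be turned into one feature row per customer. A small sketch of that step, assuming a hypothetical transaction log of `(customer_id, purchase_date, amount)` tuples and covering only three of the signals listed above:

```python
from collections import defaultdict
from datetime import date

# Hypothetical transaction log: (customer_id, purchase_date, amount).
transactions = [
    ("c1", date(2024, 1, 5), 120.0), ("c1", date(2024, 2, 10), 80.0),
    ("c1", date(2024, 3, 1), 100.0), ("c2", date(2023, 11, 20), 15.0),
    ("c3", date(2024, 2, 25), 40.0), ("c3", date(2024, 2, 28), 60.0),
]
as_of = date(2024, 3, 10)

def customer_features(rows, today):
    by_customer = defaultdict(list)
    for customer, day, amount in rows:
        by_customer[customer].append((day, amount))
    features = {}
    for customer, purchases in by_customer.items():
        last_purchase = max(day for day, _ in purchases)
        features[customer] = {
            "recency_days": (today - last_purchase).days,   # days since last purchase
            "frequency": len(purchases),                    # number of purchases
            "avg_value": sum(a for _, a in purchases) / len(purchases),
        }
    return features

features = customer_features(transactions, as_of)
for customer, row in sorted(features.items()):
    print(customer, row)
```

In practice these columns would be scaled to comparable ranges before clustering, since distance-based algorithms are sensitive to units.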

Clustering Analysis: Algorithms automatically discover distinct customer groups:

- “High-Value Loyalists” – frequent purchasers, high spending, brand loyal

- “Bargain Hunters” – purchase only during sales, price-sensitive

- “Occasional Shoppers” – infrequent purchases, moderate spending

- “New Explorers” – recent customers, diverse product sampling

Marketing Application: Each discovered segment receives tailored campaigns, product recommendations, and promotional strategies based on their behavior patterns, dramatically improving conversion rates.

Real Implementation: Amazon is widely cited as using unsupervised customer segmentation, with fine-grained segments feeding the personalization strategies behind its recommendation engine.

Why Unsupervised Learning Works Here: Customer diversity creates natural groupings, no predetermined “correct” segments exist, and discovered patterns reveal insights humans might overlook.

Real-World Example 3: Genomic Data Analysis

The Problem: Researchers studying gene expression need to identify patterns among thousands of genes across different conditions without knowing what patterns exist.

Unsupervised Learning Solution:

Unlabeled Data: Gene expression measurements across samples:

- Expression levels for thousands of genes

- Measurements from healthy and diseased tissues

- Different developmental stages or treatment conditions

Clustering and Dimensionality Reduction: Algorithms like Principal Component Analysis (PCA) and hierarchical clustering reveal:

- Genes with similar expression patterns (potentially functionally related)

- Sample groupings (disease subtypes not previously recognized)

- Major sources of variation in the dataset
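To show what "major sources of variation" means in PCA terms, the sketch below finds the first principal component of a tiny, made-up expression matrix. It uses power iteration on the covariance matrix instead of a full eigendecomposition, which is a simplification chosen to keep the example dependency-free:

```python
import math

# Hypothetical expression matrix: rows are samples, columns are three genes.
# Gene 2 is roughly twice gene 1; gene 3 barely varies.
samples = [
    [2.0, 4.1, 1.0],
    [3.0, 6.0, 1.1],
    [4.0, 7.9, 0.9],
    [5.0, 10.0, 1.0],
]

def center(rows):
    n = len(rows)
    means = [sum(col) / n for col in zip(*rows)]
    return [[v - m for v, m in zip(row, means)] for row in rows]

def covariance(rows):
    # Sample covariance matrix of already-centered rows.
    n, d = len(rows), len(rows[0])
    return [[sum(r[i] * r[j] for r in rows) / (n - 1) for j in range(d)]
            for i in range(d)]

def first_component(cov, iterations=200):
    # Power iteration: repeated multiplication converges to the eigenvector
    # with the largest eigenvalue, i.e. the direction of greatest variance.
    vec = [1.0] * len(cov)
    for _ in range(iterations):
        vec = [sum(c * v for c, v in zip(row, vec)) for row in cov]
        norm = math.sqrt(sum(v * v for v in vec))
        vec = [v / norm for v in vec]
    return vec

centered = center(samples)
pc1 = first_component(covariance(centered))
# Project each sample onto the first component: one number per sample.
scores = [sum(v * w for v, w in zip(row, pc1)) for row in centered]
print([round(v, 3) for v in pc1], [round(s, 2) for s in scores])
```

The component weights genes 1 and 2 heavily and gene 3 hardly at all, so three measurements per sample compress to a single score with little information lost.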

Scientific Discovery: These unsupervised analyses have identified cancer subtypes, revealed drug mechanism insights, and discovered gene regulatory networks—all without predefined hypotheses about what to find.

Real Impact: The Cancer Genome Atlas project used unsupervised clustering to group tumors by molecular profile rather than tissue of origin, showing that some cancers from different organs share molecular subtypes and informing research into targeted therapies.

Why Unsupervised Learning Works Here: Biological systems are complex with unknown structure, letting data reveal its own organization yields discoveries, and high dimensionality requires automated pattern detection.

Real-World Example 4: Anomaly Detection in Network Security

The Problem: Cybersecurity systems must identify unusual behavior indicating potential threats, but new attack types constantly emerge that don’t match known patterns.

Unsupervised Learning Solution:

Unlabeled Data: Network traffic and system logs without predetermined “normal” versus “anomaly” labels:

- Connection patterns and data volumes

- Access times and user behaviors

- System resource usage

- Communication protocols

Anomaly Detection Algorithms: Methods like Isolation Forests or Autoencoders learn what “normal” looks like from the data itself, then flag deviations:

- Unusual data exfiltration patterns

- Abnormal login locations or times

- Unexpected system resource consumption

- Novel communication patterns
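Isolation Forests and autoencoders are too involved for a short listing, so the sketch below swaps in a much simpler stand-in: a robust z-score based on the median and the median absolute deviation (MAD). It shares the key property of learning "normal" from unlabeled data. The traffic values and the 3.5 threshold are assumptions:

```python
import statistics

# Hypothetical outbound traffic per hour (MB) for one host; no labels attached.
traffic_mb = [52, 48, 50, 47, 55, 49, 51, 53, 50, 480, 46, 54]

def flag_anomalies(values, threshold=3.5):
    median = statistics.median(values)
    # Median absolute deviation: a spread estimate that outliers barely affect.
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        return []  # no measurable spread, so nothing can be scored
    flagged = []
    for index, value in enumerate(values):
        # 0.6745 rescales the MAD so the score is comparable to a z-score
        # when the underlying data is roughly normal.
        robust_z = 0.6745 * (value - median) / mad
        if abs(robust_z) > threshold:
            flagged.append((index, value))
    return flagged

anomalies = flag_anomalies(traffic_mb)
print(anomalies)
```

No example of an attack was ever supplied: the 480 MB hour stands out only because it deviates from the bulk of the data.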

Security Application: The system automatically identifies suspicious activity without requiring labeled examples of every possible attack type, adapting to new threats.

Real Implementation: Companies like Darktrace use unsupervised learning for network security, detecting zero-day attacks and insider threats by recognizing deviations from learned normal behavior patterns.

Why Unsupervised Learning Works Here: New threats constantly emerge, labeling all attack types is impossible, and the system must detect never-before-seen anomalies.

Real-World Example 5: Document Organization and Topic Modeling

The Problem: Organizations accumulate millions of documents, emails, and reports that need organization without manually categorizing each one.

Unsupervised Learning Solution:

Unlabeled Data: Document collections without predetermined topics or categories:

- Text content from reports, emails, articles

- No predefined subject classifications

- Documents spanning diverse subjects

Topic Modeling: Algorithms like Latent Dirichlet Allocation (LDA) automatically discover topics:

- Identify words that frequently co-occur

- Group documents by discovered topics

- Reveal thematic structure across collections

Discovered Topics Example: Analyzing news articles might reveal:

- Topic 1: Politics (words: election, government, policy, vote)

- Topic 2: Technology (words: software, startup, innovation, digital)

- Topic 3: Sports (words: game, team, score, championship)

- Topic 4: Finance (words: market, stocks, investment, economy)
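Full LDA is beyond a short listing, but the signal it builds on, words that tend to appear in the same documents, is easy to illustrate. The sketch below only counts co-occurring word pairs in six invented headlines; it is not an implementation of LDA:

```python
from collections import Counter
from itertools import combinations

# Hypothetical headlines standing in for a document collection.
documents = [
    "election vote government policy",
    "government policy vote",
    "team game score championship",
    "game team score",
    "market stocks investment",
    "stocks market economy investment",
]

def co_occurrence_counts(docs):
    counts = Counter()
    for doc in docs:
        # Sort so each pair has one canonical order; use a set so a word
        # repeated within a document is counted once.
        words = sorted(set(doc.split()))
        counts.update(combinations(words, 2))
    return counts

pair_counts = co_occurrence_counts(documents)
frequent = sorted(pair for pair, n in pair_counts.items() if n >= 2)
for pair in frequent:
    print(pair, pair_counts[pair])
```

Every frequent pair stays within one theme (politics, sports, or finance), and no pair crosses themes. Topic models exploit the same regularity, but probabilistically and at much larger scale.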

Business Application: Law firms use unsupervised topic modeling to organize case files, news organizations to categorize content, and researchers to explore literature—all without manual classification.

Why Unsupervised Learning Works Here: Manual categorization is prohibitively expensive, topics naturally emerge from word patterns, and discovered organization provides value without predefined structure.

Key Differences Between Supervised and Unsupervised Learning

Understanding the practical distinctions helps you choose the right approach for your application.

Difference 1: Training Data Requirements

Supervised Learning:

- Requires labeled training data with correct answers

- Labels must be accurate and representative

- Labeling is expensive and time-consuming

- Quality depends heavily on label accuracy

- Example: 10,000 emails each marked spam/legitimate

Unsupervised Learning:

- Works with unlabeled data

- No manual labeling required

- Can process raw data immediately

- Results depend on feature choice and data quality rather than label accuracy

- Example: 10,000 emails with no classification

Practical Implication: If obtaining labeled data is expensive, difficult, or impossible, unsupervised learning (or a semi-supervised approach) may be the only practical option.

Difference 2: Objectives and Goals

Supervised Learning:

- Predict specific outcomes on new data

- Classify into predefined categories

- Estimate numerical values accurately

- Minimize prediction errors

- Clear success metrics (accuracy, precision, recall)

Unsupervised Learning:

- Discover hidden patterns and structure

- Group similar items without predefined categories

- Reduce data complexity

- Find associations and anomalies

- Success is more subjective and exploratory

Practical Implication: Choose supervised learning for prediction tasks with clear target variables, unsupervised learning for exploratory analysis and pattern discovery.

Difference 3: Evaluation Methods

Supervised Learning:

- Compare predictions against known correct answers

- Use test datasets with labels held back during training

- Calculate objective metrics: accuracy, F1-score, RMSE

- Clear quantitative performance measures

- Easy to determine if model is “good enough”

Unsupervised Learning:

- No ground truth for automatic evaluation

- Often requires subjective assessment by domain experts

- Internal metrics (silhouette score, within-cluster variance)

- Success depends on usefulness of discoveries

- Harder to objectively measure quality

Practical Implication: Supervised learning provides clearer performance feedback, while unsupervised learning requires more interpretation and domain expertise.
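The contrast shows up directly in code. Supervised metrics need true labels, while an internal metric such as the silhouette score is computed from the data and the cluster assignments alone. Both functions below are minimal sketches on made-up inputs; the silhouette function assumes at least two clusters:

```python
import math

def classification_metrics(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

def silhouette_score(points, labels):
    # Mean over points of (b - a) / max(a, b), where a is the mean distance
    # to the point's own cluster and b the mean distance to the nearest other one.
    scores = []
    for i, p in enumerate(points):
        same = [math.dist(p, q) for j, q in enumerate(points)
                if labels[j] == labels[i] and j != i]
        if not same:
            scores.append(0.0)  # convention for single-point clusters
            continue
        a = sum(same) / len(same)
        b = min(
            sum(math.dist(p, q) for j, q in enumerate(points) if labels[j] == other)
            / labels.count(other)
            for other in set(labels) if other != labels[i]
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

# Supervised: hypothetical test set with 2 positives among 20 examples,
# scored against a model that always predicts the negative class.
y_true = [1, 1] + [0] * 18
metrics = classification_metrics(y_true, [0] * 20)

# Unsupervised: the same four points under a sensible and a poor grouping.
points = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)]
good = silhouette_score(points, [0, 0, 1, 1])
bad = silhouette_score(points, [0, 1, 0, 1])
print(metrics, round(good, 2), round(bad, 2))
```

The always-negative model reaches 90% accuracy with zero recall, which is why accuracy alone is a weak measure on imbalanced data. The silhouette score separates the good grouping from the bad one, but it cannot say whether either grouping is useful to the business.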

Difference 4: Use Cases and Applications

Supervised Learning Best For:

- Spam detection and content moderation

- Medical diagnosis and disease prediction

- Credit scoring and fraud detection

- Price estimation and demand forecasting

- Image and speech recognition

- Customer churn prediction

Unsupervised Learning Best For:

- Customer segmentation without predefined groups

- Anomaly and outlier detection

- Data exploration and visualization

- Dimensionality reduction for preprocessing

- Recommendation systems (collaborative filtering)

- Market basket analysis

Practical Implication: The nature of your problem—prediction versus exploration—primarily determines which approach fits best.

Difference 5: Computational Complexity and Cost

Supervised Learning:

- Computationally intensive during training

- Requires significant labeled data acquisition costs

- Fast inference after training

- Clear stopping criteria (validation performance)

- Human labeling is often the bottleneck

Unsupervised Learning:

- Can be computationally expensive (especially clustering large datasets)

- No labeling costs

- May require multiple iterations to find meaningful structure

- Less clear when to stop optimizing

- Computational resources are the main constraint

Practical Implication: Consider total project cost including data collection, not just algorithmic complexity.

Difference 6: Interpretability and Explainability

Supervised Learning:

- Predictions tied to specific labels or values

- Can analyze feature importance for predictions

- Errors are clearly identifiable (wrong predictions)

- Decision boundaries can be visualized

- Easier to explain to stakeholders

Unsupervised Learning:

- Discovered patterns require interpretation

- Results may be ambiguous or subjective

- Multiple valid interpretations possible

- Requires domain expertise to extract insights

- Harder to explain business value immediately

Practical Implication: If stakeholder buy-in requires clear explanations, supervised learning’s interpretability advantages matter significantly.

Supervised Learning Algorithms: Popular Methods

Understanding common supervised learning algorithms helps you select appropriate techniques for your projects.

Classification Algorithms

Logistic Regression: Despite the name, this classifies data into categories using probability-based decision boundaries. Simple, interpretable, and effective for binary classification like spam detection or disease diagnosis.

Decision Trees: Create flowchart-like structures making sequential decisions based on features. Highly interpretable—you can literally draw the decision process. Used extensively in credit approval and medical diagnosis.

Random Forests: Combine many decision trees, each trained on a bootstrap sample of the data and a random subset of features, then average or vote on their predictions. More accurate and robust than individual trees, popular for fraud detection and predictive maintenance.

Support Vector Machines (SVM): Find optimal boundaries separating classes in high-dimensional space. Effective for text classification, image recognition, and bioinformatics applications.

Neural Networks: Deep learning models with multiple layers learning complex patterns. Power modern applications like image recognition, natural language processing, and autonomous vehicles.

K-Nearest Neighbors (KNN): Classify based on majority vote of K nearest training examples. Simple but effective for recommendation systems and pattern recognition.
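KNN is simple enough to write out in full. A minimal sketch with invented two-feature points, Euclidean distance, and an assumed default of k = 3:

```python
import math
from collections import Counter

# Hypothetical labeled points: (features, label).
training = [((1.0, 1.0), "A"), ((1.2, 0.8), "A"), ((0.9, 1.3), "A"),
            ((4.0, 4.2), "B"), ((3.8, 4.0), "B"), ((4.3, 3.9), "B")]

def knn_predict(query, examples, k=3):
    # Rank all training examples by distance to the query point.
    by_distance = sorted(examples, key=lambda ex: math.dist(query, ex[0]))
    # Majority vote among the k closest labels.
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

near_a = knn_predict((1.1, 1.0), training)
near_b = knn_predict((4.1, 4.0), training)
print(near_a, near_b)
```

There is no training phase at all: the "model" is the stored data, so prediction cost grows with the size of the training set.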

Regression Algorithms

Linear Regression: Model relationships between features and continuous outcomes using linear equations. Widely used for price prediction, sales forecasting, and risk assessment.

Polynomial Regression: Extend linear regression to capture non-linear relationships. Useful when relationships between variables follow curves rather than straight lines.

Ridge and Lasso Regression: Regularized versions preventing overfitting by penalizing complex models. Essential for high-dimensional datasets with many features.
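For a single feature, both penalties have closed-form solutions, which makes their effect easy to see. The sketch below assumes the objective is the sum of squared errors plus `lam * slope**2` (ridge) or `lam * abs(slope)` (lasso), with an unpenalized intercept; the data is made up:

```python
def centered_sums(xs, ys):
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy, sxx

def ridge_slope(xs, ys, lam):
    # Ridge shrinks the slope smoothly toward zero but never reaches it.
    sxy, sxx = centered_sums(xs, ys)
    return sxy / (sxx + lam)

def lasso_slope(xs, ys, lam):
    # Lasso soft-thresholds: a large enough penalty sets the slope to exactly zero.
    sxy, sxx = centered_sums(xs, ys)
    shrunk = max(abs(sxy) - lam / 2, 0.0)
    return (shrunk if sxy >= 0 else -shrunk) / sxx

xs, ys = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]
ols = ridge_slope(xs, ys, lam=0.0)        # no penalty: ordinary least squares
ridge = ridge_slope(xs, ys, lam=5.0)
lasso_small = lasso_slope(xs, ys, lam=4.0)
lasso_large = lasso_slope(xs, ys, lam=20.0)
print(ols, ridge, lasso_small, lasso_large)
```

The zeroing behavior is why Lasso doubles as a feature selector on high-dimensional data, while Ridge keeps every feature with a reduced weight.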

Gradient Boosting: Build an ensemble sequentially, with each new weak model (usually a shallow tree) trained to correct the errors of the ensemble so far. XGBoost and LightGBM implementations are mainstays of Kaggle competitions and production systems for tabular data.

Neural Networks for Regression: Deep learning architectures predict continuous values. Used in time series forecasting, energy demand prediction, and scientific modeling.

Unsupervised Learning Algorithms: Popular Methods

Exploring unsupervised learning algorithms reveals diverse approaches to pattern discovery.

Clustering Algorithms

K-Means Clustering: Partition data into K groups by minimizing within-cluster variance. Fast, scalable, and widely used for customer segmentation, image compression, and anomaly detection.

Hierarchical Clustering: Build nested cluster hierarchies that can be visualized as dendrograms. Useful when cluster count is unknown or hierarchical relationships matter, common in genomics and taxonomy.

DBSCAN (Density-Based Spatial Clustering): Identify clusters of arbitrary shape based on density. Effective for spatial data, outlier detection, and datasets with noise.

Gaussian Mixture Models: Probabilistic approach assuming the data comes from a mixture of Gaussian distributions. Used in image segmentation, speech recognition, and statistical modeling.

Mean Shift: Iteratively shift points toward highest density regions. Effective for image segmentation and object tracking without specifying cluster count.

Dimensionality Reduction Algorithms

Principal Component Analysis (PCA): Transform correlated features into uncorrelated principal components. Widely used for data visualization, noise reduction, and preprocessing before supervised learning.

t-SNE (t-Distributed Stochastic Neighbor Embedding): Visualize high-dimensional data in 2D or 3D while preserving local structure. Popular for exploring complex datasets like gene expression or customer behavior.

UMAP (Uniform Manifold Approximation and Projection): Modern alternative to t-SNE, often faster and better at preserving global structure. Increasingly popular for single-cell genomics and high-dimensional visualization.

Autoencoders: Neural networks learning compressed representations of data. Used for dimensionality reduction, denoising, and anomaly detection in images and time series.

Association Rule Learning

Apriori Algorithm: Discover frequent itemsets and association rules. Classic method for market basket analysis revealing product purchase patterns.

FP-Growth: More efficient alternative to Apriori for large datasets. Used in recommendation systems and pattern mining.
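The two quantities behind association rules, support and confidence, can be computed directly. The sketch below covers only the first two levels of an Apriori-style search (single items, then pairs built from frequent items) on five invented baskets; it is not a full Apriori or FP-Growth implementation:

```python
from itertools import combinations

# Hypothetical shopping baskets.
baskets = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "jam"},
    {"milk", "eggs"},
    {"bread", "butter", "eggs"},
]

def support(itemset, transactions):
    # Fraction of baskets that contain every item in the itemset.
    return sum(itemset <= basket for basket in transactions) / len(transactions)

def confidence(antecedent, consequent, transactions):
    # Of the baskets containing the antecedent, the fraction that also
    # contain the consequent.
    return (support(antecedent | consequent, transactions)
            / support(antecedent, transactions))

def frequent_pairs(transactions, min_support):
    items = sorted(set().union(*transactions))
    # Apriori pruning: a pair can only be frequent if both of its items are.
    frequent_items = [i for i in items if support({i}, transactions) >= min_support]
    result = {}
    for pair in combinations(frequent_items, 2):
        s = support(set(pair), transactions)
        if s >= min_support:
            result[pair] = s
    return result

pairs = frequent_pairs(baskets, min_support=0.4)
butter_to_bread = confidence({"butter"}, {"bread"}, baskets)
bread_to_butter = confidence({"bread"}, {"butter"}, baskets)
print(pairs, butter_to_bread, bread_to_butter)
```

Confidence is directional: here every basket with butter also has bread, but only three of the four bread baskets have butter.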

Anomaly Detection Algorithms

Isolation Forest: Identify anomalies by isolating observations through random partitioning. Effective for fraud detection, system monitoring, and quality control.

One-Class SVM: Learn boundary around normal data, flagging outliers. Used in novelty detection and monitoring applications.

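Local Outlier Factor (LOF): Detect anomalies by comparing each point’s local density to that of its neighbors. Effective when density varies across the dataset, where a single global distance threshold would miss outliers near sparse regions or over-flag points in them.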

Semi-Supervised Learning: Bridging Both Worlds

Real-world scenarios often fall between fully labeled and completely unlabeled data, giving rise to semi-supervised learning that combines both approaches.

What is Semi-Supervised Learning?

Semi-supervised learning uses small amounts of labeled data alongside large amounts of unlabeled data. This hybrid approach leverages the structure in unlabeled data while maintaining supervised learning’s predictive power.

Key Principle: Labeled examples guide learning, while abundant unlabeled data helps identify underlying data structure and improves generalization.

Real-World Example: Medical Image Analysis

The Challenge: Labeling medical images requires expensive expert radiologist time. A hospital might have 100,000 X-rays but only 1,000 with expert diagnoses.

Semi-Supervised Solution:

- Train initial model on 1,000 labeled images (supervised)

- Use model to generate pseudo-labels for unlabeled images

- Include high-confidence pseudo-labeled images in training

- Iteratively refine model using both labeled and pseudo-labeled data
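The pseudo-labeling loop can be sketched with a nearest-centroid classifier standing in for the image model. The 2-D points (imagine them as image embeddings), the confidence formula, and the 0.6 threshold are all assumptions made for illustration:

```python
import math

# Hypothetical 2-D feature vectors standing in for image embeddings.
labeled = [((0.0, 0.0), "normal"), ((0.2, 0.1), "normal"),
           ((3.0, 3.0), "abnormal"), ((3.1, 2.8), "abnormal")]
unlabeled = [(0.1, 0.3), (2.9, 3.2), (1.5, 1.6), (0.3, 0.0), (3.3, 3.1)]

def centroids(examples):
    groups = {}
    for x, label in examples:
        groups.setdefault(label, []).append(x)
    return {label: tuple(sum(c) / len(pts) for c in zip(*pts))
            for label, pts in groups.items()}

def predict_with_confidence(x, model):
    # Assumes two or more classes with distinct centroids.
    ranked = sorted((math.dist(x, c), label) for label, c in model.items())
    (nearest, label), (second, _) = ranked[0], ranked[1]
    # 0 when equidistant from the two closest centroids, near 1 when far closer to one.
    return label, 1.0 - nearest / second

def self_train(labeled, unlabeled, threshold=0.6, rounds=3):
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(rounds):
        model = centroids(labeled)                # train on current labels
        confident, remaining = [], []
        for x in pool:                            # generate pseudo-labels
            label, conf = predict_with_confidence(x, model)
            (confident if conf >= threshold else remaining).append((x, label))
        if not confident:
            break                                 # nothing new to learn from
        labeled.extend(confident)                 # keep only high-confidence ones
        pool = [x for x, _ in remaining]
    return centroids(labeled), pool

final_model, still_unlabeled = self_train(labeled, unlabeled)
print(final_model, still_unlabeled)
```

The ambiguous point midway between the classes is never pseudo-labeled. The confidence threshold matters for exactly this reason: wrong pseudo-labels would be fed back into training and reinforce the model’s own mistakes.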

Benefit: In favorable cases, performance can approach that of a fully supervised model trained on 10,000+ labeled images, at a fraction of the labeling cost.

Real-World Example: Natural Language Processing

The Challenge: Creating labeled training data for language tasks (sentiment analysis, named entity recognition) requires human annotators reading and labeling thousands of documents.

Semi-Supervised Solution:

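Modern language models like BERT follow a closely related pretrain-then-fine-tune pattern (the pre-training stage is usually described as self-supervised, since the training signal comes from the text itself):

- Pre-train on a massive unlabeled text corpus by predicting masked words (self-supervised)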

- Learn general language representations

- Fine-tune on small labeled dataset for specific task (supervised)

Benefit: Transfer learning from unlabeled data dramatically reduces labeled data requirements for high performance.

When to Use Semi-Supervised Learning

Ideal Scenarios:

- Labeling is expensive but unlabeled data is abundant

- Initial supervised model shows promise but needs improvement

- Domain has natural progression from unsupervised to supervised learning

- Budget constraints limit labeled data collection

Examples:

- Speech recognition (hours of audio, limited transcriptions)

- Web page classification (billions of pages, limited labels)

- Protein function prediction (massive genomic data, limited experimental validation)

Choosing Between Supervised and Unsupervised Learning

Selecting the right approach depends on your specific problem, available data, and business objectives.

Decision Framework

Choose Supervised Learning When:

- ✅ You have labeled training data or can acquire it cost-effectively

- ✅ Your goal is predicting specific outcomes on new data

- ✅ Clear evaluation metrics exist for success

- ✅ The problem fits classification or regression frameworks

- ✅ Accurate predictions justify labeling costs

- ✅ Historical examples with outcomes are available

Choose Unsupervised Learning When:

- ✅ Labeled data is unavailable, expensive, or impossible to obtain

- ✅ Your goal is exploring data and discovering patterns

- ✅ You want to understand data structure before more specific analysis

- ✅ Defining relevant categories beforehand is difficult

- ✅ The problem involves grouping, compression, or anomaly detection

- ✅ Exploratory analysis will inform future supervised approaches

Consider Semi-Supervised Learning When:

- ✅ You have small labeled datasets and large unlabeled datasets

- ✅ Labeling costs are prohibitive for desired dataset size

- ✅ Pre-trained models exist for transfer learning

- ✅ Initial supervised performance is insufficient

- ✅ Domain allows iterative label propagation

Practical Considerations

Budget Constraints: Limited budgets favor unsupervised learning (no labeling costs) or semi-supervised learning (minimal labeling).

Time Constraints: Supervised learning provides faster paths to production if labeled data exists; unsupervised learning requires more exploratory iteration.

Domain Expertise: Interpreting unsupervised learning results requires deep domain knowledge; supervised learning is more accessible to technical teams without domain expertise.

Data Availability: Let your data guide the choice—abundant labels enable supervised learning, sparse labels suggest unsupervised or semi-supervised approaches.

Business Goals: Prediction and automation favor supervised learning; insights and discovery favor unsupervised learning.

Hybrid Approaches in Practice

Many production systems combine both approaches strategically:

Two-Stage Systems:

- Use unsupervised learning for initial data exploration and feature engineering

- Apply supervised learning for final prediction using discovered features

Example: Customer churn prediction might first cluster customers (unsupervised), then train churn classifiers for each cluster (supervised).

Iterative Refinement:

- Start with unsupervised learning to understand data

- Develop hypotheses based on discoveries

- Collect targeted labeled data for hypothesis testing

- Build supervised models for validated patterns

Example: Fraud detection systems use unsupervised anomaly detection to identify suspicious patterns, then manual review creates labels for supervised learning refinement.

Real-World Success Stories

Examining complete applications illustrates how organizations deploy these techniques.

Success Story 1: Spotify’s Music Recommendation

Challenge: Recommend songs to users from a catalog of 70+ million tracks, considering diverse tastes and discovery goals.

Solution Combining Both Approaches:

Unsupervised Learning:

- Cluster songs by audio features (tempo, key, loudness, danceability)

- Identify listening patterns and user segments

- Discover emerging music trends without labels

Supervised Learning:

- Predict whether user will like specific songs based on listening history

- Learn from explicit feedback (likes, skips, playlist additions)

- Forecast playlist completion likelihood

Result: Highly personalized recommendations blending exploration (unsupervised discoveries) and exploitation (supervised predictions), contributing to Spotify’s industry-leading engagement.

Success Story 2: Healthcare Diagnosis Support

Challenge: Assist physicians in diagnosing rare diseases where labeled cases are scarce.

Solution Combining Both Approaches:

Unsupervised Learning:

- Cluster patients by symptom profiles

- Identify unusual patient presentations (anomaly detection)

- Reduce high-dimensional medical test results for visualization

Supervised Learning:

- Predict specific diseases based on symptoms and tests

- Classify medical images (X-rays, MRIs, pathology slides)

- Forecast patient risk scores and treatment outcomes

Result: Clinical decision support systems combining both approaches assist physicians in diagnosis, achieving accuracy comparable to specialists in certain domains while flagging unusual cases for deeper investigation.

Success Story 3: E-commerce Optimization

Challenge: Amazon applies machine learning to optimize many aspects of its platform, operating across a product catalog and customer base of enormous scale.

Solution Combining Both Approaches:

Unsupervised Learning:

- Customer segmentation for targeted marketing

- Product clustering for catalog organization

- Anomaly detection for fraud and abuse

- Trend discovery in purchase patterns

Supervised Learning:

- Product demand forecasting for inventory

- Delivery time prediction

- Click-through rate prediction for search results

- Customer lifetime value estimation

- Review helpfulness classification

Result: A seamless shopping experience in which machine learning optimizes everything from search results to delivery logistics. An often-cited industry estimate attributes roughly 35% of revenue to recommendation systems built on these techniques.

Common Challenges and Solutions

Understanding typical obstacles helps you avoid pitfalls in your machine learning projects.

Challenge 1: Insufficient Labeled Data (Supervised Learning)

Problem: Supervised learning requires substantial labeled training data, but labeling is expensive and time-consuming.

Solutions:

- Active Learning: Strategically select the most informative examples for labeling, reducing total labeling needs

- Transfer Learning: Start with pre-trained models from similar domains and fine-tune them on the limited labels available

- Data Augmentation: Artificially expand training sets through transformations (rotating images, paraphrasing text)

- Semi-Supervised Learning: Leverage abundant unlabeled data alongside limited labels

- Crowdsourcing: Use platforms like Amazon Mechanical Turk for cost-effective labeling
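
As one illustration of active learning, the sketch below implements uncertainty sampling for a binary classifier: from an unlabeled pool, it picks the examples whose predicted probability is closest to 0.5, since those are the ones the current model is least sure about. The function name `select_for_labeling`, the example IDs, and the probabilities are assumptions for this sketch. In practice the probabilities would come from whatever model is being trained.

```python
def select_for_labeling(pool_probs, k):
    """Pick the k pool items whose predicted P(positive) is closest to 0.5.

    pool_probs maps an example id to the current model's predicted probability.
    """
    return sorted(pool_probs, key=lambda item: abs(pool_probs[item] - 0.5))[:k]


# Hypothetical model outputs for five unlabeled examples.
pool_probs = {"a": 0.97, "b": 0.52, "c": 0.10, "d": 0.45, "e": 0.80}

to_label = select_for_labeling(pool_probs, k=2)
print(to_label)  # the two examples the model is least certain about
```

After the selected examples are labeled, the model is retrained and the selection step repeats, so each labeling round is spent where it is expected to help most.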

Challenge 2: Interpreting Unsupervised Results

Problem: Unsupervised learning discoveries lack inherent meaning—clusters and patterns require interpretation.

Solutions:

- Domain Expert Involvement: Involve subject matter experts in interpreting discovered patterns

- Visualization: Use dimensionality reduction techniques to visualize and understand structure

- Statistical Validation: Apply statistical tests to verify discovered patterns aren’t random

- Business Metrics: Connect discoveries to business KPIs to demonstrate value

- Iterative Refinement: Treat initial results as hypotheses and validate them through additional analysis

Challenge 3: Overfitting in Supervised Learning

Problem: Models memorize training data rather than learning generalizable patterns, performing poorly on new data.

Solutions:

- Cross-Validation: Use techniques like k-fold cross-validation to assess generalization

- Regularization: Apply penalties for model complexity (L1/L2 regularization)

- More Training Data: Larger datasets reduce overfitting risk

- Feature Selection: Remove irrelevant features that enable memorization

- Ensemble Methods: Combine multiple models to reduce overfitting tendency

- Early Stopping: Monitor validation performance and stop training once it stops improving or begins to degrade
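
To make k-fold cross-validation concrete, here is a minimal sketch in plain Python. The helper `k_fold_indices`, the toy targets `ys`, and the "predict the training mean" baseline are all assumptions chosen to keep the example short. A real project would plug in its own model and would normally shuffle the data before splitting.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k contiguous folds."""
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, val
        start += size


# Hypothetical regression targets; the "model" just predicts the training mean.
ys = [3, 5, 4, 6, 5, 4, 7, 5, 6, 4]

fold_errors = []
for train, val in k_fold_indices(len(ys), k=3):
    prediction = sum(ys[i] for i in train) / len(train)
    mse = sum((ys[i] - prediction) ** 2 for i in val) / len(val)
    fold_errors.append(mse)

# Averaging over folds gives a steadier generalization estimate than one split.
print(sum(fold_errors) / len(fold_errors))
```

Every example is used for validation exactly once and never appears in its own training fold, which is what makes the averaged score a fair check against memorization.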

Challenge 4: Choosing Optimal Cluster Count (Unsupervised Learning)

Problem: Many clustering algorithms require the number of clusters to be specified in advance, but the optimal count is usually unknown.

Solutions:

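- Elbow Method: Plot within-cluster variance against the number of clusters and look for the “elbow” where adding more clusters stops yielding large improvements

- Silhouette Analysis: Measure how similar each point is to its own cluster compared with the nearest neighboring cluster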

- Domain Knowledge: Use business understanding to guide reasonable cluster counts

- Hierarchical Clustering: Explore dendrograms to identify natural cut points

- Multiple Values: Try several values and evaluate the business usefulness of each
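
The elbow method can be illustrated with a small, self-contained sketch. It assumes one-dimensional data with three visually obvious groups, and a deliberately simple k-means with deterministic starting points. The data values and the helper names `kmeans_1d` and `within_cluster_ss` are hypothetical. Real data is rarely this clean, so the bend is usually judged from a plot rather than read off a list.

```python
def kmeans_1d(values, k, iters=25):
    """Tiny 1-D k-means; returns (centroids, groups) after a fixed number of passes."""
    ordered = sorted(values)
    n = len(ordered)
    # Deterministic start: one centroid from the middle of each of k equal slices.
    centroids = [ordered[(2 * i + 1) * n // (2 * k)] for i in range(k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for v in values:
            closest = min(range(k), key=lambda c: abs(v - centroids[c]))
            groups[closest].append(v)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [sum(g) / len(g) if g else centroids[i] for i, g in enumerate(groups)]
    return centroids, groups


def within_cluster_ss(values, k):
    """Sum of squared distances from each point to its cluster centroid."""
    centroids, groups = kmeans_1d(values, k)
    return sum((v - c) ** 2 for c, g in zip(centroids, groups) for v in g)


# Hypothetical data with three well-separated groups.
data = [1, 2, 3, 10, 11, 12, 20, 21, 22]

wcss = {k: within_cluster_ss(data, k) for k in range(1, 5)}
for k, value in wcss.items():
    print(k, round(value, 2))
```

On this data the within-cluster sum of squares falls sharply up to k = 3 and barely moves at k = 4, so the elbow sits at three clusters.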

Challenge 5: Class Imbalance (Supervised Learning)

Problem: When one class dominates (99% legitimate emails, 1% spam), a model can score 99% accuracy by always predicting the majority class while ignoring the minority class entirely.

Solutions:

- Resampling: Oversample minority class or undersample majority class

- Synthetic Data: Generate synthetic minority examples (SMOTE algorithm)

- Cost-Sensitive Learning: Assign higher penalties to minority class misclassification

- Anomaly Detection: Reframe the task by treating the rare class as an anomaly detection problem

- Ensemble Methods: Use ensembles adapted to imbalance, such as Random Forests trained with class weights or balanced bootstrap samples
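
Two of these remedies, cost-sensitive weighting and random oversampling, can be sketched with the standard library alone. The weighting rule below (total samples divided by number of classes times class count) is one common heuristic, not the only option. The function names, the toy rows, and the 990-to-10 split are assumptions for illustration.

```python
import random
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class inversely to its frequency: n / (n_classes * count)."""
    counts = Counter(labels)
    n, n_classes = len(labels), len(counts)
    return {cls: n / (n_classes * count) for cls, count in counts.items()}


def oversample_minority(rows, labels, seed=0):
    """Duplicate randomly chosen minority rows until every class matches the largest."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for cls, count in counts.items():
        pool = [r for r, y in zip(rows, labels) if y == cls]
        out_rows.extend(rng.choices(pool, k=target - count))
        out_labels.extend([cls] * (target - count))
    return out_rows, out_labels


# Hypothetical dataset: 990 legitimate emails (0) and 10 spam emails (1).
labels = [0] * 990 + [1] * 10
rows = [[i] for i in range(len(labels))]  # placeholder feature rows

weights = balanced_class_weights(labels)
new_rows, new_labels = oversample_minority(rows, labels)
print(weights[1], Counter(new_labels))
```

Resampling should be applied to the training split only. Oversampling before the train/test split leaks duplicated minority examples into the evaluation data and inflates the reported scores.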

Future Trends in Supervised and Unsupervised Learning

Machine learning continues evolving rapidly, with emerging trends reshaping both approaches.

Self-Supervised Learning

Modern AI increasingly relies on self-supervised learning, which creates supervised tasks from unlabeled data. Language models such as GPT are trained to predict the next word in a sequence, so the supervision signal is generated automatically from the text itself, enabling learning from massive unlabeled corpora.

Impact: Blurs the distinction between supervised and unsupervised learning, enabling better performance with less manual labeling.
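
The way labels are created automatically from raw text can be shown in a few lines. This is a simplified sketch: it splits on whitespace instead of using a real tokenizer, and the function name `next_word_pairs` and the `context_size` parameter are assumptions for illustration.

```python
def next_word_pairs(text, context_size=3):
    """Turn raw text into (context, next_word) training pairs with no manual labels."""
    tokens = text.lower().split()
    return [
        (tokens[i - context_size:i], tokens[i])
        for i in range(context_size, len(tokens))
    ]


pairs = next_word_pairs("the cat sat on the mat")
for context, target in pairs:
    print(context, "->", target)
```

Each pair looks exactly like a supervised training example, with the context as input and the following word as the label, yet no person annotated anything.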

Few-Shot and Zero-Shot Learning

Advanced models learn new tasks from minimal examples (few-shot) or even task descriptions alone (zero-shot), reducing supervised learning’s data requirements dramatically.

Application: Deploy models to new domains without expensive data collection and labeling.

Automated Machine Learning (AutoML)

Tools automatically select algorithms, tune parameters, and engineer features, making both supervised and unsupervised learning more accessible to non-experts.

Impact: Democratizes machine learning, allowing business analysts and domain experts to build effective models without deep technical expertise.

Explainable AI (XAI)

Growing emphasis on interpretable models and explanation methods helps users understand both supervised predictions and unsupervised discoveries.

Importance: Builds trust, enables debugging, and satisfies regulatory requirements in high-stakes domains like healthcare and finance.

Federated Learning

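Federated learning trains models across decentralized data sources without centralizing sensitive information. Each participant trains on its own data and shares only model updates, which are aggregated into a shared model, enabling supervised learning while helping preserve privacy.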

Application: Healthcare institutions collaborate on diagnostic models without sharing patient data; smartphones improve on-device predictions without uploading personal information.
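
The aggregation step can be sketched as a weighted average of locally trained parameters, in the spirit of federated averaging, a commonly used aggregation rule. The sketch assumes each participant sends back a flat list of model weights plus the number of examples it trained on. The function name `federated_average` and the two hospital updates are hypothetical, and real systems add secure communication and many training rounds on top of this.

```python
def federated_average(client_updates):
    """Average client weight vectors, weighting each by its local sample count.

    client_updates is a list of (weights, n_samples) tuples; raw data never appears here.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(weights[j] * n for weights, n in client_updates) / total
        for j in range(dim)
    ]


# Hypothetical updates from two hospitals with different dataset sizes.
updates = [
    ([1.0, 2.0], 100),  # hospital A: local weights, 100 local examples
    ([3.0, 4.0], 300),  # hospital B: local weights, 300 local examples
]

global_weights = federated_average(updates)
print(global_weights)
```

Only the weight vectors and sample counts cross institutional boundaries in this sketch. The participant with more data pulls the shared model further toward its own parameters.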