Why AI Chat Tools Give Wrong Answers and How to Reduce Them

Why do AI chat tools give wrong answers? It is one of the most common questions users ask, and for good reason. These tools feel intelligent, confident, and fast, yet they sometimes give responses that are incomplete, outdated, misleading, or simply incorrect.

At first glance, this seems confusing. After all, AI chat tools are trained on massive amounts of data and use advanced machine learning models. So why do mistakes still happen?

The answer lies not in a single flaw, but in a combination of how AI is trained, how it predicts text, and how humans interact with it. More importantly, many of these errors can be reduced significantly if users understand the limitations and learn how to work with AI more effectively.

This article explores the real reasons behind AI inaccuracies and, more importantly, explains how to reduce wrong answers in practical, everyday use.

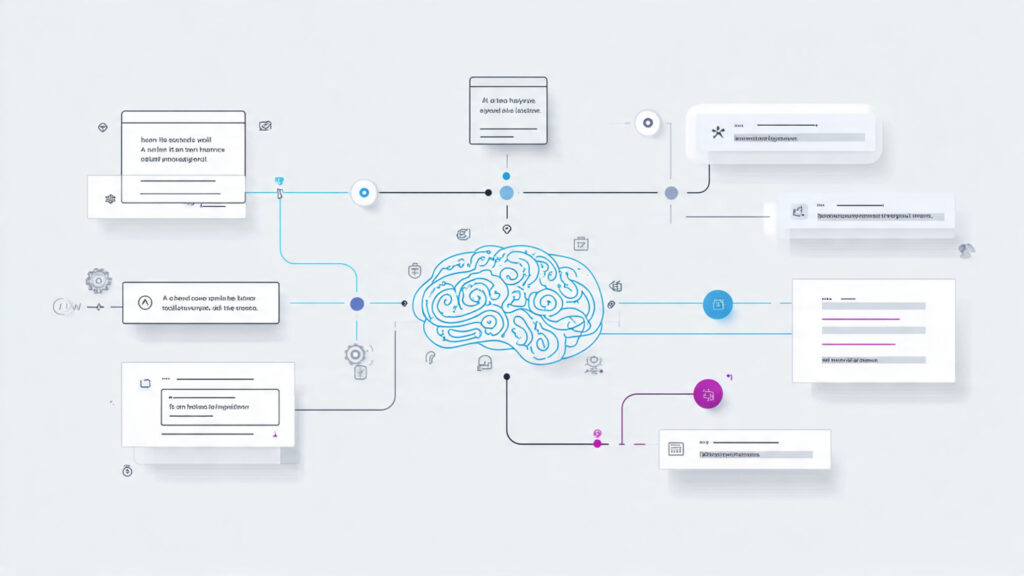

How AI Chat Tools Actually Work (Not How We Think They Work)

Before understanding mistakes, it’s essential to understand how AI chat tools generate answers.

AI chat systems do not “know” facts in the human sense. Instead, they build a response one word at a time (more precisely, one token at a time), picking each from a probability distribution learned from patterns in their training data. In other words, they generate responses based on probability, not understanding.

As a result:

- AI does not verify facts in real time unless it is connected to a search tool

- AI does not reason like a human expert

- AI does not truly understand truth or falsehood

Because of this, an answer that sounds correct may still be wrong.
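To make prediction by probability concrete, here is a minimal Python sketch. The prompt, the candidate words, and every probability in it are invented for illustration; real models work with far larger vocabularies and learned probabilities. The only point is that a plausible but wrong word can carry enough probability to be chosen regularly.

```python
import random

# Hypothetical next-word probabilities for the prompt
# "The capital of Australia is". All numbers are made up for illustration.
NEXT_WORD_PROBS = {
    "Canberra": 0.55,   # correct
    "Sydney": 0.40,     # plausible but wrong
    "Melbourne": 0.05,  # plausible but wrong
}


def sample_next_word(probs, rng):
    """Pick one word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]


def count_samples(probs, n, seed=0):
    """Sample n times and count how often each word is chosen."""
    rng = random.Random(seed)
    counts = {word: 0 for word in probs}
    for _ in range(n):
        counts[sample_next_word(probs, rng)] += 1
    return counts


if __name__ == "__main__":
    # Prints a dictionary of counts per word for 1000 simulated answers.
    print(count_samples(NEXT_WORD_PROBS, 1000))
```

In this toy setup, the wrong answers together are chosen a large share of the time, and each one would read just as fluently as the correct one.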

Reason 1: AI Predicts Language, Not Truth

One major reason AI chat tools give wrong answers is that they are designed to produce plausible text, not guaranteed facts.

When you ask a question, the AI predicts what a helpful answer should sound like. If the training data contains mixed, outdated, or conflicting information, the model may produce an answer that appears confident but lacks accuracy.

Therefore, confidence in tone should never be mistaken for correctness.

Reason 2: Training Data Has Limits and Gaps

AI models are trained on vast datasets—but vast does not mean complete or perfect.

Common data limitations include:

- Missing niche or regional knowledge

- Outdated information

- Overrepresentation of popular topics

- Underrepresentation of specialized domains

As a result, AI may struggle with:

- New laws or policies

- Recent scientific findings

- Local business or academic rules

- University-specific or company-specific procedures

Consequently, answers may be partially correct but contextually wrong.

Reason 3: Ambiguous or Vague User Prompts

Another overlooked reason AI chat tools give wrong answers is unclear user input.

AI chat tools usually do not ask clarifying questions unless they are prompted to. Therefore, when a question is vague, the model fills the gaps with assumptions, which may not match the user’s intent.

For example:

- Asking “Explain inheritance” could mean biology, OOP, or law

- Asking “Is this correct?” without context forces guessing

Because of this, vague prompts make wrong or off-target answers more likely.

Reason 4: Hallucinations in AI Responses

One of the most dangerous failure modes of AI chat tools is hallucination.

AI hallucination occurs when a model:

- Invents facts

- Creates fake citations

- Produces nonexistent definitions

- Assembles incorrect logic confidently

Importantly, hallucinations are not intentional. They happen because the model keeps generating plausible-sounding text even when it lacks reliable information, instead of saying that it does not know.

This is why AI may:

- Cite research papers that don’t exist

- Attribute quotes to the wrong person

- Explain concepts inaccurately but fluently

Reason 5: Overgeneralization From Patterns

AI learns from patterns. However, patterns do not always apply universally.

When an AI sees similar examples repeatedly, it may treat the pattern as a rule that applies everywhere, even when exceptions exist. As a result, answers can be correct in general but wrong for your specific case.

This is especially common in:

- Legal explanations

- Medical advice

- Academic grading policies

- Software behavior across versions

Therefore, AI responses should never replace expert validation in critical domains.

Reason 6: Lack of Real-World Context

AI chat tools operate without direct awareness of real-world situations. They do not know:

- Your environment

- Your institution’s rules

- Your project constraints

- Your personal background

Because of this, advice may be theoretically sound but practically unusable.

For example, AI may suggest tools, workflows, or solutions that are unavailable in your country, outdated in your system, or incompatible with your requirements.

Reason 7: Bias in Training Data

Bias in data leads to bias in answers.

If training data overrepresents certain viewpoints, cultures, or technologies, AI responses may reflect that imbalance. While safeguards exist, bias cannot be eliminated completely.

As a result:

- Some perspectives may be oversimplified

- Minority viewpoints may be missing

- Cultural assumptions may appear in answers

This is another reason AI output must be evaluated critically.

How to Reduce Wrong Answers From AI Chat Tools

Now that the causes are clear, let’s focus on how to reduce AI mistakes effectively.

1. Ask Clear, Specific Questions

The most effective way to reduce errors is to improve prompt clarity.

Instead of:

“Explain sorting”

Ask:

“Explain merge sort in C++ with time complexity and example”

The more context you provide, the fewer assumptions AI has to make.

2. Add Constraints to Your Prompt

Constraints guide AI behavior and narrow the range of answers it is likely to produce.

Helpful constraints include:

- Target audience

- Output format

- Assumptions

- Domain or field

For example:

“Explain inheritance in OOP for second-semester C++ students using simple language.”

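A prompt like this leaves far less room for the wrong interpretation, so the answer is more likely to be accurate and pitched at the right level.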
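If you write prompts often, the constraints listed above can be kept in a small reusable template. The following is a minimal sketch, not a standard tool: the function name `build_prompt`, its parameters, and the label wording are all assumptions made for illustration.

```python
def build_prompt(task, domain=None, audience=None, output_format=None,
                 assumptions=None):
    """Assemble a prompt from a task plus optional constraints.

    Hypothetical helper: the labels below are one possible wording,
    not a format that any particular AI tool requires.
    """
    lines = [task.strip()]
    if domain:
        lines.append(f"Domain: {domain}")
    if audience:
        lines.append(f"Audience: {audience}")
    if output_format:
        lines.append(f"Format: {output_format}")
    if assumptions:
        lines.append("Assumptions: " + "; ".join(assumptions))
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_prompt(
        "Explain inheritance.",
        domain="object-oriented programming in C++",
        audience="second-semester students",
        output_format="simple language with one short example",
    ))
```

Leaving a constraint out simply omits its line, so the same helper works for quick questions and for detailed ones.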

3. Ask for Step-by-Step Reasoning

When AI explains its reasoning, errors become easier to detect.

Prompts like:

- “Explain step by step”

- “Show reasoning”

- “Break this into clear steps”

make logical jumps and unsupported claims easier to spot, although they do not guarantee a correct answer.

4. Request Sources or Verification

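AI may not always provide reliable sources, but asking for them gives you something concrete to check.

For example:

“Explain this, mention where this concept is commonly used, and list sources I can check.”

This encourages grounded responses rather than speculative ones. Confirm that each source actually exists and says what the AI claims, because citations can be invented too.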

5. Cross-Check Critical Information

AI should be treated as a support tool, not a final authority.

Always verify:

- Medical information

- Legal explanations

- Academic rules

- Financial advice

Using AI as a first draft or idea generator is far safer than treating it as a final decision-maker.

6. Use AI Iteratively, Not Once

One powerful technique is iterative prompting.

First response → refine → clarify → correct

For example:

“That explanation seems vague. Rewrite it with a real-world example.”

This conversational refinement significantly improves quality.
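The refine-and-clarify loop can also be sketched in a few lines of Python. This is only an illustration under stated assumptions: `refine` is a hypothetical helper, and `fake_model` is a stand-in that takes the place of a real chat tool so the example runs on its own. The point it shows is that every follow-up is sent together with the conversation so far, so the tool can correct its earlier answer instead of starting over.

```python
from typing import Callable, List


def refine(ask: Callable[[str], str], question: str,
           follow_ups: List[str]) -> List[str]:
    """Ask a question, then send each follow-up with the full history.

    `ask` is any function that takes the conversation text and returns
    a reply. Returns every answer in the order it was received.
    """
    transcript = f"User: {question}"
    answer = ask(transcript)
    answers = [answer]
    for follow_up in follow_ups:
        # Keep the earlier answer in the history so it can be corrected.
        transcript += f"\nAssistant: {answer}\nUser: {follow_up}"
        answer = ask(transcript)
        answers.append(answer)
    return answers


def fake_model(transcript: str) -> str:
    """Stand-in for a real chat tool: reports how many user turns it saw."""
    return f"(draft {transcript.count('User:')})"


if __name__ == "__main__":
    drafts = refine(
        fake_model,
        "Explain recursion.",
        ["That explanation seems vague. Rewrite it with a real-world example."],
    )
    print(drafts)
```

With a real chat tool in place of `fake_model`, each later draft would be a revision shaped by your feedback.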

7. Know When NOT to Use AI

Finally, knowing the limits matters.

AI should not replace:

- Professional judgment

- Expert diagnosis

- Ethical decisions

- High-stakes approvals

Understanding this boundary prevents misuse and disappointment.

The Human Role in AI Accuracy

AI accuracy is not only a technical issue—it is also a human interaction issue.

When users:

- Ask better questions

- Apply critical thinking

- Validate results

- Understand limitations

AI becomes far more reliable and useful.

In contrast, blind trust almost guarantees mistakes.

Conclusion: Using AI Wisely, Not Blindly

Understanding why AI chat tools give wrong answers helps users shift from frustration to control.

AI is not broken—it is predictive, probabilistic, and imperfect. However, when used correctly, it becomes an incredibly powerful assistant for learning, productivity, and problem-solving.

The key is simple:

AI works best when humans guide it intelligently.

By improving prompts, applying verification, and respecting limitations, users can dramatically reduce errors and unlock the real value of AI chat tools.